How FreshTracks Canada Reduced Regression Effort and Caught Critical Bugs Faster with 10% Automation in Just a Few Weeks

Manual QA testing can significantly drain development resources. FreshTracks Canada spent excessive hours on manual regression testing, causing delayed releases and developer frustration. These testing limitations directly impacted their business operations: missed deadlines, increased overtime, and critical bugs reaching production.

To transform their QA processes, FreshTracks partnered with ThinkSys. By strategically automating their highest-priority test cases using Playwright, FreshTracks Canada achieved substantial improvements within just a few weeks.

This case study explores the implementation strategy, automation journey, and tangible benefits FreshTracks Canada experienced.

About FreshTracks Canada

FreshTracks Canada plans custom travel experiences for adventurous travelers exploring North America. As a digital-first company, they rely heavily on software to manage logistics and support their customers. But their quality assurance process needed work.

The QA team had to manually work through more than 8,000 test cases in every regression cycle. Each cycle took 2 to 3 weeks and consumed a large share of the development team’s time.

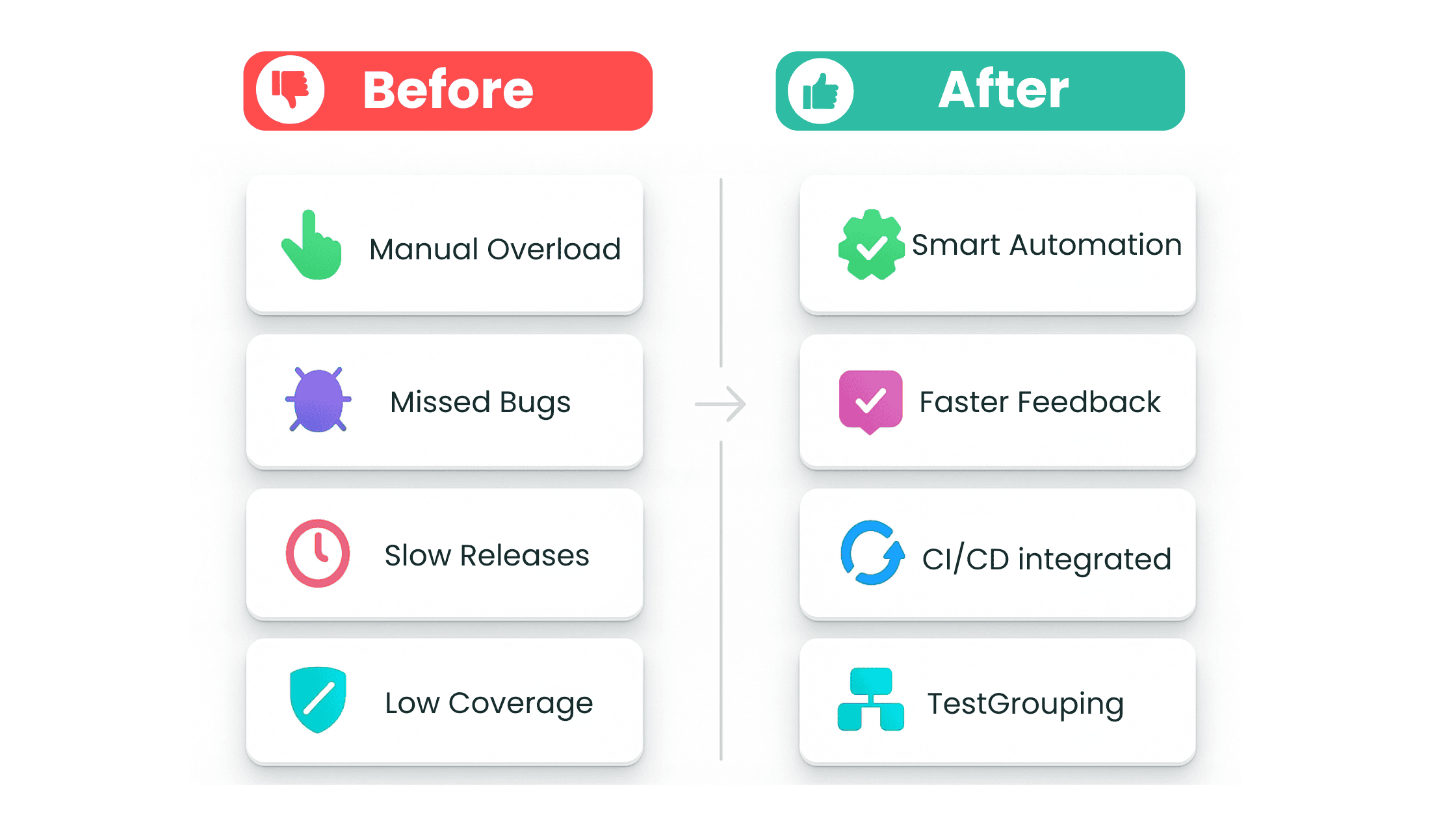

Here’s a list of all the problems they were facing:

- Manual testing of thousands of test cases created a massive bottleneck that slowed down the entire development process.

- Previous automation attempts fell short, forcing the team back to time-consuming manual testing.

- Critical software updates and new features stayed in testing far too long, frustrating both developers and customers.

- The QA team struggled to catch issues early in development when fixes would be faster and less expensive.

Solution

ThinkSys introduced a structured automation solution:

- Tool Selection: ThinkSys chose Playwright as the automation framework for several reasons: fast test execution, support for multiple browsers (Chromium, Firefox, and WebKit) through a single API, built-in API testing, powerful debugging tools, and an open-source license.

- Framework Development: The team built a custom automation framework tailored to FreshTracks’ testing requirements. It follows established best practices and was designed to stay maintainable and scalable as testing needs grow.

- Test Case Optimization: Through careful analysis and strategic grouping, the team consolidated 8,000 micro-test cases into approximately 2,000 automated test cases. This optimization improved efficiency while maintaining comprehensive test coverage.

- Strategic Implementation: The initial automation phase focused on scenarios marked critical, highest, or high priority, so the most important functionality was tested thoroughly from the start.

- Pipeline Integration: Working closely with the DevOps team, ThinkSys integrated the automated tests into the CI/CD pipeline. The tests now run automatically in both the development and staging environments, giving quick feedback on code changes.
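The case study does not say how the 8,000 micro-test cases were grouped or how priorities were recorded. The sketch below is a hypothetical illustration of the consolidation and prioritization steps, assuming each manual case stores a user flow and a priority (field names and data are made up). It merges cases that share a flow into one automated test while keeping every individual check, then selects the critical, highest, and high-priority tests for the first phase. It is plain TypeScript with no Playwright dependency.

```typescript
// Hypothetical record for one manual micro-test case; field names are assumptions.
type Priority = "critical" | "highest" | "high" | "medium" | "low";

interface MicroTestCase {
  id: string;
  flow: string; // user flow the case exercises (hypothetical grouping key)
  priority: Priority;
  check: string; // the single thing the manual case verified
}

interface ConsolidatedTest {
  flow: string;
  priority: Priority; // most important priority among the merged cases
  checks: string[];
  sourceIds: string[];
}

// Lower rank = more important.
const PRIORITY_RANK: Record<Priority, number> = {
  critical: 0,
  highest: 1,
  high: 2,
  medium: 3,
  low: 4,
};

// Merge micro cases that share a flow into one automated test,
// keeping every individual check so coverage is preserved.
function consolidate(cases: MicroTestCase[]): ConsolidatedTest[] {
  const byFlow = new Map<string, ConsolidatedTest>();
  for (const c of cases) {
    const existing = byFlow.get(c.flow);
    if (!existing) {
      byFlow.set(c.flow, {
        flow: c.flow,
        priority: c.priority,
        checks: [c.check],
        sourceIds: [c.id],
      });
      continue;
    }
    existing.checks.push(c.check);
    existing.sourceIds.push(c.id);
    // A merged test inherits the highest priority of any case it absorbed.
    if (PRIORITY_RANK[c.priority] < PRIORITY_RANK[existing.priority]) {
      existing.priority = c.priority;
    }
  }
  return Array.from(byFlow.values());
}

// Phase 1 scope: only critical, highest, and high-priority tests, most important first.
const PHASE_ONE: ReadonlySet<Priority> = new Set<Priority>(["critical", "highest", "high"]);

function selectPhaseOne(tests: ConsolidatedTest[]): ConsolidatedTest[] {
  return tests
    .filter((t) => PHASE_ONE.has(t.priority))
    .sort((a, b) => PRIORITY_RANK[a.priority] - PRIORITY_RANK[b.priority]);
}

// Made-up inventory for illustration only.
const inventory: MicroTestCase[] = [
  { id: "TC-1", flow: "export", priority: "high", check: "CSV downloads" },
  { id: "TC-2", flow: "export", priority: "critical", check: "CSV contains all rows" },
  { id: "TC-3", flow: "search", priority: "medium", check: "empty query shows hint" },
  { id: "TC-4", flow: "booking", priority: "highest", check: "confirmation email sent" },
];

const phaseOne = selectPhaseOne(consolidate(inventory));
console.log(phaseOne.map((t) => `${t.flow}:${t.priority}`)); // [ 'export:critical', 'booking:highest' ]
```

In an actual Playwright suite, the same phase-1 scope could instead be expressed by tagging tests (for example `@critical`) and running `npx playwright test --grep "@critical|@highest|@high"`; the tag names are illustrative.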

Results and Future Opportunities

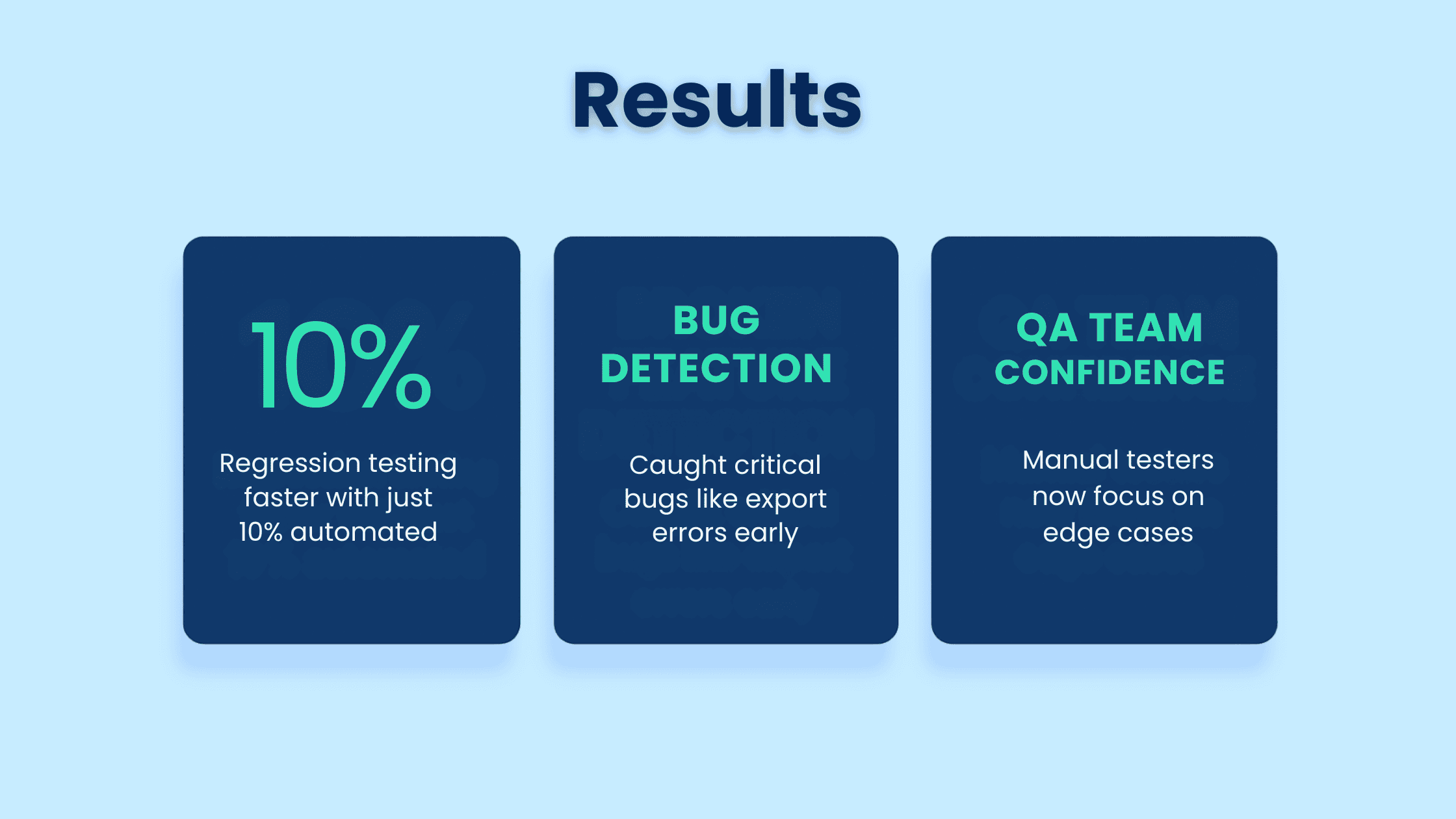

When all the changes were implemented, the difference was evident:

- Automation Progress: Even automating just 10% of the test suite in the initial phase made a noticeable difference. Regression testing became faster and less of a burden on the team.

- Caught Major Issues Early: The automated tests caught important problems, such as broken export features, before they reached production. That helped avoid bigger headaches down the line.

- Error Message Testing: Tests revealed inconsistent error messages across the application that needed to be standardized and made clearer.

- Data Sorting: Automated checks spotted incorrect sorting in complex data scenarios that manual testing might have missed.

- More Confidence for the QA Team: With automated checks handling the basics, the manual testers could focus on tricky edge cases. That made the whole team feel more confident about the quality of the software.

- Regression Testing: The final testing phase now runs more efficiently because automated tests handle the repetitive checks.
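The case study does not describe how the sorting checks worked. As a hypothetical sketch, an automated sort check can read the values shown in a column and report the first row that breaks the expected order. The price format, helper names, and sample values below are assumptions; one common bug this kind of check catches is numbers being sorted as text.

```typescript
// Returns the index of the first element that breaks the expected order, or -1 if sorted.
function firstOutOfOrder<T>(values: readonly T[], compare: (a: T, b: T) => number): number {
  for (let i = 1; i < values.length; i++) {
    if (compare(values[i - 1], values[i]) > 0) {
      return i;
    }
  }
  return -1;
}

// Case-insensitive comparison that orders embedded numbers naturally ("Trip 2" before "Trip 10").
const naturalCompare = (a: string, b: string): number =>
  a.localeCompare(b, undefined, { numeric: true, sensitivity: "base" });

// Hypothetical cell format such as "$1,250.00": keep only digits, '.', and '-' before parsing.
const parsePrice = (cell: string): number => Number(cell.replace(/[^0-9.-]/g, ""));
const byPriceAsc = (a: string, b: string): number => parsePrice(a) - parsePrice(b);

// Made-up values, as a test might read them from a price column that should be ascending.
const displayed = ["$950.00", "$1,250.00", "$980.00"];
const badIndex = firstOutOfOrder(displayed, byPriceAsc);
if (badIndex !== -1) {
  console.log(`Row index ${badIndex} (${displayed[badIndex]}) is out of order`);
}

// A plain string sort puts "$1,250.00" before "$950.00"; the numeric check flags it.
const lexical = displayed.slice().sort();
console.log(firstOutOfOrder(lexical, byPriceAsc)); // 1
```

In a Playwright test, the column values could come from something like `await page.locator("td.price").allTextContents()`, where the selector is hypothetical.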

Automation Implementation Steps

Step 1: Cleaned Up and Restructured the Test Suite

Step 2: Selected the Right Tool

Step 3: Built a Custom Automation Framework

Step 4: Prioritized Critical Scenarios in Phase 1

Step 5: Integrated Tests into the CI/CD Pipeline
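Step 5 is only named above. One hedged illustration of pointing a single Playwright suite at different environments from a CI/CD pipeline is a `playwright.config.ts` that reads the target URL from an environment variable. `BASE_URL`, the `./tests` directory, and the report path are assumptions, not details from the FreshTracks project. The `CI` variable is set automatically by most CI providers.

```typescript
// playwright.config.ts: a minimal sketch, not FreshTracks' actual configuration.
import { defineConfig, devices } from "@playwright/test";

// BASE_URL is a hypothetical variable the pipeline sets per environment (dev or staging).
const baseURL = process.env.BASE_URL ?? "http://localhost:3000";

export default defineConfig({
  testDir: "./tests",
  // Fail the CI run if a test.only was committed by accident.
  forbidOnly: !!process.env.CI,
  // Retry failures on CI only.
  retries: process.env.CI ? 2 : 0,
  // Console output plus a JUnit XML file that most CI servers can display.
  reporter: [["list"], ["junit", { outputFile: "results/junit.xml" }]],
  use: {
    baseURL,
    // Record a trace when a test is retried, for debugging failures.
    trace: "on-first-retry",
  },
  projects: [
    { name: "chromium", use: { ...devices["Desktop Chrome"] } },
    { name: "firefox", use: { ...devices["Desktop Firefox"] } },
    { name: "webkit", use: { ...devices["Desktop Safari"] } },
  ],
});
```

A pipeline stage could then run, for example, `BASE_URL=https://staging.example.com npx playwright test` after each staging deployment.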

Conclusion

Thanks to ThinkSys’ help with test automation, FreshTracks Canada made big improvements to their QA process. Their team now spends less time on repetitive tasks and more time focusing on complex testing. That’s led to smoother releases and happier customers.

Want to see similar results? ThinkSys can help you catch bugs early, speed up testing, and make your releases more reliable, so your team can focus on building great software.
