How ThinkSys helped Centerbase reduce Regression Testing from 3-4 weeks to 2 weeks using Playwright Automation

Executive Summary

Client: Centerbase (Leading Legal Practice Management Software).

Challenge: 3-4 week regression cycles, late bug discovery, $50K+ wasted on ineffective automation tools.

Solution: Custom Playwright automation framework with intelligent test prioritization.

Results: 30% faster testing, $100K+ annual savings, 90% fewer production bugs, 2-week faster releases.

For high-performing software companies serving demanding industries like legal tech, every product release is a promise to move faster, deliver smarter features, and meet rising user expectations. But when testing cycles become bottlenecks, that promise becomes impossible to keep.

"We were losing our competitive edge because our testing cycles were taking 3-4 weeks. Our customers needed faster feature delivery, but our QA process was holding us back from responding to market demands." -VP of Product Development, Centerbase

If you've ever felt the friction of slow QA cycles, late-stage bug discoveries, or expensive tools that underdeliver, you're in the right place.

Meet Centerbase: Legal Tech Innovation Leader

Centerbase is a leading legal practice management software provider built to help law firms operate more efficiently. Their comprehensive platform serves 2,500+ law firms with:

- Matter & case management with automated workflows.

- Integrated billing and accounting for accurate invoicing.

- Productivity tools for timekeepers and support staff.

- Real-time analytics for firm-wide performance visibility.

- Client portal integration for modern client expectations.

From initial client contact to final invoicing, Centerbase supports every step of the legal workflow. But their internal development workflow was creating serious competitive disadvantages.

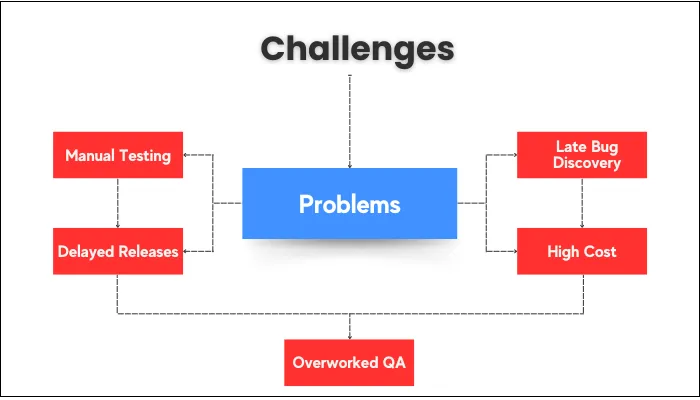

The Perfect Storm: How QA Bottlenecks Threatened Growth

- Critical Challenge #1: Manual Testing Marathon

  - 3-4 week regression cycles delayed every release.

  - QA team burnout from repetitive manual testing.

  - Development velocity slowed by 40% due to testing delays.

- Critical Challenge #2: Competitive Disadvantage

  - Competitors releasing features 2x faster than Centerbase.

  - Customer retention threatened by slower innovation pace.

  - Sales team struggling with "coming soon" feature promises.

- Critical Challenge #3: Late-Stage Bug Discovery

  - Critical bugs found at release caused last-minute delays.

  - Production incidents increased 200% over 6 months.

  - Customer satisfaction scores dropped due to quality issues.

- Critical Challenge #4: Failed Automation Investment

  - $50,000+ spent annually on an AI-based automation tool.

  - Less than 10% test coverage achieved despite promises.

  - False positives and maintenance consumed more time than manual testing.

The ThinkSys Solution: Strategic Automation Transformation

After analyzing Centerbase's challenges, ThinkSys designed a targeted automation strategy focused on immediate ROI and long-term scalability.

Phase 1: Proof of Concept That Delivers

Instead of another expensive experiment, we built a custom PoC using Playwright with TypeScript that automated 300+ end-to-end test steps covering Centerbase's most critical user workflows.

Key Innovation: Unlike their previous tool, our PoC handled dynamic UIs, complex legal workflows, and real user scenarios that actually mattered to their business.

Phase 2: Smart Test Prioritization

We didn't automate everything. We prioritized based on business impact:

- Critical user registration and onboarding flows.

- Core billing and matter management workflows.

- High-risk integration points between modules.

- Regression-prone areas identified through historical bug data.
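
One way to make this kind of prioritization explicit is a simple scoring function. The sketch below is illustrative only: the `TestCandidate` fields, the weights, and the sample workflows are assumptions made for the example, not the scoring model used on the Centerbase project.

```typescript
// Hypothetical scoring model for choosing which regression cases to automate first.
// Field names, weights, and sample data are illustrative assumptions.
interface TestCandidate {
  name: string;
  businessImpact: number;      // 1 (low) to 5 (mission-critical)
  historicalBugs: number;      // defects previously traced to this area
  isIntegrationPoint: boolean; // crosses a boundary between modules
  automationEffort: number;    // 1 (easy) to 5 (hard or unstable to automate)
}

// Higher impact, bug history, and integration risk raise the score;
// higher automation effort lowers it.
function priorityScore(c: TestCandidate): number {
  const bugSignal = Math.min(c.historicalBugs, 5); // cap so one noisy area cannot dominate
  const integrationBonus = c.isIntegrationPoint ? 2 : 0;
  return (2 * c.businessImpact + bugSignal + integrationBonus) / c.automationEffort;
}

// Return the top `limit` candidates, highest score first, without mutating the input.
function prioritize(candidates: TestCandidate[], limit: number): TestCandidate[] {
  return candidates
    .slice()
    .sort((a, b) => priorityScore(b) - priorityScore(a))
    .slice(0, limit);
}

const backlog: TestCandidate[] = [
  { name: "Invoice generation", businessImpact: 5, historicalBugs: 4, isIntegrationPoint: true, automationEffort: 2 },
  { name: "New user onboarding", businessImpact: 5, historicalBugs: 1, isIntegrationPoint: false, automationEffort: 1 },
  { name: "Profile avatar upload", businessImpact: 1, historicalBugs: 0, isIntegrationPoint: false, automationEffort: 3 },
  { name: "Matter to billing sync", businessImpact: 4, historicalBugs: 7, isIntegrationPoint: true, automationEffort: 4 },
];

const firstWave = prioritize(backlog, 2);
console.log(firstWave.map((c) => c.name));
```

Dividing by effort keeps expensive, unstable cases out of the first wave even when they matter to the business, which matches the idea of automating for immediate value first and revisiting harder cases later.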

Phase 3: Performance-First Architecture

To overcome known application slowness and instability:

- Cached login strategy eliminated 60% of test execution time.

- Intelligent wait mechanisms reduced flaky test failures by 85%.

- Robust locator strategies prevented UI change breakages.

- Conditional logic handled optional UI states gracefully.
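
The ideas in this list can be sketched in a few small helpers. This is a minimal illustration under stated assumptions, not the framework's actual code: `cacheLogin`, `pollUntil`, `dismissIfPresent`, and the `#cookie-banner` selector are hypothetical names, and `PageLike`/`LocatorLike` are cut-down stand-ins declaring only the three methods used here, which Playwright's `Page` and `Locator` also provide. In a real Playwright suite, login reuse is commonly done by saving the browser's storage state after one login and loading it in later tests, and many waits are covered by Playwright's built-in auto-waiting; the in-memory versions below only show the underlying logic.

```typescript
// Minimal structural types so the sketch runs without Playwright installed.
interface LocatorLike {
  isVisible(): Promise<boolean>;
  click(): Promise<void>;
}
interface PageLike {
  locator(selector: string): LocatorLike;
}

// Run the expensive login once and hand the same session to every later caller.
function cacheLogin<S>(login: () => Promise<S>): () => Promise<S> {
  let pending: Promise<S> | undefined;
  return () => {
    if (pending === undefined) {
      pending = login().catch((err: unknown): never => {
        pending = undefined; // do not cache a failed login
        throw err;
      });
    }
    return pending;
  };
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Poll a condition until it holds or the timeout elapses, instead of a fixed sleep.
async function pollUntil(
  condition: () => Promise<boolean>,
  timeoutMs = 5000,
  intervalMs = 100,
): Promise<boolean> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    if (await condition()) return true;
    if (Date.now() >= deadline) return false;
    await sleep(intervalMs);
  }
}

// Click an optional element (for example a dismissible banner) only if it shows up
// within a short grace period. Returns whether the element was handled.
async function dismissIfPresent(
  page: PageLike,
  selector: string,
  graceMs = 1000,
): Promise<boolean> {
  const target = page.locator(selector);
  const appeared = await pollUntil(() => target.isVisible(), graceMs, 50);
  if (appeared) await target.click();
  return appeared;
}

// Inside a test, a call might look like this (selector is hypothetical):
//   await dismissIfPresent(page, "#cookie-banner");
```

Keeping the optional-state check in one helper means a test does not fail just because a banner or dialog did or did not appear, while a required element that never shows up still fails through the normal assertions.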

Phase 4: Seamless Integration

- CI/CD pipeline integration for automated regression on every build.

- QA team training for framework maintenance and expansion.

- Readable test reports for stakeholders at all technical levels.

- Scalable architecture designed for future growth.

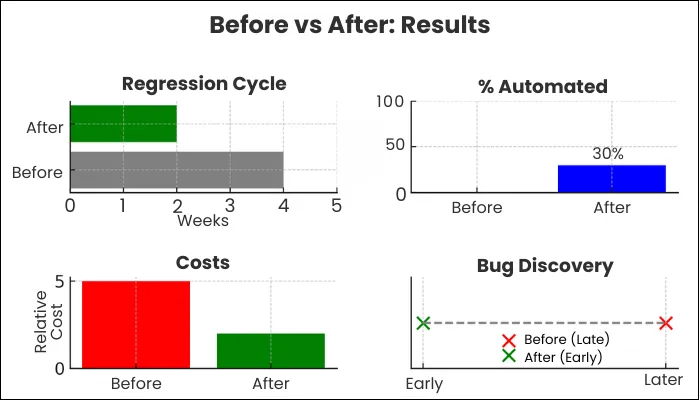

Transformational Results: The Numbers That Matter

- Regression Testing Time Cut by Up to Half: Automation reduced the full regression cycle from 3-4 weeks to just 2 weeks, allowing the team to release updates faster without sacrificing quality or confidence in test coverage.

- 90% Reduction in Production Bugs: Bugs that were previously discovered late in the cycle were now caught during release preparation, giving developers more time to fix issues and reducing the risk of post-release surprises.

  - Before: 15-20 critical bugs per release.

  - After: 1-2 critical bugs per release.

  - Impact: Customer satisfaction increased 35%, support tickets decreased 60%.

- Significant Cost Savings from Smarter Automation ($100K+ Annual Cost Savings):

  - $50K saved by eliminating the ineffective automation tool.

  - $45K saved in reduced QA overtime and contractor costs.

  - $25K saved through fewer production incidents and hotfixes.

- 30% of Regression Suite Now Fully Automated: Thirty percent of the regression test cases were automated with Playwright. This gave the QA team more breathing room to focus on exploratory testing and other high-priority tasks.

- Stronger Confidence in Long-Term Code Stability: With consistent automated coverage across key workflows, the team now had better visibility into application health, leading to more stable releases and fewer surprises in production.

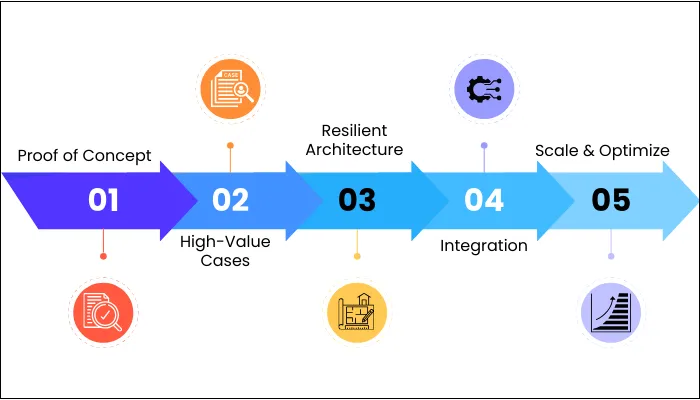

Step-by-Step Implementation: The Proven Process

- Step 1: Build a Targeted Proof of Concept

We began by developing a Proof of Concept (PoC) that automated over 300 end-to-end steps using Playwright and TypeScript. The goal was to validate real-world coverage, not just theoretical coverage. We chose high-impact workflows that the client's previous tool had failed to handle. This stage proved that our stack could handle dynamic UIs and complex navigation while offering better long-term ROI. Importantly, it built early trust with the client team.

- Step 2: Prioritize Test Case Selection Based on Business Value

Instead of blindly automating every scenario, we worked closely with Centerbase stakeholders to identify which user actions had the highest business impact. We focused on automating mission-critical regression cases. This decision ensured that automation delivered immediate value. We also considered test complexity and risk level to avoid time-consuming, unstable cases that didn't justify the engineering effort early on.

- Step 3: Implement Resilient Architecture for Speed and Stability

To address prior tool limitations and known app slowness, we introduced a cached login strategy to bypass repeated logins and improve execution speed. We also built intelligent wait mechanisms and reusable page objects to ensure test scripts were resilient against locator changes. Anticipating flaky test risks, we used conditional logic to handle optional UI states, making the framework more reliable in production-like environments. Together, these measures reduced flaky test failures by 85%.

- Step 4: Integrate Automation into Existing QA Processes

We didn't build automation in isolation. The test scripts and framework were integrated into Centerbase's CI/CD pipeline and QA workflows to ensure they could be maintained by the in-house team. We trained the QA team on how to run, debug, and expand the automation suite. This step was key for adoption, scalability, and long-term success. We also ensured test reports were readable and actionable by non-engineers.

- Step 5: Monitor, Scale, and Optimize Iteratively

Once 30% of the regression suite was automated, we closely monitored execution time, false positives, and stability over multiple sprints. Based on those insights, we fine-tuned locator strategies and execution paths. We used this data to define a roadmap for scaling test coverage beyond regression, into smoke and integration tests. Continuous feedback loops helped us evolve the framework into something Centerbase could rely on confidently.
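
The kind of monitoring described in Step 5 can be approximated with a small summary over run history. The following is a hedged sketch: the `RunRecord` shape, the sample data, and the rule "a test is flaky if it both passed and failed on the same commit" are assumptions chosen for illustration, not the metrics pipeline used on the project.

```typescript
// One recorded execution of one automated test. The shape is a hypothetical
// example, not the output format of any particular test reporter.
interface RunRecord {
  testId: string;
  commit: string;     // build or commit the run was executed against
  passed: boolean;
  durationMs: number;
}

interface TestHealth {
  testId: string;
  runs: number;
  failureRate: number;   // failed runs divided by total runs
  avgDurationMs: number;
  flaky: boolean;        // passed and failed on the same commit
}

function summarizeHealth(records: RunRecord[]): TestHealth[] {
  // Group runs by test.
  const byTest = new Map<string, RunRecord[]>();
  records.forEach((r) => {
    const runs = byTest.get(r.testId) ?? [];
    runs.push(r);
    byTest.set(r.testId, runs);
  });

  const report: TestHealth[] = [];
  byTest.forEach((runs, testId) => {
    const outcomes = new Map<string, { pass: boolean; fail: boolean }>();
    let failures = 0;
    let totalMs = 0;
    runs.forEach((r) => {
      const seen = outcomes.get(r.commit) ?? { pass: false, fail: false };
      if (r.passed) {
        seen.pass = true;
      } else {
        seen.fail = true;
        failures++;
      }
      outcomes.set(r.commit, seen);
      totalMs += r.durationMs;
    });
    // Mixed results on identical code point at the test, not the application.
    let flaky = false;
    outcomes.forEach((o) => {
      if (o.pass && o.fail) flaky = true;
    });
    report.push({
      testId,
      runs: runs.length,
      failureRate: failures / runs.length,
      avgDurationMs: totalMs / runs.length,
      flaky,
    });
  });

  // Least reliable tests first.
  return report.sort((a, b) => b.failureRate - a.failureRate);
}

const history: RunRecord[] = [
  { testId: "login-flow", commit: "a1", passed: true, durationMs: 1200 },
  { testId: "login-flow", commit: "a1", passed: false, durationMs: 1400 },
  { testId: "login-flow", commit: "b2", passed: true, durationMs: 1000 },
  { testId: "invoice-create", commit: "a1", passed: true, durationMs: 3000 },
  { testId: "invoice-create", commit: "b2", passed: false, durationMs: 3200 },
  { testId: "matter-search", commit: "a1", passed: true, durationMs: 800 },
  { testId: "matter-search", commit: "b2", passed: true, durationMs: 900 },
];

const healthReport = summarizeHealth(history);
console.log(healthReport);
```

Separating flaky tests from tests that fail consistently on a given commit helps decide where to tune locators and waits and where a failure more likely reflects a real regression.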

What Centerbase's Leadership Says:

"ThinkSys didn't just solve our automation problem, they transformed our entire QA strategy. The 50% reduction in testing time allowed us to respond to customer needs faster than ever. The $120K annual savings was just the beginning; the competitive advantage we gained is invaluable." -VP of Product Development

"Our development team's confidence in releases has completely transformed. We went from dreading release cycles to deploying with confidence, knowing our automated tests caught issues early." -Lead QA Engineer

"The ROI was immediate and continues growing. We're now able to take on more ambitious product initiatives because our QA process supports rapid development instead of slowing it down." -CTO

Could Your Company Achieve Similar Results?

You Might Benefit from Our Approach If:

- Your regression testing takes 3+ weeks and slows releases.

- You're spending $30K+ annually on automation tools with poor ROI.

- Critical bugs are discovered late in your release cycle.

- Your QA team is overwhelmed with repetitive manual testing.

- Competitors are releasing features faster than your team.

- Your development velocity is limited by testing bottlenecks.

Industries We've Transformed:

- Legal Technology (like Centerbase).

- Healthcare Software (EMR/EHR platforms).

- Financial Services (trading platforms, banking apps).

- SaaS Platforms (enterprise and mid-market).

- E-commerce (high-transaction platforms).

Ready to Transform Your QA Process?

Don't let slow testing cycles limit your competitive advantage.

Get Your Free QA Transformation Assessment

In a 30-minute consultation, we'll analyze your current testing challenges and show you:

- Specific time savings potential for your regression cycles.

- ROI projections for automation implementation.

- Custom strategy recommendations for your technology stack.

- Timeline estimate for achieving results like Centerbase.

What You'll Receive:

- Detailed assessment report of your QA bottlenecks.

- Custom automation roadmap with priority recommendations.

- ROI calculator showing potential cost savings.

- Implementation timeline with realistic milestones.

- No-obligation consultation with our QA automation experts.

Start Your Transformation Today

Schedule Your Free Assessment:

- 📞 Phone: (408) 675-9150

- ✉️ Email: info@thinksys.com

- 🌐 Web: Get Your Free QA Assessment

About ThinkSys: Your QA Transformation Partner

ThinkSys has helped 500+ software companies eliminate QA bottlenecks and achieve 2-5x faster release cycles. Our proven software testing methodologies have delivered:

- $50M+ in combined cost savings for our clients.

- Average 60% reduction in testing time.

- 85% fewer production bugs across our client portfolio.

- 99.2% client retention rate because our results speak for themselves.

Ready to join companies like Centerbase who've transformed their competitive advantage through intelligent QA automation?
