How to Implement a Comprehensive QA Strategy for Enterprise Software

Inefficient enterprise software is more common than most people think, and it drains a company’s revenue and prospects. One of the primary causes of poorly performing enterprise software is an ineffective QA strategy, which leaves gaps in testing coverage and results in frequent bugs and crashes.

This article explores the implementation of a comprehensive QA strategy for enterprise software and why your organization must have one.

Understanding Enterprise QA

Whenever enterprise software is mentioned, many people assume it means enterprise resource planning (ERP) software. ERP is one type of enterprise software, but it’s not the only one. Any software designed for use by an organization rather than a single user is considered enterprise software.

Enterprise software offers numerous features to organizations, some of which are essential. If any of these features break, the organization bears the cost. Enterprise QA involves a strategic testing approach that keeps the program stable and bug-free.

Unlike ad hoc testing approaches, a comprehensive QA strategy offers structured, proactive quality control across the entire software lifecycle. Here’s how this helps an organization:

- A well-defined QA strategy catches issues early, avoiding system downtime, data loss, compliance violations, and reputational damage.

- It translates business goals into testable objectives and maintains quality across business-critical workflows, aligning QA with business objectives.

- It provides robust integration testing and compatibility validation, ensuring seamless interaction with third-party tools, legacy systems, and APIs.

- With modern enterprise development adopting Agile and DevOps practices, a comprehensive QA strategy enables continuous testing and integrates seamlessly with CI/CD pipelines.

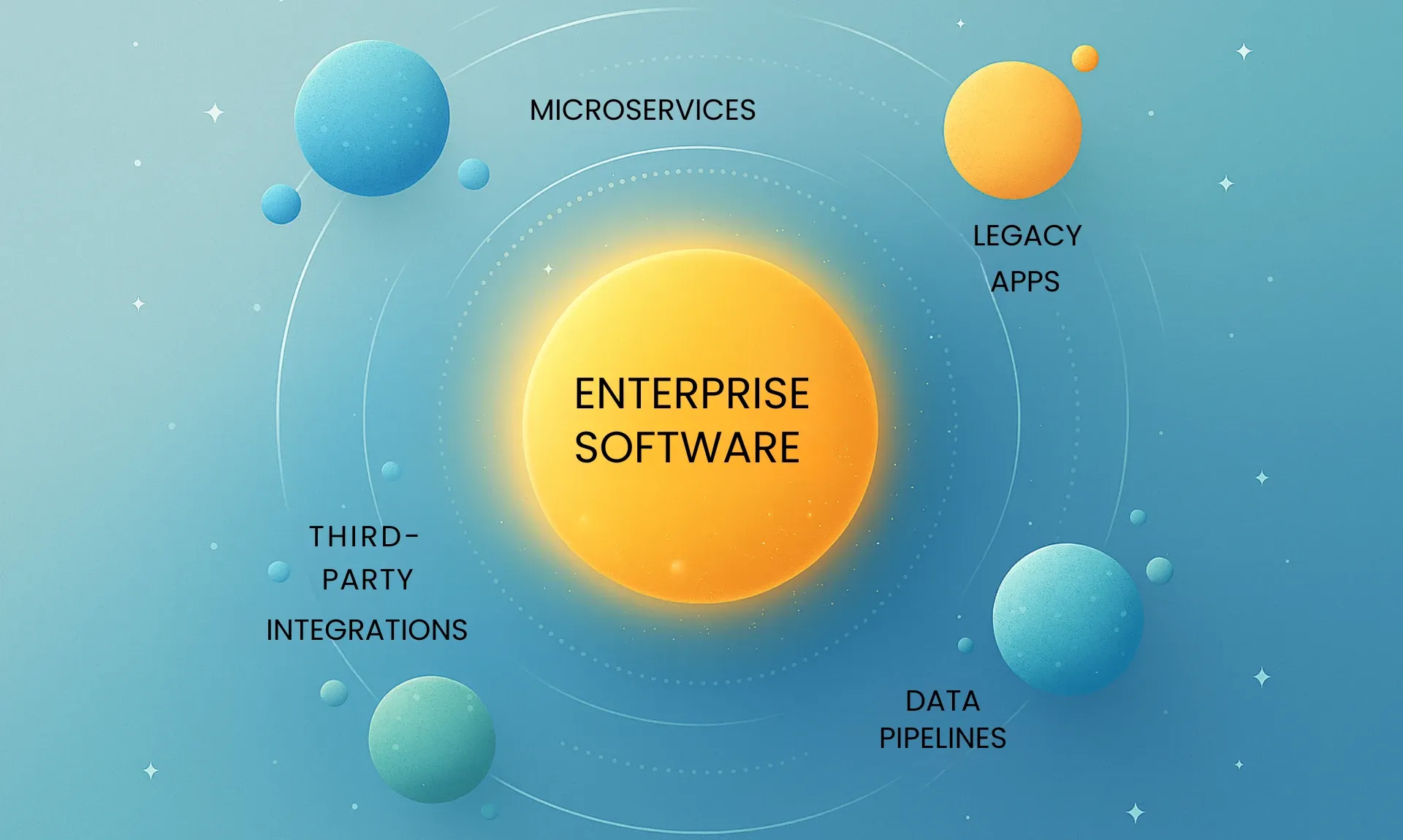

Scalability, complexity, and criticality are elements associated with enterprise software. Unlike consumer-grade applications, enterprise software must support mission-critical operations, comply with strict regulatory standards, and work across diverse infrastructures. All of these raise the stakes for QA teams, who may face the following challenges:

- Complex system architecture involving microservices, legacy apps, third-party integrations, and data pipelines.

- Frequent and parallel releases in short cycles.

- Executing a high volume of test cases.

- Cross-team and cross-department coordination.

- Ensuring a consistent and intuitive UX across all variations.

To overcome these challenges, organizations must adopt a structured, end‑to‑end QA framework that integrates quality into every stage of the software lifecycle. Here, you’ll explore the key components and best practices for implementing a comprehensive QA strategy, ensuring your enterprise software not only meets today’s rigorous standards but remains adaptable and reliable as your business grows.

1. Define Your QA Objectives

Before introducing any tools, test cases, or automation frameworks, it is crucial to establish clear QA objectives. These objectives serve as a strategic foundation, aligning the QA effort with business goals and ensuring that quality is defined, measurable, and actionable throughout the entire enterprise software lifecycle.

But first, you need to translate your business objectives into QA goals. And to do that, you need to:

- Understand the business context: QA leaders must engage with stakeholders, including product managers, business analysts, and compliance officers, to identify critical business processes and their impact.

- Map business-critical features to QA focus areas: For instance, if a business goal is to reduce order processing time by 30%, the QA goal may be to validate performance improvements across the entire order management workflow.

- Prioritize test coverage based on business value: Risk-based testing focuses on the areas with the highest business impact or the highest risk of failure.

With these actions, you can create a well-articulated set of QA objectives that help answer critical questions:

- What does quality mean for this system?

- Do you want to enhance automation?

- Are you looking to minimize defect leakage?

- What risks are you trying to mitigate?

A mature QA strategy balances different layers of testing, ensuring the software is not just functionally correct but also performs well, is secure, and delivers business value. For that, it’s necessary to maintain a balance between functional, non-functional, and business-driven testing objectives.

Functional testing verifies system behavior against requirements and user stories. Non-functional testing covers performance, scalability, security, usability, and accessibility. Business-level testing ensures the software supports business scenarios across departments and user roles.

Striking a balance between these three is essential to ensure that enterprise QA covers every aspect of the software. The following considerations can help you in your balancing strategy:

- Risk-Based Allocation: Assign more testing effort to areas that are business-critical or high-risk.

- Cross-Disciplinary Collaboration: QA should coordinate with DevOps, security, UX, and business teams to set cross-functional quality benchmarks.

- Matrix-Based Planning: Your test matrix should span functional, non-functional, and business workflows.

2. Evaluate Your Existing QA Process

Before creating a new QA strategy, it’s crucial to assess what already exists. This doesn’t apply to organizations implementing QA for the first time. But if your team already has any form of QA activity, whether manual or automated test cases, it’s important to evaluate and refine it.

With the correct evaluation, you retain what already works, identify inefficiencies, and address gaps systematically. Furthermore, a structured QA process review helps secure buy-in from leadership by demonstrating where improvements are required.

- Performing QA Maturity Assessment: A QA maturity assessment evaluates the effectiveness of your existing QA practices. It gives you a structured way to understand where your organization stands in terms of QA capabilities and what steps are needed to reach a higher maturity level.

- Define Levels: Utilize a recognized QA maturity model, such as the Capability Maturity Model Integration (CMMI) for Development or a similar framework. These models define several levels, each with specific characteristics related to processes, tools, and metrics.

- Collect Data: Data gives you clarity on the QA steps taken, activities planned, testing teams' skills, defect reports, and tools used. Gather this data through multiple channels.

- Analyze and Map Findings to Maturity Levels: In this step, you need to synthesize all collected data. Compare existing QA practices against the chosen model’s characteristics for each maturity level.

- Create a QA Maturity Assessment Report: With all the information gathered above, compile a detailed report that includes an executive summary, methodology, current state analysis, identified gaps, the assessed maturity level with justification, and prioritized, actionable recommendations for improvement to guide future strategy.

- Document Gaps: Documenting gaps is vital for justifying changes, prioritizing improvements, and planning the QA roadmap. Recording gaps in a structured, objective, and categorized format enables traceability and actionable resolution. Use the template below to document gaps:

| Gap Description | Impact | Cause | Affected Area | Current State vs. Desired State |
| --- | --- | --- | --- | --- |

Categorize the identified gaps to facilitate analysis and prioritization:

- Process Gaps: Issues related to missing, unclear, or inefficient QA processes.

- Data Gaps: Insufficient or unreliable data for decision-making.

- Tooling Gaps: Lack of appropriate tools.

- Communication Gaps: Breakdowns in communication or collaboration between teams.

- Skill Gaps: Deficiencies in the knowledge or expertise of the QA team.
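One way to keep gap records structured and categorized is to store each gap as a typed record. The following is a minimal Python sketch, not a prescribed format: the fields mirror the template columns above, while the `Gap` class, the `GapCategory` enum, the `count_by_category` helper, and the sample entries are hypothetical names and data chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class GapCategory(Enum):
    PROCESS = "Process"
    DATA = "Data"
    TOOLING = "Tooling"
    COMMUNICATION = "Communication"
    SKILL = "Skill"


@dataclass(frozen=True)
class Gap:
    """One row of the gap template, plus its category."""
    description: str
    impact: str
    cause: str
    affected_area: str
    current_state: str
    desired_state: str
    category: GapCategory


def count_by_category(gaps):
    """Return how many gaps were recorded in each category."""
    return Counter(gap.category for gap in gaps)


# Hypothetical entries, for illustration only.
gaps = [
    Gap(
        description="Regression suite is run manually before each release",
        impact="Release validation takes several days",
        cause="No automation framework in place",
        affected_area="Order management",
        current_state="Manual regression only",
        desired_state="Automated regression in CI",
        category=GapCategory.TOOLING,
    ),
    Gap(
        description="Acceptance criteria are missing from user stories",
        impact="Testers guess at expected behavior",
        cause="QA is not involved in requirements reviews",
        affected_area="All feature teams",
        current_state="Criteria written after development",
        desired_state="Criteria agreed before development starts",
        category=GapCategory.PROCESS,
    ),
]
```

Calling `count_by_category(gaps)` then gives a quick view of which category holds the most gaps, which can feed the prioritization of the QA roadmap.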

3. Risk-Based Testing

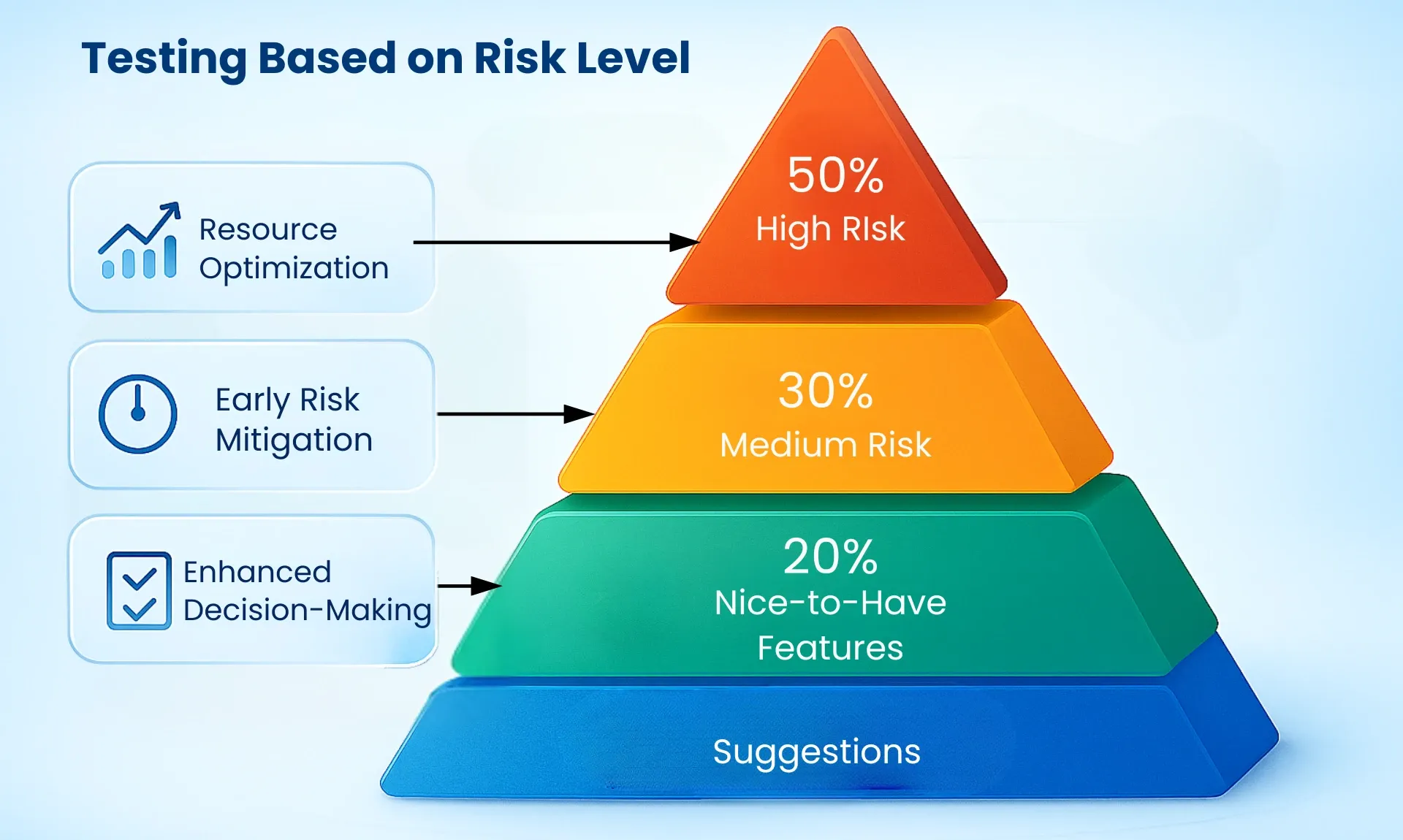

Testing modules in no particular order may confirm that each one works, but what if you tested a good-to-have feature first and left an essential feature for a later stage? Such a situation can delay the release and waste time that could have been spent fixing core functionality. Risk-based testing (RBT) avoids such scenarios by prioritizing tests for the areas that matter the most.

- Early Risk Mitigation: With early identification and prioritization of high-risk areas, you can focus on preventing defects, leading to fewer issues leaking into production.

- Enhanced Decision Making: RBT gives stakeholders clarity on the risks associated with different parts of the software, enabling informed decisions about acceptable risk levels and where to invest in future testing.

- Better Resource Allocation: RBT ensures that valuable resources are concentrated on the most critical functionalities, maximizing the return on testing investment.

- Assessing Various Modules: Assessing individual modules or features for risk involves evaluation of various factors that contribute to potential failure and its impact.

- List all key components, APIs, third-party integrations, and workflows.

- Now assess the likelihood of failure (LOF) based on factors including code complexity or change frequency, defect history, technology, technical debt, team expertise, and dependency on external systems.

- The next step is to evaluate the impact of failure based on its business impact. This could include financial loss, user experience disruption, operational interruption, or compliance exposure.

- Based on all the above-mentioned factors, you need to score the modules. For each factor, assign a numerical score. The scoring criteria should be consistent across all modules.

- Combine the scores to obtain an initial risk rating for each module, facilitating a preliminary ranking.
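The scoring steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 1-to-5 scale, the choice to average the factors within each group, and the choice to multiply likelihood by impact are assumptions rather than a rule from this article, and the `ModuleAssessment` class, the module names, and the scores are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ModuleAssessment:
    """Factor scores for one module, each on an assumed 1 (low) to 5 (high) scale."""
    name: str
    likelihood_factors: dict  # e.g. code complexity, change frequency, defect history
    impact_factors: dict      # e.g. financial loss, compliance exposure

    def likelihood(self):
        # Assumption: all likelihood factors carry equal weight.
        return mean(list(self.likelihood_factors.values()))

    def impact(self):
        # Assumption: all impact factors carry equal weight.
        return mean(list(self.impact_factors.values()))

    def risk_score(self):
        # Assumption: the initial rating is likelihood multiplied by impact.
        return self.likelihood() * self.impact()


def rank_by_risk(modules):
    """Return modules ordered from highest to lowest initial risk rating."""
    return sorted(modules, key=lambda module: module.risk_score(), reverse=True)


# Hypothetical modules and scores, for illustration only.
payments = ModuleAssessment(
    name="payments",
    likelihood_factors={"code_complexity": 4, "change_frequency": 5, "defect_history": 3},
    impact_factors={"financial_loss": 5, "compliance_exposure": 5},
)
reporting = ModuleAssessment(
    name="reporting",
    likelihood_factors={"code_complexity": 2, "change_frequency": 2, "defect_history": 2},
    impact_factors={"financial_loss": 2, "compliance_exposure": 1},
)

ranking = rank_by_risk([reporting, payments])
```

Keeping the same factors and the same scale for every module is what makes the resulting ranking comparable across modules.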

- Building a Risk Matrix: A risk matrix is a visual tool that maps the likelihood of a risk occurring against the potential impact it would have if it were to happen, and offers a structured way to prioritize risk mitigation.

| Likelihood / Impact | 1 - Negligible | 2 - Low | 3 - Moderate | 4 - High | 5 - Critical |
| --- | --- | --- | --- | --- | --- |
| 5 - Very likely | Medium Risk (5) | High Risk (10) | High Risk (15) | Critical Risk (20) | Extreme Risk (25) |
| 4 - Likely | Medium Risk (4) | Medium Risk (8) | High Risk (12) | High Risk (16) | Critical Risk (20) |
| 3 - Possible | Low Risk (3) | Medium Risk (6) | Medium Risk (9) | High Risk (12) | High Risk (15) |
| 2 - Unlikely | Low Risk (2) | Low Risk (4) | Medium Risk (6) | Medium Risk (8) | High Risk (10) |
| 1 - Rare | Minimal Risk (1) | Low Risk (2) | Low Risk (3) | Medium Risk (4) | Medium Risk (5) |

- Allocating QA Resources based on Risk: Upon identifying and prioritizing the risks, you need to allocate your QA resources tactically to maximize testing effectiveness.

- Extreme Risk: Allocate the most significant portion of your QA resources to this category and perform complete regression, integration, exploratory, and non-functional testing. As this is your top priority, you can allocate 38-42% of QA resources here.

- Critical Risk: This is another important area of QA where you need to prioritize regression, end-to-end, API testing, and performance testing. Being the second highest priority, you can allocate 28-32% of QA resources here.

- High-Risk: For high-risk areas, you can allocate mid-level testers or QA generalists to perform periodic exploratory tests, positive path testing, general functional testing, and essential integration checks. Assigning 13-17% of QA resources here is recommended.

- Medium Risk: For medium risk modules, you can assign junior testers or perform manual smoke tests and sanity testing post-deployment. You can allocate 8-10% of your QA resources for this category.

- Low Risk: Low-risk modules can be tested later. They include none of the critical features and mostly involve user acceptance testing. You can assign 3-5% of your QA resources here.
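The matrix lookup and the allocation ranges above can be combined in a small helper. The sketch below is one possible encoding, not a definitive tool: the risk labels are transcribed from the matrix in this section, the use of the midpoint of each percentage range is an assumption, and the names `RISK_MATRIX`, `ALLOCATION_RANGES`, `risk_level`, and `allocate_hours` are hypothetical. Because the midpoints do not add up to exactly 100%, a small share of hours stays unassigned, and the "Minimal" level gets no allocation because the article gives no range for it.

```python
# Rows are keyed by likelihood (1-5); each list is indexed by impact 1-5.
RISK_MATRIX = {
    5: ["Medium", "High", "High", "Critical", "Extreme"],
    4: ["Medium", "Medium", "High", "High", "Critical"],
    3: ["Low", "Medium", "Medium", "High", "High"],
    2: ["Low", "Low", "Medium", "Medium", "High"],
    1: ["Minimal", "Low", "Low", "Medium", "Medium"],
}

# Percentage ranges of QA resources per risk level, as listed above.
ALLOCATION_RANGES = {
    "Extreme": (38, 42),
    "Critical": (28, 32),
    "High": (13, 17),
    "Medium": (8, 10),
    "Low": (3, 5),
}


def risk_level(likelihood, impact):
    """Look up the risk label for a likelihood and impact score (both 1-5)."""
    if likelihood not in RISK_MATRIX or impact not in range(1, 6):
        raise ValueError("likelihood and impact must be integers from 1 to 5")
    return RISK_MATRIX[likelihood][impact - 1]


def allocate_hours(total_hours):
    """Split QA hours per risk level using the midpoint of each range (an assumption)."""
    return {
        level: total_hours * (low + high) / 2 / 100
        for level, (low, high) in ALLOCATION_RANGES.items()
    }
```

For example, `risk_level(4, 5)` returns `"Critical"`, and `allocate_hours(1000)` assigns 400 hours to the Extreme level.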

4. Implement a Test Automation Plan

Automation helps in the efficient and practical testing of enterprise software. Accelerating feedback cycles, improving regression testing reliability, freeing up manual testers, and boosting testing speeds are some of the reasons why automation should be part of your enterprise QA plan. Successful automation requires strategic planning and disciplined execution, in addition to using the right tools.

- How to Determine What to Automate?

The worst thing you can do is try to automate everything. It is neither feasible nor desirable. Strategic selection of test cases for automation is crucial for maximizing ROI and maintaining an effective testing pipeline.

- Prioritize Based on Risk: Earlier, you learned about risk-based testing, and now it is time to apply it. Automate critical paths, high-impact functionalities, and areas where a failure would cause severe defects. In addition, automate key functional flows and areas with a moderate change frequency.

- Automate Based on Test Case Characteristics: Automate repetitive test cases, stable functionalities, tests with predictable results, high-volume data tests, time-consuming tests, and performance or load tests.

- Automate Based on ROI: Compare the ROI of automating a test against the cost of running it manually. Automate tests where the automation effort is low relative to how often the test would otherwise be executed manually.
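The ROI comparison can be made concrete with a simple break-even estimate. This is a rough sketch under a simplified cost model, which is an assumption: a one-time build effort plus a per-run cost for automation (execution and upkeep), compared with a per-run cost for manual execution. The function names and all figures are hypothetical.

```python
import math


def break_even_runs(build_hours, manual_hours_per_run, automated_hours_per_run):
    """Smallest number of runs at which automation has paid for itself.

    Returns None when automation never pays off, that is, when an automated
    run (including upkeep) costs at least as much as a manual run.
    """
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_hours / saving_per_run)


def roi_percent(build_hours, manual_hours_per_run, automated_hours_per_run, runs):
    """ROI of automating over a number of runs, as a percentage of the automation cost."""
    cost = build_hours + automated_hours_per_run * runs
    benefit = manual_hours_per_run * runs  # manual effort avoided
    return (benefit - cost) / cost * 100
```

With a hypothetical 40 hours to build a test, 2 hours per manual run, and 0.5 hours per automated run, `break_even_runs(40, 2, 0.5)` returns 27, so a test executed only a handful of times a year may not be worth automating.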

- Designing a Test Automation Framework: Flaky tests, technical debt, and scaling bottlenecks are common in automation testing. They often stem from an inefficient test automation framework. A well-architected automation framework helps prevent such problems, making your QA strategy effective.

- Implement Modular Designs: The framework should be modular and reusable. To achieve this, you can implement a page object model (POM) and data-driven capabilities.

- Error Handling and Reporting: Implement mechanisms to handle unexpected errors and failures during test execution gracefully. By using ExtentReports, Allure, or other tools, you can generate comprehensive and easily understandable test reports.

- Integration with CI/CD Pipelines: CI/CD integration is a vital part of automation, allowing for automated execution on every code commit or build.

- Clear Structure and Naming: Use consistent naming conventions for test cases, functions, variables, and files for enhanced readability and collaboration.
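To illustrate the modular design mentioned above, here is a minimal sketch of a page object combined with data-driven test cases. It is an assumption-laden example, not a real framework: `FakeDriver` is a hypothetical stand-in for a browser driver so that the sketch runs without a browser, and `LoginPage`, the locator names, and the credentials are invented for illustration.

```python
class FakeDriver:
    """Hypothetical stand-in for a real browser driver."""

    def __init__(self, valid_users):
        self.valid_users = valid_users
        self.fields = {}
        self.current_page = "login"

    def type(self, field, text):
        self.fields[field] = text

    def click(self, button):
        if button == "submit":
            username = self.fields.get("username")
            password = self.fields.get("password")
            logged_in = (
                username in self.valid_users
                and self.valid_users[username] == password
            )
            self.current_page = "dashboard" if logged_in else "login"


class LoginPage:
    """Page object: the only place that knows the login page's locators."""

    USERNAME = "username"
    PASSWORD = "password"
    SUBMIT = "submit"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type(self.USERNAME, username)
        self.driver.type(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return self.driver.current_page


# Data-driven cases: (username, password, expected page after submitting).
LOGIN_CASES = [
    ("alice", "s3cret", "dashboard"),
    ("alice", "wrong", "login"),
    ("unknown", "s3cret", "login"),
]


def run_login_cases(cases):
    """Run every case against a fresh driver and report pass/fail per case."""
    results = []
    for username, password, expected in cases:
        driver = FakeDriver(valid_users={"alice": "s3cret"})
        results.append(LoginPage(driver).log_in(username, password) == expected)
    return results
```

If the login page changes, only `LoginPage` needs updating, and new scenarios are added as rows in `LOGIN_CASES` rather than as new test code.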

- Quality Criteria for Automation: Poor automation leads to false positives, wasted debugging time, and reduced trust. The following factors will help you evaluate the quality of your automation framework:

- Consistent results and reliability.

- Reusability of test components.

- Tests should be understandable by other QA team members.

- Tests should run quickly.

- Tests should run regardless of the machine or environment.

- How to Maintain and Expand Test Automation Coverage

Automation doesn’t require constant human intervention, but it still requires ongoing maintenance for long-term success. The following methods are effective for expanding test automation coverage.

- Regular Review and Refactoring: Periodically review existing automation scripts for efficiency, readability, and adherence to best practices. Furthermore, refactor outdated or overly complex scripts.

- Immediate Fix for Broken Tests: With frequent reviews, you’re bound to identify broken tests, if any. Treat failing automated tests with the same urgency as failing application code and fix them immediately to avoid red build blindness.

- Evaluate Reports: By evaluating test execution reports, you can look for patterns in failures, execution times, and flakiness, allowing you to improve both tests and the application.
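When evaluating reports for flakiness, one simple heuristic is to flag any test that both passed and failed against the same code revision. The sketch below assumes run history is available as `(test name, commit, passed)` tuples; that data shape, the function name `find_flaky_tests`, and the sample history are hypothetical.

```python
from collections import defaultdict


def find_flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    flaky = {name for (name, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical run history, for illustration only.
history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),     # same commit, different outcome
    ("test_checkout", "abc123", False),
    ("test_checkout", "def456", True),   # outcome changed with the code
    ("test_search", "abc123", True),
]

flaky_tests = find_flaky_tests(history)
```

Here only `test_login` is flagged: `test_checkout` changed its result between commits, which points to a code change rather than an unstable test.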

5. Collaboration between Teams

Identifying bugs and ensuring high-quality enterprise software is not just the QA team’s responsibility, but a shared task. This integrated approach fosters a culture of quality, leading to more robust and reliable software.

- Embedding QA Roles and Activities Across the Entire SDLC: To achieve enterprise-grade quality, QA activities must be woven into every phase of the SDLC.

- Requirements Gathering Phase: To embed QA early, ensure QA engineers are present in all initial product and requirements discussions. Also, formally review designs with QA to spot ambiguities, define clear acceptance criteria, and guarantee testability from day one.

- Development Phase: During development, build collaboration by having QA pair with developers on feature implementation and test creation.

- Testing Phase: Here, the QA team should design, execute, and automate test cases, and conduct exploratory and performance testing.

- Deployment Phase: For releases, QA’s tasks involve performing release validation and smoke testing while ensuring that the release meets entry/exit quality criteria.

- Shift-Left Practices: Shift-left means moving QA activities toward the left of the SDLC, that is, toward its beginning. The aim is to identify and prevent issues at their source, reducing the cost and effort required to fix them. Below are the top shift-left practices you can implement:

- Test-Driven Development (TDD): Encourage teams to use TDD, where they write test cases before writing code, especially for critical business logic components, to improve design and reduce bugs.

- Static Code Analysis: QA should integrate tools that analyze source code for potential defects, security vulnerabilities, and adherence to coding standards during development.

- Peer Code Reviews: In addition to self-review, the teams should build a culture of peer code reviews where developers scrutinize each other’s code.

- Automated Unit and Integration Testing: Make sure to write robust unit and component tests that are integrated into the build process.

- Shift-Right Strategies: While shift-left aims to prevent defects, shift-right focuses on understanding and enhancing software quality in production. It involves gaining insights from real user behavior and system performance in the live environment.

- Prioritize A/B Testing: Compare different versions of the app to validate changes in a controlled production environment before a full roll-out to determine friction areas.

- Production Monitoring: Implement robust Application Performance Monitoring, logging, and alerting systems to monitor the application's health, performance, and user experience in real-time.

- Toggle Features: Using feature toggles allows you to enable or disable any feature remotely in production. With this control, you can test in production without impacting all users and quickly roll back if issues arise.

- Gain User Feedback: Identify usability issues, common pain points, and underlying defects by collecting and analyzing user behavior data, feedback, and support tickets.
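Feature toggles are often paired with a gradual rollout so that only a share of users sees a change in production. The following is a minimal in-memory sketch of that idea, under assumptions: a real setup would typically read the rollout percentage from a remote configuration service, and the `FeatureToggles` class, the `bucket` helper, and the feature name are hypothetical.

```python
import hashlib


def bucket(feature, user_id):
    """Map a feature/user pair to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


class FeatureToggles:
    """In-memory toggle store with percentage-based rollout."""

    def __init__(self):
        self._rollout = {}

    def set_rollout(self, feature, percent):
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._rollout[feature] = percent

    def is_enabled(self, feature, user_id):
        # Unknown features are treated as disabled.
        return bucket(feature, user_id) < self._rollout.get(feature, 0)


toggles = FeatureToggles()
toggles.set_rollout("new_checkout", 10)  # roughly 10% of users
```

Because the bucket is derived from a hash, a given user gets the same answer on every request, and setting the rollout back to 0 acts as an immediate rollback for everyone.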

- How to Build and Enforce Quality Gates in CI/CD Pipelines?

A quality gate is a checkpoint within the CI/CD pipeline: code can move to the next stage only when it meets the defined criteria. Quality gates are crucial for enforcing quality standards and preventing defects from progressing downstream. Follow these steps to build quality gates in the CI/CD pipeline.

- Define Criteria: The initial step is to define clear criteria and what quality means at each stage of your pipeline. Make sure that you remain specific and add measurable criteria with clear pass/fail conditions.

- Automate Gate Enforcement: Embed automated quality checks into your CI/CD pipeline, using a tool such as Jenkins or GitLab to run them. Moreover, configure the pipeline to automatically fail the build and block progression if any quality gate criterion isn’t met.

- Implement Progressive Gates: As the code moves closer to production, implement increasingly stringent quality gates. This means that earlier stages may have foundational checks, but later stages will involve comprehensive and time-consuming validations.

- Frequent Reviews: Building gates is an ongoing task that requires continuous attention. Regularly monitor the effectiveness of your gates by analyzing their pass/fail rates and observing if critical issues continue to slip through.
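The steps above can be sketched as a small gate evaluator that a pipeline job could call. This is a hedged illustration rather than the API of any CI tool: the `Criterion` class, the `evaluate_gate` function, the metric names, and the thresholds are all hypothetical, and the two gates show the progressive idea of stricter checks closer to production.

```python
import operator
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str
    comparison: str   # ">=" or "<="
    threshold: float


_COMPARATORS = {">=": operator.ge, "<=": operator.le}


def evaluate_gate(criteria, measurements):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for criterion in criteria:
        value = measurements.get(criterion.metric)
        if value is None:
            failures.append(f"{criterion.metric}: no measurement reported")
        elif not _COMPARATORS[criterion.comparison](value, criterion.threshold):
            failures.append(
                f"{criterion.metric}: {value} violates "
                f"{criterion.comparison} {criterion.threshold}"
            )
    return failures


# Hypothetical progressive gates: the release gate is stricter than the build gate.
BUILD_GATE = [
    Criterion("unit_test_pass_rate", ">=", 100.0),
    Criterion("code_coverage", ">=", 70.0),
]
RELEASE_GATE = [
    Criterion("unit_test_pass_rate", ">=", 100.0),
    Criterion("code_coverage", ">=", 80.0),
    Criterion("critical_vulnerabilities", "<=", 0),
    Criterion("p95_latency_ms", "<=", 500),
]

measurements = {
    "unit_test_pass_rate": 100.0,
    "code_coverage": 74.5,
    "critical_vulnerabilities": 0,
}
build_failures = evaluate_gate(BUILD_GATE, measurements)
release_failures = evaluate_gate(RELEASE_GATE, measurements)
```

In a pipeline script, a non-empty failure list would typically translate into a non-zero exit code so that the CI tool marks the stage as failed and blocks progression. Treating a missing measurement as a failure is a deliberate choice here, so that a skipped check cannot silently pass a gate.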

6. Metrics and KPIs

When it comes to understanding the effectiveness of your QA strategy and driving continuous improvement, measuring the right things is your best course of action. Without clear and measurable QA goals, metrics, and KPIs, QA efforts can become stagnant, making it challenging to demonstrate value.

- Essential QA Metrics and KPIs to Measure Success

Measuring the right metrics is the first step towards improving the overall QA of your enterprise software. The following QA metrics represent a balanced set of indicators you should track:

- Defect density rate – measures the number of defects per unit of software size, for example per thousand lines of code.

- Defect escape rate – shows the percentage of defects that escape detection during testing and are found in production.

- Test coverage – measures how much of the application’s code is exercised by the executed test cases.

- Test automation rate – measures the ratio of test cases that are automated versus those that are executed manually.

- Defect resolution time – measures the time consumed to fix the issue after it’s reported.

- Test execution time – the time it takes to execute a complete set of tests.
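Several of these metrics reduce to simple ratios. The sketch below shows one common way to compute them, under assumptions: defect density is expressed per thousand lines of code, the automation rate is expressed as the automated share of all test cases, and the function names and sample figures are hypothetical.

```python
from datetime import datetime


def defect_density(defects, size_kloc):
    """Defects per thousand lines of code (KLOC)."""
    return defects / size_kloc


def defect_escape_rate(production_defects, pre_release_defects):
    """Percentage of all known defects that were found in production."""
    total = production_defects + pre_release_defects
    return production_defects * 100 / total if total else 0.0


def automation_rate(automated_cases, total_cases):
    """Percentage of test cases that are automated."""
    return automated_cases * 100 / total_cases


def mean_resolution_hours(reported_and_fixed):
    """Average hours between a defect being reported and being fixed."""
    durations = [
        (fixed - reported).total_seconds() / 3600
        for reported, fixed in reported_and_fixed
    ]
    return sum(durations) / len(durations)


# Hypothetical defect timestamps, for illustration only.
resolved = [
    (datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 1, 17, 0)),  # 8 hours
    (datetime(2024, 1, 2, 9, 0), datetime(2024, 1, 3, 9, 0)),   # 24 hours
]
average_hours = mean_resolution_hours(resolved)
```

Whatever definitions you choose, keep them fixed from one release to the next so that the resulting trends remain comparable.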

- Using Metrics for Continuous Feedback

Collecting metrics takes you halfway towards improving your QA process. The other half is about using them to drive continuous improvement. Metrics serve as a feedback loop, guiding adjustments to your QA strategy and development process. Use the collected metrics to:

- Identify Bottlenecks: Certain metrics, such as a high defect resolution time or low test automation coverage, can point to resource constraints in development. If a metric lags repeatedly, investigate and isolate the cause of the issue.

- Identify Trends and Patterns: Don't just look at individual data points. Analyze metrics over time to identify trends and patterns, such as specific modules or teams consistently showing higher defect rates.

- Set Incremental Goals: To improve overall QA, you need to set incremental goals. For example, aim to increase automation coverage from 50% to 75% in 3 months, or to reduce escaped defects by 30% over the next 2 releases.

7. Continuous Improvement and Optimization

Actions that prove effective today may not deliver the same results later. With that in mind, a comprehensive QA strategy must evolve with the changing dynamics of users and regulations. In enterprise software, continuous improvement ensures that QA remains effective, efficient, and aligned with business outcomes.

In this section, you’ll understand the ways to monitor and evolve your QA practices.

- Keeping Up with the Latest Trends: The foremost step towards continuous improvement is staying updated with the latest trends. You need to be open to constant learning and adapting to new technologies, methodologies, and industry shifts.

- Subscribe to Reputable Publications and Blogs: Follow prominent QA, DevOps, and software engineering blogs, newsletters, and journals, as many provide curated content on the latest trends, case studies, and practical advice. The top names here are Ministry of Testing, LambdaTest Blog, TestGuild Blog, and Google Testing Blog.

- Engage with Industry Communities: Actively participating in online forums, professional groups, and attending leading industry conferences or virtual summits offers invaluable insights into emerging tools, best practices, and innovative approaches from peers and thought leaders.

- Identify Areas of Improvement: No process is perfect, and there’s always room for improvement. The following practices help you find where your QA can take its next step forward.

- Root Cause Analysis: For every critical defect found, you should perform a thorough root cause analysis. This process helps identify not just the immediate cause of the bug, but also the underlying process, tooling, or communication gaps.

- Internal Feedback: Gaining insight and ideas from various teams, including QA, development, and product, can help you identify a better course of action for the future. Combine that with anonymous surveys to gather broader perspectives on pain points and suggestions.

- Benchmark Against Industry Standards: Periodically compare your QA practices against recognized industry best practices and maturity models to ensure alignment and continuous improvement. Doing so will provide an external perspective that can highlight areas where your processes lag.

- Integrating Customer and Stakeholder Feedback

The ultimate measure of quality is customer satisfaction. Integrating customer feedback, along with feedback from other stakeholders, directly into your QA process is vital for building truly user-centric software.

- Establish Clear Feedback Channels: Providing feedback should be seamless for everyone. With that in mind, it is essential to have clear feedback channels, such as customer support tickets, surveys, and user forums, as well as informal channels like direct communication with product teams.

- Manage Feedback Efficiently: Collect, store, and categorize feedback efficiently for further assessment using tools such as SurveyMonkey, Typeform, Qualtrics, Hotjar, UserVoice, Canny, and Userback. Tag feedback by feature, severity, and customer segment to facilitate structured analysis.

- Analyze Feedback: You need to analyze collected feedback to identify common pain points, usability issues, missing features, or performance concerns. These qualitative insights will help you make actionable improvements for the development and QA teams, prioritizing them based on customer impact and business value.

- Close the Loop and Communicate Changes: If the user feedback has proven to be helpful or has led to significant changes, make sure to communicate how their input influenced product changes or testing priorities to build trust.

- Staying Compliant with Regulatory Requirements: Adhering to regulations and standards is non-negotiable, especially if your organization falls under mandatory compliance frameworks. You need to take the following actions to keep enterprise software compliant:

- Identify Applicable Regulations: Identify the regulations that apply to your software. These may include HIPAA, GDPR, PCI DSS, ISO standards, or others, depending on your industry and the features you offer.

- Compliance by Design: It means building regulatory requirements directly into early design and development. Your QA should review these requirements for testability and ensure that corresponding test cases are designed to adhere to specific regulations.

- Implement Compliance-Specific Testing: You should have dedicated test cases and scenarios aimed at compliance. These could be security testing, data privacy checks, accessibility testing, and audit trail validation.

- Maintain Documentation: Maintaining documentation of QA activities is a significant requirement for most compliance regulations. You need to maintain documentation and trails of detailed test plans, test cases linked to specific regulatory requirements, test execution records, and defect logs.
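Linking test cases to regulatory requirements makes it possible to check traceability automatically. The following sketch assumes each test case lists the requirement IDs it verifies and that the latest execution results are available. The function name `coverage_gaps`, the requirement IDs, and the test case IDs are hypothetical placeholders, not identifiers from any regulation.

```python
def coverage_gaps(requirements, test_links, passed_tests):
    """Find requirements with no linked test, or with no passing linked test.

    requirements: iterable of requirement IDs
    test_links:   dict mapping test case ID -> set of requirement IDs it verifies
    passed_tests: set of test case IDs that passed in the latest execution
    """
    linked = set()
    verified = set()
    for test_id, requirement_ids in test_links.items():
        linked.update(requirement_ids)
        if test_id in passed_tests:
            verified.update(requirement_ids)
    required = set(requirements)
    return {
        "untested": sorted(required - linked),
        "not_passing": sorted((required & linked) - verified),
    }


# Hypothetical IDs, for illustration only.
requirements = ["REQ-CONSENT", "REQ-ERASURE", "REQ-MASK-CARD"]
test_links = {
    "TC-101": {"REQ-CONSENT"},
    "TC-102": {"REQ-MASK-CARD"},
}
passed_tests = {"TC-101"}

gaps_report = coverage_gaps(requirements, test_links, passed_tests)
```

A report like this, stored alongside test plans and execution records, can serve as part of the audit trail: it shows which requirements still lack a test and which have tests that are not currently passing.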

- QA Training and Change Management: A successful and continuously improving QA function relies heavily on a skilled team and the ability to manage organizational change effectively. The following actions will help the team develop its skills and manage change effectively.

- Implement structured training and certifications for QA covering new technologies, methodologies, domain knowledge, and soft skills.

- Create internal platforms and mentorship programs to disseminate best practices and learnings across all teams.

- Ensure teams receive necessary training, tools, documentation, and ongoing support during transitions.

- Regularly assess whether training improves skills and if changes are adopted using feedback mechanisms and performance metrics to guide adjustments.

8. Special QA Considerations

In addition to the core principles of QA, enterprise environments present unique challenges that demand specific attention. The following are some special considerations for enterprise software that must be handled with rigor for comprehensive QA execution.

- Managing Test Environments and Data: For enterprise software, it is necessary to have access to stable, realistic test environments and relevant test data. Without these, even the best test cases can’t guarantee meeting software quality targets.

- Strategize Test Environments: Enterprises need multiple environments that mirror production as closely as possible in terms of hardware, software, and network configurations. You can automate environment setup using Terraform, Ansible, or any other IaC tool of your preference to ensure consistency and quick provisioning for varying testing needs.

- Implement Test Data Management: Creating and maintaining realistic test data is a significant challenge. Develop strategies for synthetic data generation, data masking or anonymization, data subsetting, and data refresh and reset mechanisms.
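As an illustration of data masking, the sketch below replaces sensitive fields with keyed, deterministic tokens so that the same input always maps to the same masked value, which keeps relationships between records intact. It is a minimal example under assumptions, not a complete anonymization scheme: the record layout, the `MASKING_KEY` value, and the function names are hypothetical, and the key would normally come from a secrets store.

```python
import hashlib
import hmac


def pseudonymize(value, key):
    """Replace a value with a stable token derived from a secret key."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]


def mask_record(record, sensitive_fields, key):
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), key) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Hypothetical record and key, for illustration only.
MASKING_KEY = b"replace-with-a-secret-from-your-vault"
production_row = {"customer_id": 1042, "email": "jane@example.com", "order_total": 129.5}
masked_row = mask_record(production_row, {"email"}, MASKING_KEY)
```

Non-sensitive fields such as `order_total` pass through unchanged, so the masked data stays realistic enough for functional and performance tests.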

- Security and Compliance Testing: For software, especially software that handles sensitive information, security and compliance testing are foundational tasks that should never be overlooked. The following tests safeguard against breaches, ensure data integrity, and meet the necessary compliance requirements.

- Shift Security Testing Left: Rather than waiting until the end to test software security, move security testing into the initial development stages. Integrating security during the planning and development stages gives you a clear understanding of your security practices and the steps you need to take to enhance them.

- Penetration Testing: In this testing type, security professionals attempt to penetrate the security layers using simulated real-world attacks to identify any underlying security gaps.

- Data Privacy and Compliance Testing: Most compliance regulations exist to protect user data. With that in mind, you need to focus on tests specifically designed to verify adherence to data privacy regulations and industry standards. Areas to focus on include data masking, consent mechanisms, access controls, audit trails, and data retention policies.

- Tool Selection and Integration: Selecting suitable QA tools and ensuring they integrate seamlessly into your ecosystem is vital for an efficient and scalable testing operation. With a broad array of options to choose from, you need to take a strategic approach to selection.

- Commercial vs. Open-Source: One of the biggest dilemmas is choosing between commercial and open-source tools. Each type has its own strengths. Commercial tools offer comprehensive features, dedicated support, a polished UI, and a mostly finished product. In contrast, open-source tools offer better flexibility, cost savings, and community-driven development.

- Technology Stack and Team Skills: Pick tools that are compatible with your application's programming languages, frameworks, and deployment environment. More importantly, choose tools that align with your team's existing skill sets or for which training resources are readily available.

- Integration Capabilities: Integration will enhance the overall functionalities of various tools and frameworks. Make sure to evaluate how well a tool integrates with your current CI/CD pipelines, project management systems, and other QA tools.

Conclusion

The goal of an effective QA strategy is not just to eradicate bugs, but also to protect your business and build user trust. Its core elements emphasize that quality is not one person’s job but a shared responsibility, deeply integrated into every step of the SDLC.

From understanding QA objectives to evaluating metrics and QA KPIs for software, every step is equally significant for implementing a comprehensive QA strategy for enterprise software. Exploring each step ensures that your enterprise software remains aligned with your business needs while providing the utmost value to the users.

Ultimately, investing in a comprehensive enterprise QA strategy yields a significant ROI. It leads to fewer costly post-release defects, faster time-to-market driven by quicker, more confident releases, improved customer satisfaction and retention, and a stronger reputation and financial stability for your organization. This makes QA a strategic investment rather than a risk-based one.

Every organization has different goals and resources. If a strategy worked for one organization, it’s not a guarantee that it will provide the same results to another enterprise. Creating the right approach requires experience and expertise in enterprise QA.

With over 12 years of industry experience, ThinkSys will provide you with the expertise you need for your enterprise QA. From understanding your requirements to building a tailored implementation plan, our experts will ensure your enterprise software meets its goals.

