Mobile App Testing Checklist 2026

Introduction

With today’s high-performance mobile chipsets, smartphones now support complex tasks once reserved for desktops. The mobile application market size is expected to exceed $600 billion by 2030, making it one of the most impactful sectors in the technology industry. To succeed in this industry, your application should have top-notch and useful features with utmost stability and bug-free performance. This level of quality is achievable only through rigorous mobile application testing—and it starts with a well-defined mobile app testing checklist.

Smartphone applications are primarily of two types: Native and Cross-Platform. While native applications give the best experience with custom features specific to each operating system, cross-platform applications use a single codebase with minimal code modifications to run on Android and iOS devices.

Owing to cost savings and a unified codebase, many organizations prefer cross-platform development. However, testing these apps introduces unique complexities—such as plugin inconsistencies and gesture behavior differences—that must be addressed for effective outcomes.

Android’s open-source ecosystem supports thousands of models from various manufacturers with unique configurations and their custom OS skins. On the other hand, iOS offers a more controlled environment, but frequent OS updates, strict UI/UX standards, and closed system architecture can restrict cross-platform mobile testing. This fragmentation leads to challenges, including:

- Test coverage should include a wide array of devices, OS versions, screen sizes, and hardware capabilities.

- With platform-specific APIs, permissions, and background process handling, some features may behave differently.

- Testing platform-specific and native features with different UI/UX is highly challenging.

- Apple’s App Store has more stringent policies than the Google Play Store. Features that pass the Play Store’s policies may fail the App Store’s policy check. During mobile usability testing, this can cause delays and require store-specific customization to ensure a similar experience on both platforms.

Fewer than 0.5% of mobile apps achieve long-term success. This staggering statistic is not meant to alarm, but to emphasize that applications released without value or with unresolved issues are unlikely to succeed.

Testilo revealed that 94% of users uninstall mobile applications within 30 days due to poor performance, lack of engaging content, and underlying bugs; most of these signal one thing: inadequate testing. When mobile application testing is not up to standard or rushed, it can lead to negative reviews on app stores, damage to reputation, security and compliance risks, and a loss of revenue. It may even result in removal from app stores.

Conducting mobile application testing thoroughly and on time not only allows you to release a stable and user-friendly app, but also puts you in the league of 0.5% of apps that succeed.

Challenges in Android and iOS Testing

Though the apps may seem similar to the user on both Android and iOS devices, the backend is where the difference lies. Testing applications for both these platforms is challenging, but their challenges differ.

| Android | iOS |
| --- | --- |
| Android runs on thousands of device models from dozens of manufacturers, each with its own screen sizes, hardware capabilities, and custom UI layers, making testing highly complex. | Strict guidelines from the App Store regarding UI/UX, performance, privacy, and content necessitate multiple reviews, which can delay releases. |
| Multiple Android versions are currently active, with Android 8 Oreo (released in 2017) being the oldest. Performing backward compatibility testing requires additional QA effort. | iOS is known for providing timely updates, including for older devices. Previous-gen devices therefore stay up to date, but each update can break apps that worked on earlier iOS versions. |
| Different Android versions and OEMs may handle permissions differently. Some manufacturers pre-block or auto-reset permissions, causing features to fail silently without proper error handling. | Apple restricts access to certain APIs and background processes, especially for security and privacy. Bluetooth access, background location tracking, and file sharing features are subject to tight sandboxing, requiring complex testing approaches. |
| Android devices allow users to install applications from third-party stores or OEM app stores. However, this adds testing paths for application updates and security. | Testing iOS applications is possible only within Apple’s ecosystem: testers need macOS, Xcode, and real devices. Though simulation is possible, it offers limited support for hardware features. |

Your Mobile App Testing Checklist for High-Quality Releases

1. Common Testing Requirements Across Platforms

Companies prefer to release their apps for both Android and iOS devices. Although Android and iOS differ in several ways, several testing requirements remain consistent across both platforms. These requirements focus on the functionality, quality, usability, and performance of mobile applications, regardless of the underlying OS. With that in mind, here is a breakdown of these testing requirements:

- Core Functionality Validation: Most applications aim to offer similar core functions across platforms. Core functionality validation is all about making sure your app's essential features actually work. For instance, a photo editing application will provide tone adjustment, crop, color modification, temperature adjustment, and other features on both Android and iOS versions.

In other words, core features are high-impact features that, if broken, would immediately degrade the user experience or prevent users from using the system altogether. Testing these features involves verifying that these flows execute reliably across different user inputs, device states, and network conditions. Key areas to focus on here:

- Testing buttons, forms, navigation, and data input/output.

- Ensuring that user actions consistently produce expected results.

- Checking that permission-based features (like camera or GPS) work properly.

- Ensuring the app behaves correctly with different data inputs and edge cases.

Note: Use tools like Appium, Espresso (Android), or XCTest (iOS) to automate core functionality tests.

- UI/UX Consistency Principles

A smooth, intuitive, and simple user interface makes navigating your mobile app easier and more convenient. Users can switch from Android to iOS at any time, and if the app offers a different UI/UX on each platform, they may feel confused and even look for alternatives. The best option is therefore to offer a similar experience on both, which is why UI/UX consistency testing is common to both platforms.

- Platform Guidelines: Android has Material Design and iOS has the Human Interface Guidelines, which document how UI elements should look and behave. You must consider button placement, navigation patterns, and the use of standard controls, alerts, and modals that behave natively without sacrificing consistency.

- Layout Adaptability: Devices on both platforms vary in screen size, so your app should adapt according to the screen size, resolution, and orientation of the device it’s being used on.

- Gestures and Animations: Smooth animations have become a significant part of mobile apps as they signify a well-optimized and better-performing application. Transitions, scrolls, and swipes should respond naturally to the user. Use Android Studio Profiler and iOS Instruments to test animation responsiveness.

- Cross-Device Performance Standards

A mobile app that appears laggy or choppy may underwhelm users, leading them to believe it's not optimized for their device. Users expect apps to perform smoothly, and cross-device performance standards ensure this consistency by evaluating the app on varying hardware, OS versions, and network conditions. Here’s how these standards come to life:

- Startup and Touch Interactions: In most cases, the app should launch in under two seconds, and in under one second on high-end devices. Furthermore, UI interactions such as taps and swipes must respond within 100-200 milliseconds.

- Frames Per Second: With screens becoming smoother and refresh rates increasing to 144Hz, your app should be capable of providing at least 60 frames per second for smoother animations.

- Resource Usage: Your application should be feature-packed enough to remain relevant for the user while consuming as little memory as possible to ensure smooth performance on every device. The average RAM consumption of your app should remain under 100MB to prevent crashes.

- Battery Usage: Users prefer applications that minimize battery drain. Newer devices may not have an issue with such apps as they not only have bigger batteries, but fresher batteries as well. People with older devices already face a quick battery drain due to battery degradation over time, and apps that drain the battery quickly are rarely on their download list. A key metric is keeping the app’s energy impact score below 20 (on a 0–100 scale) for iOS, as measured by Xcode’s Energy Impact tool, and ensuring CPU usage remains under 15% during active use on Android, as tracked by Battery Historian.

Note: Use tools like Firebase Performance Monitoring and Android Vitals to evaluate these metrics.
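
The budgets listed above can be turned into an automated gate. The following is a minimal sketch, assuming the measurements have already been collected by your profiling tools; the `PerfSample` type, its field names, and the `check_budgets` helper are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PerfSample:
    """Measurements for one device; the field names are hypothetical."""
    device: str
    cold_start_ms: float
    touch_latency_ms: float
    avg_fps: float
    avg_ram_mb: float


# Upper bounds taken from the checklist above.
MAX_BUDGETS = {
    "cold_start_ms": 2000,    # launch within two seconds
    "touch_latency_ms": 200,  # taps and swipes respond within 100-200 ms
    "avg_ram_mb": 100,        # average RAM consumption within 100 MB
}
MIN_FPS = 60  # at least 60 frames per second


def check_budgets(sample: PerfSample) -> list[str]:
    """Return human-readable budget violations (empty list if none)."""
    violations = []
    for field_name, limit in MAX_BUDGETS.items():
        value = getattr(sample, field_name)
        if value > limit:
            violations.append(
                f"{sample.device}: {field_name}={value} exceeds budget {limit}"
            )
    if sample.avg_fps < MIN_FPS:
        violations.append(
            f"{sample.device}: avg_fps={sample.avg_fps} is below {MIN_FPS}"
        )
    return violations
```

Running a check like this per device in the test matrix makes regressions visible as a list of named violations rather than a single pass/fail flag.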

- Security Baseline Requirements

Insufficient testing may leave security gaps, allowing attackers to find their way inside your application. Malware attacks, data leakage, unauthorized access, and code tampering are the top attacks affecting mobile applications on both Android and iOS devices. Mobile application security validation involves meeting specific standards and practices that are implemented across platforms.

- Testing Secure Data Storage and Transmission: Your app will be storing and transmitting user data as well as internal confidential data. The foremost step in preventing breaches in data transmission or storage is implementing end-to-end encryption. Your mobile app must use TLS 1.3 for network communications and AES-256 to encrypt stored data. Testing this involves inspecting traffic with Wireshark or a similar network analysis tool to detect unencrypted transmissions. Use MobSF or SonarQube for static code analysis.

- Authentication and Session Management Check: Whether it’s the backend or the user profiles, only the authorized person should access the account. With that in mind, OAuth 2.0, biometric login, or other strong authentication mechanisms should be integrated within applications. Your testing strategy should involve confirming that authentication tokens are securely stored in the respective key storage (Android Keystore and iOS Keychain).

- Vulnerability Scanning: Attackers search for vulnerabilities within the system to gain data access. Regular vulnerability scanning helps you prevent the common attacks listed in the OWASP Mobile Top 10. Snyk, Dependabot, or any other automated scanner, especially when integrated into CI/CD pipelines, can help you identify and flag issues before they become serious problems, ensuring consistent security across platforms.

- Platform-Specific Permissions Handling: Every functional app requires specific permissions before accessing sensitive features. Your job is to ensure that your application asks only for the permissions it needs. Furthermore, verify that the app behaves correctly when permissions are denied and that the reason is communicated clearly to the user.
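
One way to check the TLS 1.3 requirement from the test side is to connect to the backend with a client that refuses anything older. The sketch below uses only Python’s standard `ssl` and `socket` modules; the helper names are hypothetical, and the host you pass in is whatever API endpoint your app talks to. It verifies the server side of the connection only, so it complements rather than replaces traffic inspection on the device.

```python
import socket
import ssl
from typing import Optional


def build_tls13_only_context() -> ssl.SSLContext:
    """Client context that refuses any protocol older than TLS 1.3."""
    # The default context also verifies certificates and hostnames.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


def negotiated_tls_version(
    host: str, port: int = 443, timeout: float = 5.0
) -> Optional[str]:
    """Connect to host and return the negotiated protocol, e.g. 'TLSv1.3'.

    Raises ssl.SSLError if the server cannot negotiate TLS 1.3.
    """
    context = build_tls13_only_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.version()
```

A security test could call `negotiated_tls_version` for each backend host the app uses and fail the build if the handshake raises an error.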

- API Integration Testing Approach

Rather than adding a feature from scratch, modern mobile apps integrate APIs, enabling seamless functionalities and data exchange across iOS and Android. With the right testing standards, QA teams can verify that APIs function flawlessly on both platforms.

- Functional Validation: Every integrated API should function as anticipated, and functional validation testing identifies that. APIs should deliver accurate responses per defined specifications. Your app should send correct requests and handle the responses returned by the API.

- Performance Testing: The integrated API should not only accomplish the desired task but also do so with utmost performance. Identifying how well APIs perform includes executing tests, such as load and stress testing using JMeter or other testing tools. Your APIs should respond under 500ms for every request, even under heavy load.

- Error Handling and Fallback: APIs are not crash-proof, and they may fail under specific circumstances. In case your API fails, your app should display clear and user-friendly error messages. Moreover, it should retry appropriately to complete the task without crashing the application, thereby preventing confusion among users.

Note: Ensure proper handling of HTTP status codes (e.g., 200, 400, 500) with relevant user messaging and logging for diagnostics.
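
The error handling, retry, and status-code points above can be sketched as follows. This is a minimal illustration, not a prescribed implementation: the status groupings, the message wording, and the `call_with_retry` helper are assumptions, and `send` stands in for whatever function performs the real request and returns its HTTP status code.

```python
import time
from typing import Callable

# User-facing messages; the wording is illustrative only.
USER_MESSAGES = {
    "success": "",
    "client_error": "Something was wrong with that request. Please check your input.",
    "retryable": "The service is temporarily unavailable. Please try again later.",
    "unexpected": "We received an unexpected response. Please try again.",
}


def classify_status(status: int) -> str:
    """Group an HTTP status code into a handling category."""
    if 200 <= status < 300:
        return "success"
    if status in (408, 429) or 500 <= status < 600:
        return "retryable"      # timeouts, rate limits, server errors
    if 400 <= status < 500:
        return "client_error"   # retrying the same request will not help
    return "unexpected"


def call_with_retry(
    send: Callable[[], int],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple[int, str, int]:
    """Call send() until it succeeds, fails permanently, or attempts run out.

    Returns (last_status, user_message, attempts_made). Retries use
    exponential backoff: base_delay, 2 * base_delay, 4 * base_delay, ...
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        status = send()
        kind = classify_status(status)
        if kind != "retryable" or attempt == max_attempts:
            return status, USER_MESSAGES[kind], attempt
        sleep(base_delay * 2 ** (attempt - 1))
```

Injecting `sleep` as a parameter lets tests exercise the retry path without actually waiting.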

2. Mobile Testing Automation

As mobile applications grow in complexity and user expectations increase, performing tests manually becomes tedious and delays releases. Automated testing becomes essential not just for quality, but for faster releases too. However, automating mobile tests is not straightforward. As Android and iOS differ significantly in terms of automation tools, test environments, execution, and integration pipelines, you must work across platforms and understand platform-specific requirements to build a scalable test suite.

- Platform-Specific Automation Tools: While cross-platform frameworks aim to unify development, the underlying operating systems still demand specialized approaches for effective automation. Understanding and strategically utilizing platform-native or platform-optimized automation tools is crucial for achieving deep, reliable, and performant test coverage that truly mirrors the user experience on each OS.

- Android Automation Testing Tools

- Espresso: Espresso is Google's official UI testing framework for Android applications, designed for fast, reliable, and white-box style testing within a single application. It's part of the AndroidX Test library and encourages developers and QA to write tests in Kotlin or Java, the same languages used for Android app development. It offers features including Automated Synchronization, Concise and Readable API, Hermetic Testing, and seamless integration with Android Studio.

- UI Automator: Provided by Google, this Android testing framework focuses on black-box functional UI testing. Designed for scenarios where you need to interact with elements outside your app under test, this can help you test system settings, notifications, or other third-party apps. Cross-app integration, UI Automator Viewer Tool, System UI interaction, and device interaction APIs are some of the features you get with this tool.

- iOS Automation Testing Tools

- XCUITest: Apple’s native UI testing framework, XCUITest, can be integrated with Xcode, allowing you to write automated UI tests for iOS apps using Swift or Objective-C. This tight integration allows XCUITest to understand the iOS UI hierarchy and behavior directly. XCUITest operates directly at the UI layer of the iOS operating system, providing direct access to iOS native UI elements, their properties, and their interactions.

- Appium Implementation Strategies: Appium is among the most widely used open-source tools for cross-platform mobile automation. Using this tool, you can write a single test script that can run on both Android and iOS, using JavaScript, Java, Python, or Ruby. Though it may sound uncomplicated, getting the most out of this tool requires dedicated strategies. With that in mind, the following are the top Appium testing strategies that will help you perform cross-platform testing efficiently.

- Prioritize Effective Element Identification: UI elements on Android and iOS often have different native attributes or IDs. Relying on fragile locators, such as XPath, leads to brittle tests that break with minor UI changes. Instead, embed unique and stable content-description or resource-id attributes (Android) and accessibilityIdentifier attributes (iOS) into every testable UI element. As these IDs are generally stable across UI changes and platform versions, Appium can locate them reliably on both operating systems.

- Implement the Page Object Model (POM) for Maintainability: POM is a design pattern where each screen or significant component of your application is represented by a separate class, which captures the UI elements and the methods (actions) that can be performed on that page. Here, you abstract the platform-specific locators within a single Page Object. When the page UI is modified, only the code in the page object needs updating, and the tests remain unchanged. The test logic and the locators are thus kept separate. Doing so makes test cases more readable, enhances maintainability, reduces duplication, and offers better collaboration within the team.

- Use Real Devices: Device fragmentation is a significant issue in testing, particularly on Android. Emulation solves this problem to some extent, as you can configure almost any device to test your app. However, emulation is not effective for testing the hardware capabilities of the app, making testing on real devices necessary. Even though it is time-consuming, you need to write test cases and run them on real devices as much as possible to discover underlying hardware issues. For that, you can run your Appium tests on a cloud-based real-device service.

- Use Logging: Once you run a test script, Appium provides a detailed log of what’s happening. Initially, the sheer amount of information may feel daunting, but learning to read and use it leads to better testing. Appium logs display both desired and default capabilities, which you can use to understand the behavior of the Appium server. In addition, you receive stack traces of errors, which you can use to diagnose and fix issues. Timestamps are disabled by default, but you can enable them by passing the --log-timestamp flag when starting the Appium server.
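
The Page Object Model strategy described above can be sketched as follows. This is a minimal illustration under stated assumptions: the login screen, its element names, and the identifier values are hypothetical, and `FakeDriver` is a stand-in so the sketch runs without a device. The two strategy strings match Appium’s "accessibility id" and "id" locator strategies, and a real Appium driver exposes a compatible `find_element(by, value)` method.

```python
# Locator strategy names as used by Appium.
ACCESSIBILITY_ID = "accessibility id"
RESOURCE_ID = "id"


class LoginPage:
    """Page object for a hypothetical login screen."""

    # One entry per platform keeps locator differences out of the tests.
    _LOCATORS = {
        "android": {
            "username": (RESOURCE_ID, "com.example.app:id/username"),
            "password": (RESOURCE_ID, "com.example.app:id/password"),
            "submit": (RESOURCE_ID, "com.example.app:id/login_button"),
        },
        "ios": {
            "username": (ACCESSIBILITY_ID, "username_field"),
            "password": (ACCESSIBILITY_ID, "password_field"),
            "submit": (ACCESSIBILITY_ID, "login_button"),
        },
    }

    def __init__(self, driver, platform: str):
        self._driver = driver
        self._locators = self._LOCATORS[platform.lower()]

    def _find(self, name: str):
        by, value = self._locators[name]
        return self._driver.find_element(by, value)

    def log_in(self, username: str, password: str) -> None:
        """The only method tests call; it is identical on both platforms."""
        self._find("username").send_keys(username)
        self._find("password").send_keys(password)
        self._find("submit").click()


class FakeElement:
    """Records interactions instead of touching a real UI."""

    def __init__(self, log: list, locator: tuple):
        self._log = log
        self._locator = locator

    def send_keys(self, text: str) -> None:
        self._log.append(("type", self._locator, text))

    def click(self) -> None:
        self._log.append(("click", self._locator))


class FakeDriver:
    """Stand-in for an Appium driver so the sketch runs without a device."""

    def __init__(self):
        self.log = []

    def find_element(self, by: str, value: str) -> FakeElement:
        return FakeElement(self.log, (by, value))
```

A test then reads `LoginPage(driver, platform).log_in("ana", "secret")` on either platform, and a renamed identifier is fixed in one place.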

- Cloud Testing for Enhanced Device Coverage

Real device testing is a crucial component of mobile application testing. You may have access to the latest and most popular devices, but having every device currently in use is nearly impossible. In that case, cloud testing is a strong choice: you use cloud-testing tools to run tests on real devices hosted remotely. No matter what device you want to test on, you can access it and perform the desired tests, enhancing your test coverage.

- Testable Application Builds: Before you can begin testing, you need testable application builds for each platform that the cloud service will install on the devices: APK files for Android and IPA files for iOS. Be clear about the type of build you’re testing, whether it’s a debug build or a release build. Also, ensure any build-specific configurations are set correctly. For automated UI testing, your app's UI elements should ideally have unique and stable accessibility identifiers, as these are crucial for reliable element identification by automation frameworks.

- Defined Device and OS Coverage Strategy: Rather than testing on every device, you need a clear understanding of your target audience’s device usage, including the OS and version, device model, memory, screen size, and other relevant details. You can use Google Play Console, Firebase, and Apple Metrics to identify the most popular devices for your app and determine the test devices accordingly. In addition, your strategy should include the recommended tests for real devices.

- Test Scripts: You need to have pre-existing automated test scripts developed using a compatible framework and language. These automated tests must be stable and reliable, avoiding flakiness that can waste cloud resources and obscure bugs. In case you plan to leverage the cloud's parallelization capabilities, your test scripts must be designed to run independently without interfering with each other or relying on shared state across concurrent runs.

- Varying Tests: The tests you perform on real devices using cloud testing should cover major areas of the application. Some of the parts that you test include installation and launch, UI consistency, poor network handling, touch and gesture behavior, push notifications, and navigation.
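
The device coverage strategy above can be made concrete with a small selection routine. The sketch below is one possible approach, assuming you have exported each device’s share of your user base from your analytics tool; the `pick_devices` helper and the greedy rule it applies are illustrative choices, not a prescribed method.

```python
def pick_devices(usage_share: dict[str, float], target_coverage: float) -> list[str]:
    """Pick the most-used devices until their combined share meets the target.

    usage_share maps a device name to its share of users, and
    target_coverage is expressed in the same unit (for example, percent).
    """
    chosen = []
    covered = 0.0
    by_popularity = sorted(usage_share.items(), key=lambda item: item[1], reverse=True)
    for device, share in by_popularity:
        if covered >= target_coverage:
            break
        chosen.append(device)
        covered += share
    return chosen
```

In practice you would likely add a few deliberately chosen outliers to the result, such as the oldest supported OS version or the smallest screen, since popularity alone does not surface edge cases.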

- CI/CD Integration: CI/CD integration for mobile testing refers to the practice of embedding automated mobile builds and tests directly into a Continuous Integration and Continuous Delivery pipeline, ensuring that every code change is validated immediately and automatically. The reasons for integrating CI/CD into your mobile testing include early defect detection, significantly faster feedback loops for developers to facilitate quicker remediation, consistent quality across all builds through standardized automated checks, increased efficiency by automating repetitive tasks, and ultimately, accelerated release cycles for mobile applications. Integrating a CI/CD pipeline for mobile testing requires understanding and implementing several key components and processes in a logical sequence to achieve continuous quality and efficient delivery.

- Version Control System: The first step in integrating a CI/CD pipeline is to establish a version control system (VCS), such as Git. Your mobile app’s source code must be managed in a well-structured repository, which acts as the central hub for all code changes. Its events, such as commits and pull requests, serve as triggers that initiate the automated CI/CD pipeline.

- CI/CD Platform: Next, choose a CI/CD platform. Popular options include Jenkins, GitLab CI/CD, CircleCI, Bitrise, and Azure DevOps. Once selected, configure the platform to connect to your VCS and define the build, test, and deployment stages within a pipeline configuration file.

- Automated Mobile Application Build Process: The pipeline must include automated steps to compile and package your mobile app into a deployable APK and IPA. Here, the CI environment should be precisely set up with the necessary mobile SDKs, build tools, and secure management of signing certificates and provisioning profiles to ensure successful and consistent build generation.

- Integration of Automated Testing: You need to integrate various automated test types so that CI/CD pipelines can execute them. You can run fast unit tests and integration tests early in the pipeline for rapid feedback on code correctness. For UI and compatibility testing, the pipeline can be integrated with cloud-based mobile testing services such as BrowserStack or Sauce Labs, enabling the automatic execution of UI automation scripts across a range of devices.

- Reporting and Feedback: To monitor the performance and make the necessary changes, you need to have the desired reports. Your CI/CD pipeline should be designed to efficiently collect, aggregate, and present test results. For that, it should parse standard test reports and leverage Allure Report or any other advanced reporting tool to generate an interactive and detailed dashboard that provides logs, screenshots, and video recordings from test runs. Additionally, the pipeline should monitor build and test performance metrics, such as execution times and resource utilization, to identify and address bottlenecks.

- Test Reporting Differences by Platform: When conducting automated testing, the generated test reports and diagnostic data exhibit notable differences between the two platforms. These distinctions originate primarily from the platform’s unique native testing frameworks and their approaches to capturing and organizing test results. Understanding these variations is essential for accurate test analysis and effective debugging.

- Android: Espresso and UI Automator are the two native frameworks of Android, and they provide test results in XML files. These files adhere to the JUnit XML format, a well-known and easily parsable standard for test reporting. The XML output contains test case details, pass/fail status, error messages, and execution duration. While console logs offer real-time output during execution, the structured XML serves as the definitive record. Diagnostic information, such as screenshots, is captured by explicit calls within the test code, generating separate image files that are referenced or linked within the XML report.

- iOS: Automated tests on iOS, leveraging the native XCUITest framework, generate a comprehensive proprietary .xcresult bundle upon execution. It is a self-contained package that embeds detailed test logs, step-by-step execution traces, screenshots, crash reports, and metrics. While .xcresult provides in-depth diagnostic insights when viewed within Xcode, its proprietary nature means that for broader compatibility with non-Apple test analysis systems, the data often needs to be programmatically extracted and converted into more universal formats.
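
Because JUnit-style XML is easy to parse, a pipeline can summarize Android results with a few lines of code. The following sketch uses only Python’s standard library and assumes the common JUnit XML layout, in which `<testcase>` elements carry `classname` and `name` attributes and contain `<failure>`, `<error>`, or `<skipped>` children. The `summarize_junit` helper is hypothetical, and real reports may include additional elements that this sketch ignores.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict:
    """Count passed, failed, and skipped test cases in a JUnit XML report."""
    root = ET.fromstring(xml_text)
    summary = {"passed": 0, "failed": 0, "skipped": 0, "failures": []}
    # iter() finds test cases whether the root is <testsuites> or <testsuite>.
    for case in root.iter("testcase"):
        problem = case.find("failure")
        if problem is None:
            problem = case.find("error")
        if problem is not None:
            summary["failed"] += 1
            summary["failures"].append(
                (case.get("classname"), case.get("name"), problem.get("message", ""))
            )
        elif case.find("skipped") is not None:
            summary["skipped"] += 1
        else:
            summary["passed"] += 1
    return summary
```

An `.xcresult` bundle would first need to be converted to a comparable format before the same summary could cover iOS runs.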

3. Special Testing Considerations

Without a doubt, testing the core functionality and performance of the application is crucial. However, you cannot ignore the other aspects that contribute to the app's success on the online store. Features such as offline capability and low battery consumption enhance the overall experience, making it better and more user-friendly. Testing these special features requires a special testing approach, which is discussed below:

- Offline Functionality Testing: Even though the world is now connected to the internet more than ever, users may not always remain connected to the internet. It could be due to poor network conditions or limited internet plans. Nonetheless, the app should offer something to the user, even when the internet is unavailable. Offline functionality testing, also known as disconnected or low-connectivity testing, is a specialized quality assurance process that evaluates a mobile application's ability to maintain its core functionality and deliver a consistent user experience when internet access is completely unavailable, intermittent, or operating under very low bandwidth conditions.

- Environment Simulation: Mobile app offline functionality testing requires simulating various network environments, including network unavailability, intermittent connections, and varying bandwidths or latencies. One of the best ways to do this is by using the built-in network condition emulation features available in Android Emulators and iOS Simulators within Xcode, which allow you to set specific network profiles, such as 2G, 3G, 4G, and 5G speeds. As Android and iOS handle network loss differently, especially in the background, you need to capture platform-specific nuances to ensure optimal performance.

Android apps can continue to use WorkManager for offline job queuing, while iOS apps rely on BGTaskScheduler, which is stricter and more battery-conscious. The tests should confirm the app does not crash, stall, or display misleading errors during these state transitions.

- Local Data Capture: The moment the device loses connection, the app must continue capturing user input and store it safely on the device. Here, your goal is to test local storage mechanisms, which range from simple key-value stores, such as SharedPreferences on Android or UserDefaults on iOS, to more complex local databases like SQLite, Realm, and Core Data.

To perform this, you need to interact with the app while it is disconnected, make data changes, and then inspect the device's local storage to ensure data integrity, persistence across restarts, and adherence to security protocols.

- Testing Sync Logic: When data is stored locally due to a lack of internet connection, it should be sent to the server once connectivity resumes. Through simulation, you need to create scenarios such as initial data synchronization, bidirectional changes where both device and server data are altered, and, crucially, direct conflicts where the same data is modified independently. In each scenario, confirm that the data is sent automatically, that retry mechanisms are used, and that operation sequence and data integrity are respected. Furthermore, if a record is edited both offline and online, the app must decide how to resolve it: overwrite with the latest version, merge fields, or prompt the user.
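
The three conflict-resolution options mentioned above (overwrite with the latest version, merge fields, or prompt the user) can be sketched as follows. This is an illustration rather than a reference implementation: the `Record` type and both helpers are hypothetical, and the merge assumes that fields are never deleted and that `None` is not a meaningful field value.

```python
from dataclasses import dataclass


@dataclass
class Record:
    fields: dict
    updated_at: float  # time of the last edit, in seconds since the epoch


def last_write_wins(local: Record, remote: Record) -> Record:
    """Overwrite with the latest version; the server copy wins ties."""
    return local if local.updated_at > remote.updated_at else remote


def merge_fields(base: dict, local: dict, remote: dict) -> tuple[dict, list[str]]:
    """Three-way merge against the last synced state (base).

    Returns (merged_fields, conflicts). A field changed on only one side
    takes that side's value. A field changed differently on both sides is
    listed in conflicts and keeps its base value until the user decides.
    """
    merged = {}
    conflicts = []
    for key in sorted(set(base) | set(local) | set(remote)):
        b, l, r = base.get(key), local.get(key), remote.get(key)
        if l == r:
            merged[key] = l          # both sides agree
        elif l == b:
            merged[key] = r          # only the server changed it
        elif r == b:
            merged[key] = l          # only the device changed it
        else:
            merged[key] = b          # true conflict: prompt the user
            conflicts.append(key)
    return merged, conflicts
```

Sync tests can feed such a function the same scenarios listed above and assert both the merged result and the list of fields that require a user prompt.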

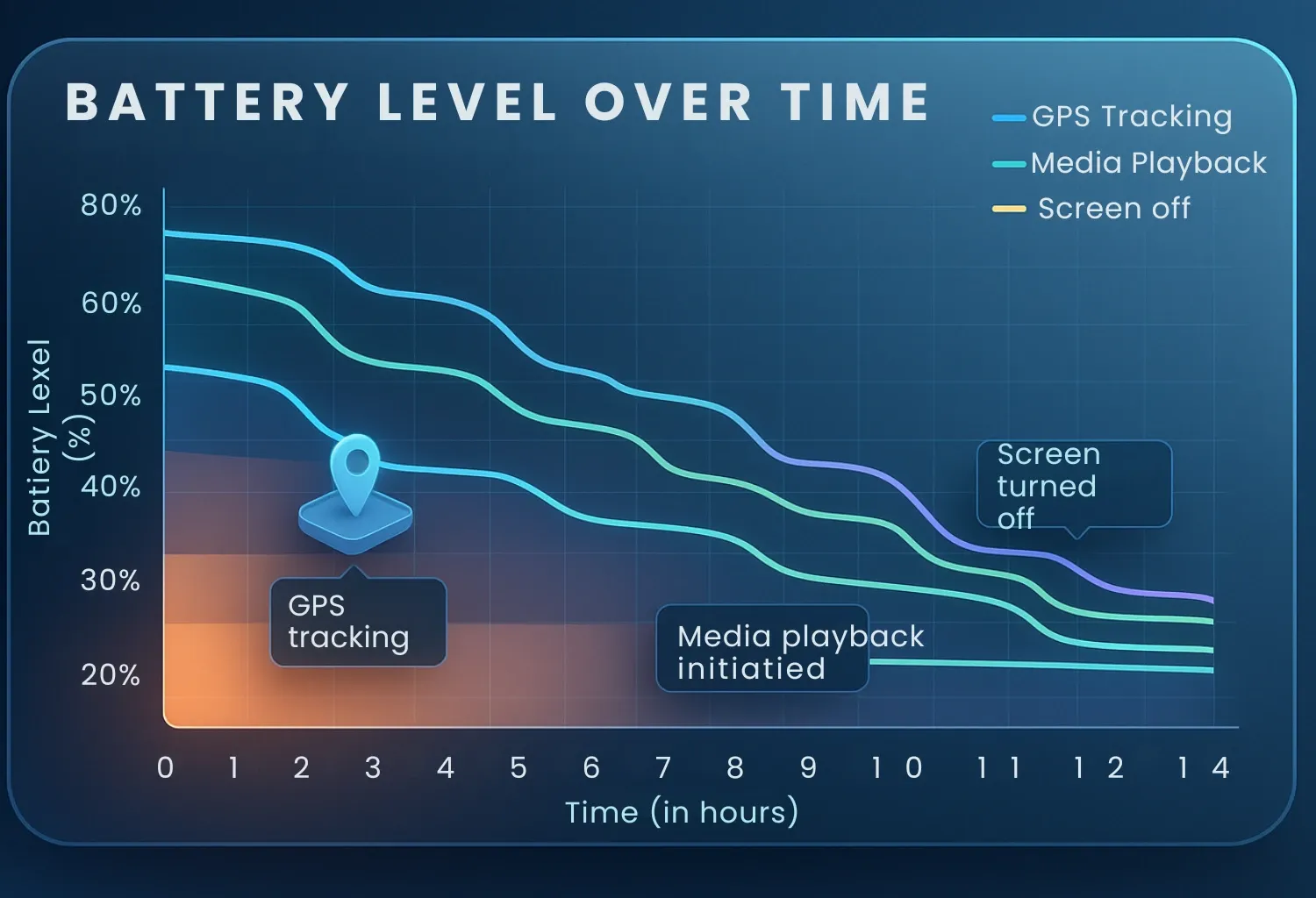

- Battery Consumption Testing: Users want apps to consume as little battery as possible. Not only that, but the App Store and Google Play Store prefer apps that consume minimal battery, and apps that drain battery may face rejection from the store listing. Battery consumption is a critical quality benchmark, especially for apps involving location tracking, background sync, media playback, or frequent API polling.

- Identify Power-Consuming Elements: Not every component consumes battery equally in an app. Before you begin battery consumption testing, identify the power-hungry components. These may include background location services, push notification polling, continuous network requests, media playback, or alarms, among others. Android and iOS offer the necessary tools to identify these elements.

On Android, you can use Battery Historian, adb shell dumpsys batterystats, and Android Studio Profiler to track app-specific energy draw, whereas on iOS you need to use Xcode’s Energy Log, Instruments (Time Profiler, Activity Monitor), and the Console for background task diagnostics. Once identified, run the app under normal, idle, and stress conditions to determine which components remain active and how long they hold system resources, such as GPS, CPU, or wake locks.

- Background Activity: Modern mobile operating systems aggressively throttle background apps to conserve battery. Android leverages Doze Mode and App Standby Buckets, while iOS employs Background App Refresh and Task Expiration APIs. If an app improperly handles background scheduling, it can get penalized or force-stopped. Performing this test involves using simulation tools to exercise the app in low power mode, during background fetch under poor connectivity, and across sleep-wake cycles to confirm that scheduled background tasks are infrequent, optimized, and necessary.

- Usage Testing Across Device Conditions: An app consuming 3% battery in an hour on one device may consume 4% or 1.5% on other devices. Battery behavior can vary significantly across OS versions, hardware profiles, battery health, and app states. Here, you need to perform long-duration tests (1–4 hours) with battery usage logging enabled to validate the battery impact under realistic usage scenarios, such as scrolling, watching media, using maps, or messaging.
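
To compare long-duration runs across devices, it helps to reduce each battery log to a single drain rate. The sketch below assumes you have already extracted (elapsed seconds, battery percent) pairs from your logging tool; the `drain_per_hour` helper and the sample format are hypothetical.

```python
def drain_per_hour(samples: list[tuple[float, float]]) -> float:
    """Average battery drain in percentage points per hour.

    samples holds (elapsed_seconds, battery_percent) pairs in
    chronological order, for example one reading every few minutes.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (start_time, start_level), (end_time, end_level) = samples[0], samples[-1]
    elapsed_hours = (end_time - start_time) / 3600
    if elapsed_hours <= 0:
        raise ValueError("samples must span a positive duration")
    return (start_level - end_level) / elapsed_hours
```

For instance, a log that falls from 100% to 94% over two hours yields a rate of 3 percentage points per hour, which can then be compared against the budget you set for each device class.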

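The long-duration checks described above can be reduced to a simple calculation once battery levels have been logged. The following is a minimal sketch, assuming you already collect periodic battery readings from the device; the `BatterySample` type, the function names, and the sample values are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class BatterySample:
    minutes: float    # minutes since the test run started
    level_pct: float  # battery level reported by the device, 0-100


def drain_per_hour(samples):
    """Average battery drain in percentage points per hour over the run."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    ordered = sorted(samples, key=lambda s: s.minutes)
    elapsed_hours = (ordered[-1].minutes - ordered[0].minutes) / 60
    if elapsed_hours <= 0:
        raise ValueError("samples must span a positive duration")
    return (ordered[0].level_pct - ordered[-1].level_pct) / elapsed_hours


def exceeds_budget(samples, budget_pct_per_hour):
    """True when the measured drain is above the budget agreed for this scenario."""
    return drain_per_hour(samples) > budget_pct_per_hour


# Hypothetical two-hour run on a single device
run = [BatterySample(0, 100), BatterySample(60, 97), BatterySample(120, 94)]
rate = drain_per_hour(run)
```

Running the same calculation per device and per scenario (scrolling, media, maps, messaging) makes it easier to compare results across hardware and OS versions against one agreed budget.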

- App Update Testing Procedures: Updating an application takes users just a few taps, but it’s a whole different story for the development organization. New updates can introduce bugs and glitches, and may even make the application unusable, leading to uninstallation and negative reviews. Testing the app rigorously before releasing a new update will ensure that your app does not follow this path.

- Backward Compatibility and Data Migration: Whenever users update the app, they expect their data to be retained so they can continue where they left off. With that in mind, your app must remain compatible with existing user data and preferences from older versions. To verify this, install a range of older app versions on test devices, populate them with diverse user data, and initiate the update to the latest version. The expected result is that all user data and preferences are migrated accurately, without loss or corruption, and all app functionalities work correctly.

- Update Path Validation: Some users update the app the moment a new version is available, while others may avoid it till the version they’re using becomes obsolete. In short, users can update from any previous version. Due to this, you need to test different update scenarios, including minor updates and major upgrades, while skipping versions. The best way to achieve this is through simulation, as it allows you to upgrade from various versions on multiple devices.

- Interrupted Update Recovery: During the update process, an interruption such as a poor network connection, app crash, or power loss can prevent the installation from completing. To ensure that your app remains stable after an interrupted update, deliberately introduce disruptions by toggling device settings or force-closing the app during installation. The app should either resume the update, roll back to the previous version without any data loss, or provide an accurate error message to the user.

- Regression Testing: Even if data migrates successfully, new updates can introduce regressions in existing features or degrade performance. Here, you need to perform regression tests on the updated app, covering all core functionalities and critical user flows. Automation frameworks and profiling tools can help in executing tests quickly and monitoring performance. After test completion, your app should function as expected, and its performance metrics should stay within the set benchmarks.
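The update path and data migration checks above can be sketched in a few lines. This is an illustrative example only: the version list, the migration functions, and the field names (`username`, `display_name`, `theme`) are hypothetical, and a real app would run its own migration code on a device or emulator.

```python
from itertools import combinations

# Hypothetical released versions, oldest first
VERSIONS = ["1.0", "1.1", "2.0"]


def update_paths(versions):
    """Every (from, to) pair where 'to' is newer, including skipped versions."""
    return list(combinations(versions, 2))


# Hypothetical migrations: each one upgrades stored data by a single step
def migrate_1_0_to_1_1(data):
    upgraded = dict(data)
    upgraded["theme"] = upgraded.get("theme", "light")  # new preference with a default
    return upgraded


def migrate_1_1_to_2_0(data):
    upgraded = dict(data)
    upgraded["display_name"] = upgraded.pop("username")  # renamed field
    return upgraded


# Maps each version to the migration that moves data to the next version
MIGRATIONS = {"1.0": migrate_1_0_to_1_1, "1.1": migrate_1_1_to_2_0}


def upgrade(data, from_version, to_version, versions=VERSIONS):
    """Apply each intermediate migration in order, even when versions are skipped."""
    start, end = versions.index(from_version), versions.index(to_version)
    for version in versions[start:end]:
        data = MIGRATIONS[version](data)
    return data


paths = update_paths(VERSIONS)
skipped = upgrade({"username": "ana"}, "1.0", "2.0")
```

Enumerating the pairs first gives a checklist of update scenarios to cover, and comparing the migrated data against the expected result for each pair catches loss or corruption on skipped-version upgrades.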

- Data Synchronization Testing: The mobile’s local state and your backend data should be in sync to provide the right information and service to the user. An app that fails to sync this data accurately can result in data inconsistencies and loss. Data synchronization testing helps you analyze your app’s ability to consistently and accurately reconcile data changes between the device and the remote server.

- Sync Accuracy: The primary objective in synchronization testing is to verify that data is synced completely and accurately between the client and server. Here, you need to simulate initial sync, offline modifications, server-initiated changes, and bidirectional sync scenarios by manipulating network states and directly altering data on both the device and backend. The outcome should be high data consistency, performance, and correctness across various data volumes and synchronization frequencies, confirming the app handles all expected data flow paths gracefully.

- Conflict Resolution: There may be circumstances where the same data is updated on both an offline device and the server before the next sync, resulting in a conflict. In such cases, your app should apply the chosen conflict resolution strategy consistently and update the data accurately. Last-write-wins (LWW), server-authoritative, and user-mediated resolution are the most common strategies. To test this, change the same record on both the offline device and the server, reconnect, and verify that the app resolves the conflict according to the chosen strategy.
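As an illustration of two of the strategies named above, the sketch below resolves a conflict between a device copy and a server copy of the same record. The `Record` type, its fields, and the tie-breaking rule are assumptions made for this example, not a prescribed design; last-write-wins in particular depends on timestamps that are comparable across device and server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    value: str
    updated_at: float  # time of the last write, e.g. seconds since epoch
    source: str        # "device" or "server"


def resolve_lww(device, server):
    """Last-write-wins: keep the newer record; on a tie, prefer the server copy."""
    if device.updated_at > server.updated_at:
        return device
    return server


def resolve_server_authoritative(device, server):
    """Server-authoritative: the server copy always wins."""
    return server


# Hypothetical conflict: the device edit happened after the server edit
device_copy = Record("Note edited offline", updated_at=200.0, source="device")
server_copy = Record("Note edited on web", updated_at=150.0, source="server")

lww_winner = resolve_lww(device_copy, server_copy)
server_winner = resolve_server_authoritative(device_copy, server_copy)
```

A test for conflict resolution then asserts that the value visible in the app after reconnection matches the winner that the chosen strategy predicts.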

Conclusion

There are over 5 million apps available collectively on the Google Play Store and the Apple App Store. However, only a few make their mark on the users. The factors that differentiate an average app from an excellent one are its functionalities, security, stability, and UI/UX. All that can only be achieved when the app undergoes rigorous testing and quality checks. Mobile application testing demands a thorough understanding of platform-specific behaviors, testing automation strategies, and critical edge-case handling. From validating cross-platform consistency to managing updates, sync, and offline modes, each testing layer ensures stability and trust across iOS and Android ecosystems.

To streamline this process, you should build a mobile testing matrix that defines test cases across various device types, OS versions, network conditions, and user states. Creating an effective mobile testing matrix involves:

- Identify Testing Dimensions: The first step is to define the dimensions along which you’re going to test. These include platforms, operating system versions, devices, network conditions, user states, app conditions, and more.

- Analyze App Features and Requirements: Make sure to review your app’s features and technical requirements, identifying functionalities that are device-dependent, OS-version-dependent, or exhibit different UI behaviors across various screen sizes and resolutions.

- Categorize Test Cases: You will perform various tests on your app to ensure its stability. For easier understanding, it is best to categorize the tests according to their purpose. For instance, non-functional tests may include testing battery, performance, and network behavior.

- Prioritize and Refine the Matrix: The final step involves prioritizing the test cases and environments within the matrix based on risk, user impact, and business value. High-priority features and critical user flows should be tested on the most impactful device and OS combinations and under the most challenging network conditions. It is recommended to regularly review and update the matrix based on new OS releases, device launches, changes in user demographics, and evolving app features to keep the matrix relevant and efficient.
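The steps above can be sketched as a small script that expands the dimensions into combinations and ranks them by risk. The dimension values and risk weights below are hypothetical placeholders; in practice they would come from your own analytics, device usage data, and business priorities.

```python
from itertools import product

# Hypothetical dimension values
DIMENSIONS = {
    "platform": ["Android 14", "iOS 17"],
    "network": ["Wi-Fi", "4G", "Offline"],
    "battery": [">50%", "<20%"],
}

# Hypothetical risk weights: higher means more likely to expose problems
RISK = {"Offline": 3, "4G": 2, "Wi-Fi": 1, "<20%": 2, ">50%": 1}


def build_matrix(dimensions):
    """Expand the dimensions into every combination, one dict per row."""
    keys = list(dimensions)
    return [dict(zip(keys, combo)) for combo in product(*dimensions.values())]


def risk_score(row):
    """Sum the risk weights of the values in a row; unknown values count as 0."""
    return sum(RISK.get(value, 0) for value in row.values())


def prioritize(matrix, limit):
    """Return the highest-risk rows first, capped at 'limit' rows."""
    return sorted(matrix, key=risk_score, reverse=True)[:limit]


matrix = build_matrix(DIMENSIONS)
top = prioritize(matrix, 4)
```

The full product grows quickly as dimensions are added, which is why the prioritization step matters: it keeps the set of combinations that are actually executed small while still covering the riskiest conditions.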

Sample Test Matrix

| Feature/Test Case | OS Version (Android) | Device (Android) | OS Version (iOS) | Device (iOS) | Network | Battery Level | Expected Behavior |
| --- | --- | --- | --- | --- | --- | --- | --- |
| User Login (Valid Credentials) | Android 14 | Samsung S24 Ultra | iOS 17 | iPhone 15 Pro | Wi-Fi | >50% | Successful login, and dashboard loads within 3 seconds. |
| User Login (Valid Credentials) | Android 12 | Redmi Note 11 | iOS 16 | iPhone SE (3rd) | 4G | >20% | Successful login, and dashboard loads within 5 seconds. |
| Offline Article Viewing | Android 14 | Pixel 8 Pro | iOS 17 | iPad Air 5 | No network | >10% | Pre-downloaded articles display fully, with no error messages. |
| Image Upload (High Res) | Android 13 | OnePlus 10 Pro | iOS 17 | iPhone 14 | 3G | >30% | Image upload completes within 10 seconds with an accurate progress indicator. A message is displayed upon completion. |
| Background Sync (New Data) | Android 12 | Samsung A53 | iOS 16 | iPhone 13 | Intermittent | >40% | Sync completes successfully upon reconnection, all new data is visible, and there is no data duplication or loss. |
| Push Notification Reception | Android 14 | Samsung S24 Ultra | iOS 17 | iPhone 15 Pro | 5G | >10% | Notifications are received instantly, and the app opens to the correct screen. |
| App Update (1.0 to 1.1) | Android 13 | Pixel 7 | iOS 16 | iPhone 12 | Wi-Fi | >60% | Update succeeds while preserving all user data. Existing features remain functional. |
| Video Playback (Full HD) | Android 14 | Google Pixel 8 | iOS 17 | iPhone 15 | 4G | >70% | Smooth playback without buffering issues. Battery drain remains minimal. |
| GPS Tracking (Background) | Android 14 | Samsung S23 | iOS 17 | iPhone 14 Pro | 4G | >50% | Accurate location updates every 5 seconds, minimal battery drain, no foreground notification unless required. |
| Payment Gateway Integration | Android 14 | Any Flagship | iOS 17 | Any Flagship | Wi-Fi | >30% | Transaction completes successfully with secure payment flow. A confirmation is displayed within 2 seconds. |

iOS and Android testing overlap in some areas and diverge in others. Optimizing mobile app quality in a multi-platform environment requires a strategic balance between testing efforts common to both platforms and those unique to each, in order to maximize test coverage, ensure consistency, and optimize resource allocation. The core goal is to identify commonalities that can be tested efficiently across platforms, while dedicating focused effort to platform-specific behaviors and user experiences, ultimately delivering a high-quality app on both operating systems.

Striking the balance between platform-specific and shared testing methods requires sorting features into the right category. Shared functionalities mostly include backend API integrations, core business logic, data processing, user authentication flows, and non-platform-specific calculations. On the other hand, platform-specific elements encompass native UI/UX interactions, device hardware integration, push notification handling, and adherence to platform-specific design guidelines (Material Design for Android, Human Interface Guidelines for iOS). The key is to avoid duplicating efforts where the logic is identical, while deliberately filling the gaps where OS-level behavior differs.

The last decade has witnessed drastic changes in how smartphone apps perform. Features ranging from split-screen multitasking to biometrics have brought new testing guidelines with them. If you’re not keeping up with the latest and upcoming platform updates and requirements, there is a high possibility that your app will face rejection.

The primary method for staying current is consistently monitoring official developer resources. For iOS, it's Apple Developer Program news, and for Android, it's Android Developers News, Android Developers Blog, and Google Play Console announcements. These channels provide crucial information on upcoming OS features, API changes, deprecations, and policy updates, often months in advance.

In addition, you can become part of platforms like Stack Overflow, Reddit communities, Ministry of Testing's Club forums, and platform-specific groups on sites like Meetup.com to discuss new features, common pitfalls, and solutions to emerging issues.

Apart from that, you can attend major mobile development conferences such as Apple WWDC, Google I/O, Droidcon, and Appdevcon to stay informed about the latest OS versions, deep-dive sessions on new APIs, performance optimizations, and changes in app store guidelines.

In essence, mastering mobile app quality is an ongoing journey that requires continuous adaptation and a comprehensive testing strategy. By understanding the mobile app testing requirements, your team can navigate the complexities of the mobile ecosystem with confidence, ensuring your apps consistently deliver exceptional experiences to users worldwide.

Undeniably, mobile app QA can be intimidating, especially when considering the rapid pace of OS updates. From rigorous functional and performance testing to offline capabilities, ThinkSys, with its mobile app testing service, will help you overcome the complexities of mobile QA to deliver comprehensive testing solutions tailored to your app's unique needs.
