Is Test Automation Always the Best Choice?

In today’s fast-paced software world, test automation feels like a given. Everyone’s doing it, or at least everyone thinks they should be. But just because you can automate something doesn’t always mean you should. Like most tools, automation shines brightest when it’s used deliberately, not blindly.

So, in 2025, when does test automation make sense? When is manual testing still worth the time? And how do you find that balance without overengineering your QA strategy?

This guide is for software QA leaders, engineering managers, and development teams deciding between automated and manual testing in 2025. It answers questions like:

- When should I automate my tests?

- Are there cases where manual testing is better?

- What’s the ideal balance for a modern QA strategy?

Key Takeaways: When Test Automation Makes Sense

There are a few places where automation just makes sense. No debate:

- Regression testing: Running the same checks after every code change? Automate that.

- Smoke testing: Quick sanity tests to make sure your app hasn’t completely imploded.

- API testing: Fast, consistent, script-friendly.

- Cross-browser/device testing: Honestly, who wants to do this manually anymore?

Thanks to tools like Playwright, Cypress, Testim, and Autify, writing and maintaining these tests is smoother than ever. They hook right into CI/CD pipelines, handle things like auto-waiting and parallel runs, and many support visual testing. It’s a big step up from the hand-coded waits and sleeps that made so many older Selenium suites flaky.
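To make "auto-waiting" concrete: instead of sleeping for a fixed time, the tool keeps re-checking a condition until it holds or a timeout expires. The sketch below illustrates only that basic idea in plain Python. `wait_until` and its parameters are hypothetical names, not the API of Playwright, Cypress, or any other tool, and real frameworks add their own checks (visible, enabled, stable) on top.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 5.0,
               interval: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Hypothetical helper that illustrates the auto-waiting idea;
    it is not any framework's real API.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True   # condition met: the test can move on
        if time.monotonic() >= deadline:
            return False  # give up instead of hanging forever
        time.sleep(interval)  # short pause before re-checking


# Usage: simulate a button that only becomes "visible" on the third check.
checks = {"count": 0}


def button_visible() -> bool:
    checks["count"] += 1
    return checks["count"] >= 3


print(wait_until(button_visible, timeout=1.0))  # True
```

A fixed `sleep(2)` would either waste time when the page is fast or fail when it is slow. Polling with a deadline avoids both problems, which is a large part of why auto-waiting cuts down on flaky tests.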

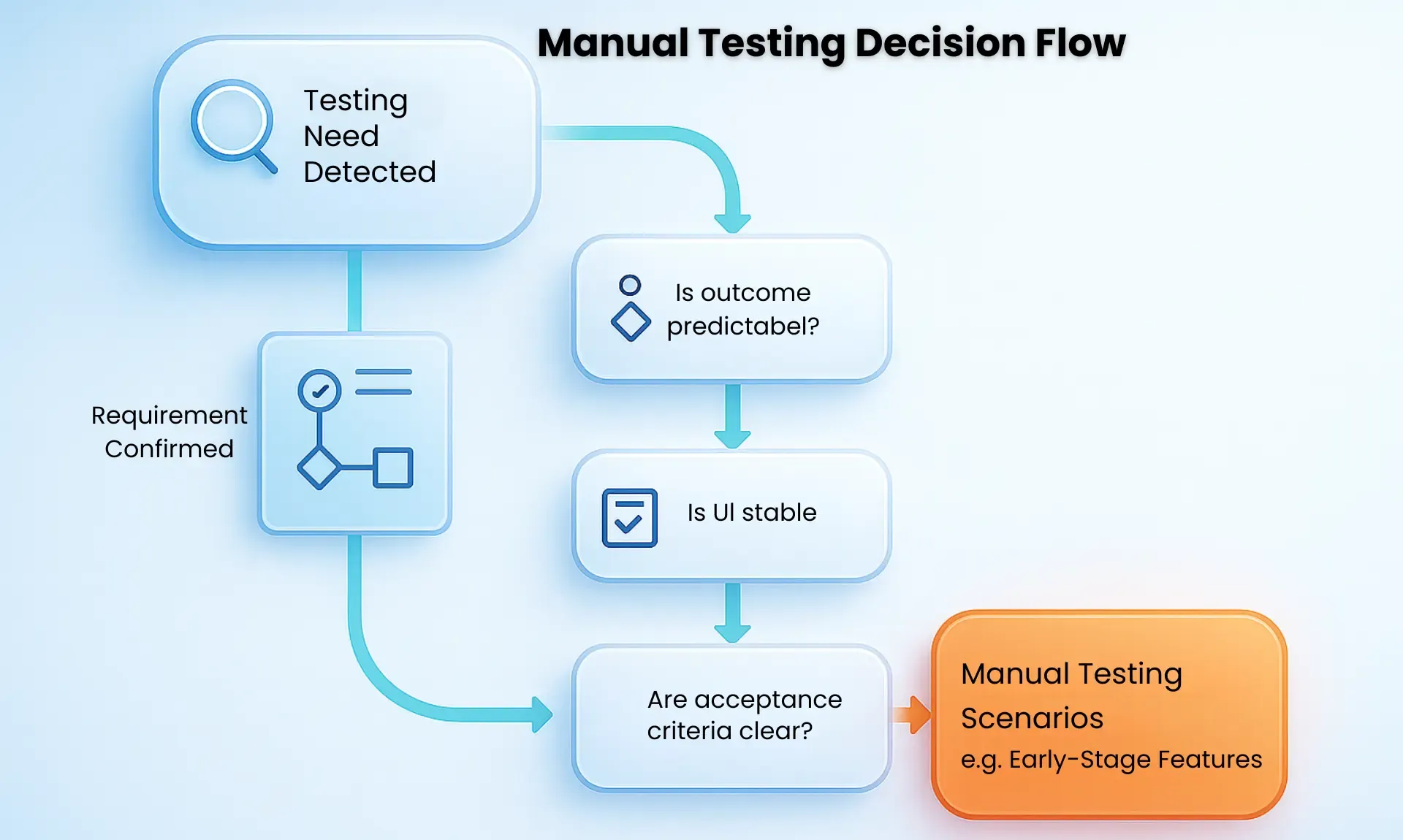

When Manual Testing Is Still the Best Choice

Despite all the tooling progress, there are still situations where manual testing quietly saves the day:

- Exploratory testing: When you don’t know what you’re looking for until you find it.

- UI/UX validation: Automation can check pixels, sure, but can it tell you if something feels wrong?

- Early-stage features: If the UI changes daily, it’s faster to click through than rewrite scripts.

- Complex logic flows: Especially ones with unpredictable outcomes or fuzzy acceptance criteria.

Manual testers bring context. They think critically, adapt quickly, and catch things automation just isn’t wired to understand yet.

The Hidden Costs of Automating Everything

It’s easy to fall into the "automate everything" trap. But reality isn’t that clean. Automation carries its own maintenance load:

- Tooling and infrastructure costs (cloud services, device farms).

- Time spent updating scripts as the product evolves.

- Training new folks on frameworks and best practices.

- Debugging false positives (or worse, false negatives).

And poorly scoped automation? That can eat up more time than it saves. Worse, it gives a false sense of coverage.
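A rough break-even estimate is one way to check whether automating a given set of checks will pay for itself. Automation costs an up-front build effort plus some upkeep per run, while manual testing costs a roughly fixed effort per run. The sketch below assumes that simple linear model. The function name and all the hour figures are hypothetical, and real maintenance costs are rarely constant, so treat the result as a rough guide, not a forecast.

```python
import math
from typing import Optional


def break_even_runs(build_hours: float,
                    maintenance_hours_per_run: float,
                    manual_hours_per_run: float) -> Optional[int]:
    """Smallest number of runs at which automation costs no more than manual testing.

    Assumes a simple linear cost model (hypothetical):
      automated total = build_hours + maintenance_hours_per_run * runs
      manual total    = manual_hours_per_run * runs
    Returns None when each automated run costs as much as, or more than,
    a manual run, because automation then never catches up.
    """
    saving_per_run = manual_hours_per_run - maintenance_hours_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_hours / saving_per_run)


# Hypothetical numbers: 16 h to build a regression suite, 15 min (0.25 h)
# of upkeep per run, versus 1 h to run the same checks by hand.
print(break_even_runs(16, 0.25, 1.0))  # 22
```

With these made-up figures, a suite that runs on every commit could reach 22 runs quickly, while a check that runs a few times a year might never get there. That is why run frequency matters as much as the check itself.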

How Leading QA Teams Find the Right Balance (2025 Best Practices)

In 2025, the savviest QA teams aren’t choosing sides. They’re combining forces:

| Task type | Best approach |
|---|---|
| Repetitive checks | Automate |
| One-time or unstable flows | Manual |
| User experience testing | Manual |
| API regression tests | Automate |
| Critical business flows | Both |

AI is adding another layer, too. Modern AI-powered tools can auto-generate test cases from user behavior, heal broken locators when the UI changes, and even flag risky areas based on commit history. It sounds like magic, but it still needs human oversight.
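Commercial "self-healing" features are proprietary and often model-driven, so the sketch below only shows the basic idea using a simple rule-based fallback. Each element is described by several candidate locators that are tried in priority order, and the test reports when it had to fall back so a person can review and update the primary locator. The page model, attribute names, and `find_with_fallback` helper are all hypothetical.

```python
from typing import Optional

# Hypothetical page model: each element is a dict of its attributes.
Element = dict[str, str]
Locator = tuple[str, str]  # (attribute name, expected value)


def find_with_fallback(page: list[Element],
                       locators: list[Locator]
                       ) -> tuple[Optional[Element], Optional[Locator]]:
    """Try each locator in priority order.

    Returns the first element matched by the highest-priority locator that
    matches anything, plus that locator, or (None, None) if none match.
    """
    for attr, value in locators:
        for element in page:
            if element.get(attr) == value:
                return element, (attr, value)
    return None, None


# The button's id changed in a UI refactor, but its test id and text did not.
page = [
    {"tag": "button", "id": "btn-submit-v2", "data-testid": "checkout", "text": "Pay now"},
    {"tag": "a", "id": "help-link", "text": "Help"},
]
locators = [("id", "btn-submit"), ("data-testid", "checkout"), ("text", "Pay now")]

element, used = find_with_fallback(page, locators)
if used != locators[0]:
    # Surface the "heal" for human review instead of silently passing.
    print(f"Primary locator failed; matched via {used}")
```

The final `print` is where the human oversight comes in. A healed locator that nobody reviews can just as easily end up pointing at the wrong element.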

Conclusion

Test automation is incredibly useful. But it's not magic. And it's definitely not free.

Over-automating can slow teams down just as much as under-automating. The goal isn’t to write the most scripts. It’s to catch the most bugs, as early as possible, with the least effort.

So if you're building a test strategy this year, don’t ask, "Should we automate this?" Ask, "Does it make sense to automate this right now?"

Need help building a future-ready QA strategy?

Contact our team for expert guidance on balancing automation and manual testing for 2025 and beyond.
