How to Improve Test Coverage without Slowing Down Development?

Before software is released to users, it undergoes testing to ensure uninterrupted service. But how do you know whether the software has been tested sufficiently?

Test coverage helps answer that by showing how much of the code has been tested. Poor test coverage can lead to frustrated users, causing monetary and reputational loss to an organization.

With that in mind, this article will help you improve your test coverage without compromising time-to-market.

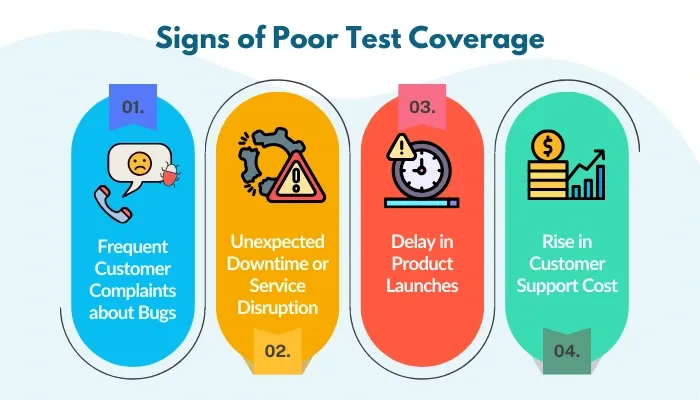

Signs of Poor Test Coverage

Inadequate test coverage leaves a visible mark on an organization. The following signs suggest that your test coverage is falling short:

- Frequent Customer Complaints about Bugs: If your customers constantly report issues with your product, it is a clear indicator that these issues bypass your testing. More bugs mean more complaints, eroding customer trust and leading to lost sales.

- Unexpected Downtime or Service Disruption: When your systems go down unexpectedly, it's a sign that critical functionalities weren't adequately tested. One prime example is the 2024 CrowdStrike incident, which crashed over 8 million systems. CrowdStrike pushed a faulty update to its Falcon Sensor security software, leading to issues with Microsoft Windows software. Experts believe that the primary cause of this faulty update was a lack of test coverage.

- Delay in Product Launches: If your product releases are frequently pushed back due to last-minute bug fixes, it suggests that testing isn't happening early enough or isn't comprehensive enough. Cyberpunk 2077, one of CD Projekt Red's most highly anticipated games, was delayed multiple times and eventually released with numerous issues. CD Projekt Red acknowledged that it did not test the game enough on older generation consoles, specifically PlayStation 4 and Xbox One, and issued a public apology for the unstable game after release.

- Rise in Customer Support Cost: When your customer support team spends most of their time dealing with bug-related issues, it is a sign that your testing process lacks adequate coverage and efficiency. When bugs slip through, support teams spend more time fixing problems than assisting customers, driving up costs and harming satisfaction.

Why is Testing Considered Slow?

Many believe that slow testing is the primary cause of project delays. Before moving further, it is crucial to understand why testing is considered slow:

- According to a recent report, over two-thirds of software testing organizations use a 75:25 manual-to-automated testing ratio, and 9% of companies perform only manual testing. Because most testing is done manually, it is time-consuming and gets blamed for slowing down development.

- Several organizations perform testing at the end of the SDLC. As a result, bugs are discovered late, which delays the release and makes testing look like an obstacle to quick delivery.

- Sometimes, companies try to test everything rather than focusing on the areas that genuinely need testing. This lack of prioritization results in redundant test cases.

All of this contributes to making the process slower and reduces the test coverage that could be achieved with the right approach.

Improving Test Coverage without Compromising Speed

Given these challenges, here are some key ways to improve test coverage without slowing development down.

1. Automate Testing: 30% of companies have reported that manual testing is the most time-consuming activity in the testing phase. By implementing automated testing, you can run more test cases in the same time, leading to better test coverage. Automated tests also run faster and more consistently, and they detect bugs early. Popular automation testing tools include Selenium, Appium, Test Studio, LambdaTest, and Cypress.

You can expect an initial budget increase of 20% to 60% or more, depending on factors including:

- Tool costs

- Infrastructure setup

- Training and skill development

- Initial test script development effort

While automation testing requires an initial investment, 60% of organizations see a substantial return on investment. The result? Not only do they achieve better application quality, but they also enhance testing efficiency, ultimately making the upfront costs worthwhile.

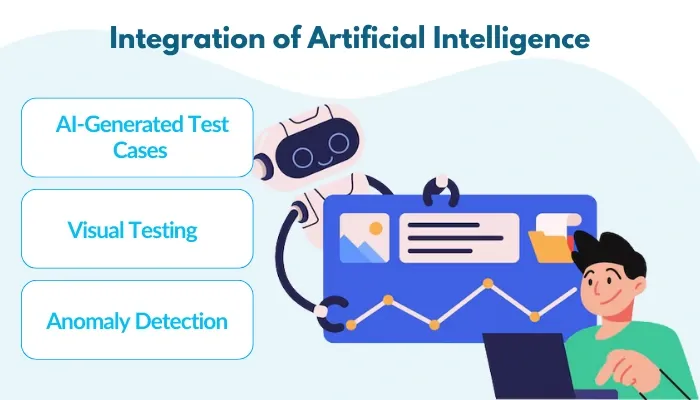

2. Integration of Artificial Intelligence: Artificial intelligence has been widely adopted in several industries, including testing. The World Quality Report 2024-25 revealed that 71% of organizations have integrated AI and Generative AI into their testing operations.

Here are some ways AI helps improve test coverage:

- Test Case Generation: By analyzing customer inputs, customer journeys, popular features, and other relevant data, AI can help identify the pivotal parts of the software, such as key user interfaces, high-traffic functionalities, security-sensitive areas, and integration points, allowing you to prioritize test cases. In addition, it can generate test cases faster than manual methods, saving you time.

- Visual Evaluation: AI can evaluate visual elements such as screenshots and videos and identify any inconsistencies, including missing buttons, varying fonts, and misaligned text, among others, which can negatively impact the user experience. Testers might miss these small details, but AI tools, such as Applitools and Functionize, ensure that visual analysis can cover them all.

- Detecting Anomalies: Testers can utilize AI to evaluate vast amounts of data generated, including metrics, KPIs, and logs. AI can use this data to identify any unusual pattern, such as sudden spikes in error rates, unusually high response times, discrepancies between expected and actual outputs, or irregular system resource consumption. These patterns could indicate a defect, saving the tester time and enhancing the overall test coverage.
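As a rough illustration of the anomaly-detection idea, the sketch below flags unusual error-rate samples with a simple z-score check. This is a statistical stand-in, not how any particular AI tool works; the function name `find_anomalies`, the sample data, and the threshold of 2.0 are all assumptions made for the example.

```python
from statistics import mean, stdev

def find_anomalies(samples, threshold=2.0):
    """Return indices of samples more than `threshold` standard
    deviations away from the mean (a basic z-score check)."""
    if len(samples) < 2:
        return []
    mu = mean(samples)
    sigma = stdev(samples)
    if sigma == 0:
        return []  # all samples identical, nothing stands out
    return [i for i, x in enumerate(samples) if abs(x - mu) / sigma > threshold]

# Hypothetical error rates (%) collected per test run.
error_rates = [0.8, 1.1, 0.9, 1.0, 1.2, 0.9, 9.5, 1.0]
print(find_anomalies(error_rates))  # index of the 9.5% spike
```

A flagged index points the tester at the run worth investigating first; real tools typically combine many signals (logs, response times, resource usage) rather than a single metric.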

3. Don’t Leave it for Testers Only: Undeniably, the primary job of a tester is to test the product as much as possible, but identifying and reducing bugs shouldn’t fall solely on their shoulders. Testing should ideally be a collaborative effort, with each role contributing to minimizing errors. Here is what each role can contribute:

- Developers: Writing clean, optimized code and using practices like TDD can improve reliability and testability. While developers aim for high-quality code, evolving project requirements, maintaining consistency across large codebases, and collaborating with multiple teams can be challenging. Tools like GitHub Actions, Jenkins, SonarQube, and Bitbucket help automate code reviews, enforce standards, enhance collaboration, and integrate testing into the development pipeline.

- Business Analysts: Business analysts are crucial in defining precise, concise, and testable requirements that guide successful projects. However, as business needs evolve and new insights emerge, requirements may need to be adjusted. By leveraging their expertise in requirement gathering and validation and using tools like Jira and Trello, business analysts can adapt to these changes seamlessly, ensuring clarity, alignment, and smooth project progression.

- Product Owners: Product owners are responsible for defining clear and actionable acceptance criteria, which serve as a benchmark for developers and testers to understand when a feature is complete and meets business requirements. Well-defined criteria ensure that development stays aligned with user needs and business goals.

However, balancing technical feasibility with business priorities can be challenging. Collaboration tools like Aha! or Productboard help streamline this process by facilitating clear communication, prioritizing features, and aligning teams around shared objectives, ensuring that acceptance criteria are practical, achievable, and well-understood by all stakeholders.

- Testers: Testers are expected to review requirements and identify risks. However, involving them only in the final stages of development can limit their ability to ensure comprehensive test coverage. Engaging testers from the beginning allows them to review requirements, identify potential risks early, and collaborate closely with developers to build high-quality software with robust test coverage.

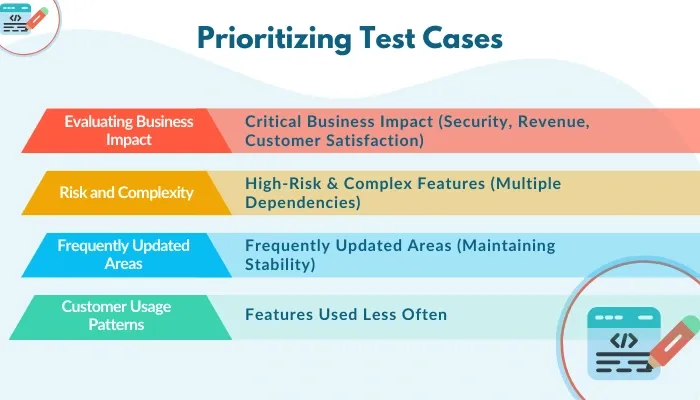

4. Prioritizing Test Cases: Prioritizing test cases is not mandatory for small software. However, ensuring high test coverage becomes complex as the software scales with new feature releases. Test case prioritization helps improve test coverage, allowing you to test essential cases first. Prioritizing test cases involves:

- Evaluating Business Impact: Prioritize test cases based on how critical a feature is to your business. If a failure could result in lost revenue, security breaches, or customer dissatisfaction, the feature should be tested thoroughly and frequently.

- Risk and Complexity: Test cases covering complex features with many dependencies should be prioritized higher, especially if they interact with multiple parts of your system.

- Frequently Updated Areas: Frequently updated software parts can introduce new issues. To maintain stability, such areas should be given priority over others.

- Customer Usage Patterns: Make sure to focus on the features your customers use most. For instance, if 80% of users interact with just 20% of your app, those high-traffic areas should be tested first.

- Tools: Tools such as BrowserStack Test Management, Xray, and TestRail can help prioritize and manage test cases.
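The prioritization factors above can be combined into a single score. The sketch below is one possible way to do it, not a standard formula: the `CaseProfile` class, the 1 to 5 rating scale, and the weights are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class CaseProfile:
    name: str
    business_impact: int    # 1 (low) to 5 (critical), assumed scale
    complexity: int         # risk and number of dependencies
    change_frequency: int   # how often the area is updated
    usage: int              # how heavily customers use the feature

# Hypothetical weights: business impact counts twice as much as the rest.
WEIGHTS = {"business_impact": 4, "complexity": 2, "change_frequency": 2, "usage": 2}

def priority_score(case):
    """Weighted sum of the four prioritization factors."""
    return sum(weight * getattr(case, field) for field, weight in WEIGHTS.items())

def prioritize(cases):
    """Return cases ordered from highest to lowest priority."""
    return sorted(cases, key=priority_score, reverse=True)

cases = [
    CaseProfile("profile_avatar", 1, 1, 1, 2),
    CaseProfile("checkout", 5, 4, 3, 5),
    CaseProfile("search", 3, 3, 4, 5),
]
for case in prioritize(cases):
    print(case.name, priority_score(case))
```

With these sample ratings, the checkout flow is tested first and the avatar upload last. Teams would tune the weights to reflect their own risk profile.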

5. Develop a Plan and Determine the Right Goals: Enhancing test coverage requires a well-defined strategy that covers all the aspects of the program under test.

- Define the aspects you want to test in your application, such as device types, operating systems, and the features your customers use.

- Go beyond standard tests and consider real-world conditions such as network fluctuations, battery constraints, different user environments, and more.

- Make sure to consider the estimated time and budget for the test. Fixing the desired time and budget beforehand allows you to complete testing within the right timeframe while preventing overspending.

- Set a dedicated goal for the test coverage percentage that your app should meet. A commonly cited benchmark is 70-80% test coverage.

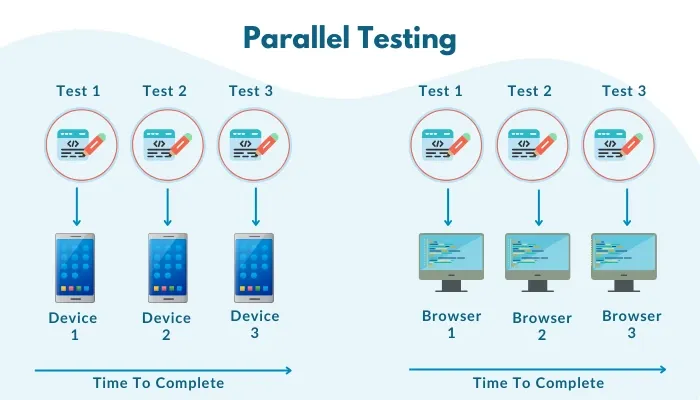

6. Parallel Testing: Parallel testing is a powerful approach to increasing test coverage while maintaining rapid development cycles. By running multiple test cases simultaneously across different environments, browsers, or devices, parallel testing ensures that software is thoroughly validated while reducing the testing time.

According to Artem Golubev, CEO and Co-Founder of testRigor, parallel testing is a sure way to achieve comprehensive and quick test coverage and is particularly beneficial for large projects with extensive test cases, as it enables faster feedback and quicker identification of issues.
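To make the idea concrete, here is a minimal sketch of running the same suite against several environments at once using Python's standard `concurrent.futures` module. The `run_suite` function is a placeholder that only sleeps to simulate work, and the environment names are assumptions; a real setup would launch browsers or devices instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor

ENVIRONMENTS = ["chrome", "firefox", "safari", "edge"]  # hypothetical targets

def run_suite(environment):
    """Placeholder for a real test suite run; sleeps to simulate work."""
    time.sleep(0.1)
    return environment, "passed"

def run_all_parallel(environments, max_workers=4):
    """Run the suite for every environment concurrently and collect results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(run_suite, environments))

if __name__ == "__main__":
    start = time.perf_counter()
    results = run_all_parallel(ENVIRONMENTS)
    elapsed = time.perf_counter() - start
    print(results)
    print(f"finished in {elapsed:.2f}s")
```

Because the four simulated runs overlap, the total time is close to that of a single run rather than the sum of all four. Test runners and cloud device grids usually offer this kind of parallelism out of the box, so hand-rolled code like this is rarely needed in practice.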

7. Use a Test Coverage Matrix (TCM): The TCM ensures that various scenarios of a feature are tested to identify hidden bugs and issues. It aligns business priorities with testing efforts, helping teams maximize coverage without unnecessary effort.

Let’s take an example of an e-commerce platform planning to release a new payment system. The business wants to ensure high test coverage while optimizing resources. Here is a TCM for its credit card transactions module:

| Case Type | Test Case |
| --- | --- |
| Default case | Verify successful payment with valid card details. |
| Boundary | Test maximum and minimum transaction limits. |
| Negative | Attempt payment with an expired card. |
| Negative | Attempt payment with the wrong CVV. |
| Negative | Attempt payment with an incorrect card number format. |
| Negative | Use a blocked card. |
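A matrix like the one above maps naturally onto table-driven tests. The following sketch shows the pattern against a toy `validate_payment` function; the function itself, the transaction limits, the card numbers, and the result strings are all hypothetical stand-ins for a real payment module.

```python
from datetime import date

MIN_CENTS, MAX_CENTS = 100, 500_000        # assumed transaction limits
BLOCKED_CARDS = {"4000000000000002"}       # assumed blocklist
TODAY = date(2025, 1, 15)                  # fixed date keeps the test repeatable

def validate_payment(card_number, cvv, expiry, amount_cents, expected_cvv="123"):
    """Toy validator standing in for the real payment module."""
    if not (card_number.isdigit() and len(card_number) == 16):
        return "invalid_card_format"
    if card_number in BLOCKED_CARDS:
        return "card_blocked"
    if expiry < TODAY:
        return "card_expired"
    if cvv != expected_cvv:
        return "wrong_cvv"
    if not MIN_CENTS <= amount_cents <= MAX_CENTS:
        return "amount_out_of_range"
    return "approved"

VALID = dict(card_number="4111111111111111", cvv="123",
             expiry=date(2027, 12, 31), amount_cents=2500)

# Each row: (case type, fields overriding the valid payment, expected result).
MATRIX = [
    ("Default", {}, "approved"),
    ("Boundary", {"amount_cents": MIN_CENTS}, "approved"),
    ("Boundary", {"amount_cents": MAX_CENTS}, "approved"),
    ("Boundary", {"amount_cents": MAX_CENTS + 1}, "amount_out_of_range"),
    ("Negative", {"expiry": date(2024, 12, 31)}, "card_expired"),
    ("Negative", {"cvv": "999"}, "wrong_cvv"),
    ("Negative", {"card_number": "4111-1111"}, "invalid_card_format"),
    ("Negative", {"card_number": "4000000000000002"}, "card_blocked"),
]

def run_matrix():
    """Run every row and return a list of mismatches (empty means all passed)."""
    failures = []
    for case_type, overrides, expected in MATRIX:
        actual = validate_payment(**{**VALID, **overrides})
        if actual != expected:
            failures.append((case_type, overrides, expected, actual))
    return failures

print(run_matrix())
```

Adding a new scenario means adding one row to `MATRIX`, which keeps the matrix and the executable tests in sync.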

KPIs to Measure the Effectiveness of Your Test Coverage

After applying the right practices, how can you measure whether your test coverage is actually adequate? That’s where KPIs come in. Tracking the right test coverage KPIs helps businesses:

- Identify gaps in testing before they impact users.

- Improve software quality by catching defects early.

- Optimize testing efforts to focus on critical areas.

Let’s break down the most crucial test coverage KPIs, their ideal targets, and why they matter.

1. Test Coverage Percentage

- The percentage of functional requirements covered by test cases.

- The ideal target is 90% or higher.

- A high test coverage percentage means your team is testing most of the app’s functionality, reducing the risk of untested features failing in production.

- For example, if your e-commerce platform has 100 business-critical user flows, at least 90 should have test cases.

2. Code Coverage

- This measures how much of the source code is executed by automated tests.

- The ideal target is 80% to 90%, but 100% for critical business logic.

- It ensures key parts of the code are tested, reducing post-release defects.

3. Defect Detection Efficiency (DDE)

- DDE helps measure the defects caught during testing versus those found in production.

- Formula: DDE = (Defects found in testing / (Defects found in testing + Defects found in production)) * 100.

- The ideal target is 85% or higher.

- The higher the DDE, the fewer defects slip into production, leading to lower support costs.
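The DDE formula is simple enough to compute directly. Here is a small sketch; the function name and the sample defect counts are illustrative assumptions.

```python
def defect_detection_efficiency(found_in_testing, found_in_production):
    """DDE as a percentage: defects caught in testing over all known defects."""
    total = found_in_testing + found_in_production
    if total == 0:
        raise ValueError("no defects recorded, DDE is undefined")
    return 100 * found_in_testing / total

# Hypothetical release: 85 defects caught in testing, 15 escaped to production.
dde = defect_detection_efficiency(85, 15)
print(f"DDE: {dde:.1f}%")
print("meets 85% target:", dde >= 85)
```

In this example the release sits exactly at the 85% target; one more escaped defect would push it below.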

4. Test Execution Coverage

- It measures the percentage of planned test cases that are executed.

- The ideal target is 95% or higher.

- If test cases aren’t executed, test coverage becomes meaningless. This KPI ensures teams are testing what they planned, avoiding any last-minute gaps.

- For example, if your test plan involves 500 test cases, at least 475 should be executed before a release to maintain a high-quality standard.

5. Requirement Coverage

- This KPI measures the percentage of business and functional requirements covered by tests.

- The ideal target is 90% or higher.

- It ensures that all core features and critical business processes are tested.

6. Automation Coverage

- Here, you’ll measure the percentage of test cases automated versus total test cases.

- The ideal target is 70% or more.

- Higher automation coverage means faster, more consistent testing and reduced manual effort.

- A good way to increase automation coverage is to automate critical workflows, high-risk areas, and repetitive tests.
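One lightweight way to track these KPIs together is to compare measured values against the targets listed in this section. The sketch below does that; the dictionary keys, helper names, and measured numbers are assumptions for illustration, and the code coverage target uses the lower bound of the 80% to 90% range.

```python
# Targets (in percent) taken from the KPI list in this section.
TARGETS = {
    "test_coverage": 90,
    "code_coverage": 80,
    "defect_detection_efficiency": 85,
    "test_execution_coverage": 95,
    "requirement_coverage": 90,
    "automation_coverage": 70,
}

def percentage(part, whole):
    """Express part/whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100 * part / whole

def below_target(measured):
    """Return the names of KPIs whose measured value is under its target."""
    return sorted(name for name, value in measured.items() if value < TARGETS[name])

# Hypothetical measurements for one release.
measured = {
    "test_execution_coverage": percentage(475, 500),  # 475 of 500 planned cases run
    "automation_coverage": percentage(300, 500),      # 300 of 500 cases automated
    "requirement_coverage": percentage(88, 100),      # 88 of 100 requirements covered
}
print(below_target(measured))
```

Here execution coverage meets its 95% target, while automation and requirement coverage are flagged for follow-up.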

Key Challenges in Test Coverage

Achieving high test coverage sounds excellent in theory; after all, the more you test, the fewer surprises in production, right? However, in reality, ensuring comprehensive test coverage is easier said than done. With that in mind, here are some hurdles you may face while pursuing high test coverage.

1. Defining Enough Test Coverage

One of the biggest struggles is figuring out how much testing is enough. Should you aim for 100% test coverage or focus only on critical business functions?

- The reality is that 100% test coverage is unrealistic and doesn’t guarantee bug-free software.

- Some low-risk areas may not need extensive testing.

- Coverage should be risk-based, focusing on business-critical and frequently used features.

2. Keeping Up with Rapid Releases

In Agile and DevOps environments, releases happen daily, weekly, or sometimes multiple times a day. Ensuring optimum test coverage in this rapid environment is a significant challenge.

- Implement parallel testing to run multiple test cases simultaneously and save time.

- Shift testing left and right, that is, start testing earlier in development and keep validating after release, to catch issues throughout the lifecycle.

3. Handling Legacy Systems & Third-Party Integration

Modern applications don’t exist in isolation. They rely on legacy systems, third-party APIs, and external services, which makes it challenging to ensure test coverage. Moreover, older systems may not have proper documentation, making it hard to write test cases.

- Maintain an API contract with third-party vendors and monitor for changes.

- Use service virtualization to simulate external dependencies in test environments.

- Gradually increase test coverage for legacy systems instead of trying to overhaul everything at once.
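Full service virtualization usually involves dedicated tooling, but the underlying idea of replacing an external dependency with a controllable stand-in can be shown with Python's built-in `unittest.mock`. In this sketch, `convert_price` and the `get_rate` client method are hypothetical; only `Mock` and its assertion helper come from the standard library.

```python
from unittest.mock import Mock

def convert_price(amount, currency, rates_client):
    """Code under test: depends on an external exchange-rate service."""
    rate = rates_client.get_rate("USD", currency)
    return round(amount * rate, 2)

def test_convert_price_with_stubbed_service():
    # Stand-in for the third-party API: no network call is made.
    fake_client = Mock()
    fake_client.get_rate.return_value = 0.5

    assert convert_price(10.0, "EUR", fake_client) == 5.0
    # Verify the code asked the service for the expected currency pair.
    fake_client.get_rate.assert_called_once_with("USD", "EUR")

test_convert_price_with_stubbed_service()
print("stubbed service test passed")
```

Because the stub returns a fixed rate, the test stays fast and deterministic even when the real service is slow, rate-limited, or unavailable.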

4. Test Data Management Issues

Good test coverage requires realistic and diverse test data. However, managing test data is one of the most overlooked challenges. Manually creating test data is time-consuming and error-prone, and production data can’t always be used due to privacy and compliance restrictions.

- Use data generation tools like Mockaroo, Datafaker, Synthea, or Faker.js to create diverse and anonymized test datasets.

- Automate test data provisioning to ensure fresh and relevant data for every test run.

- Test across different data scenarios, including large datasets, missing values, and extreme inputs.
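As a minimal sketch of automated test data provisioning, the code below generates synthetic customer records using only Python's standard library rather than any of the tools named above. The record fields, the 10% missing-value rate, and the extreme-value row are assumptions chosen to mirror the scenarios in the list.

```python
import random
import string

def make_customer(rng):
    """Build one synthetic customer record with no real personal data."""
    name = "".join(rng.choices(string.ascii_lowercase, k=8))
    return {
        "name": name,
        "email": f"{name}@example.test",
        "age": rng.randint(18, 90),
        "order_total": round(rng.uniform(0, 500), 2),
    }

def make_dataset(size, seed=42, missing_rate=0.1):
    """Generate `size` records plus one extreme row; seeded for repeatability."""
    rng = random.Random(seed)
    rows = [make_customer(rng) for _ in range(size)]
    for row in rows:
        if rng.random() < missing_rate:
            row["email"] = None  # simulate a missing value
    # One deliberately extreme record to exercise input limits.
    rows.append({"name": "x" * 255, "email": None, "age": 0, "order_total": 10**9})
    return rows

dataset = make_dataset(100)
print(len(dataset), "records generated")
```

Seeding the generator means a failing test can be reproduced with exactly the same data, while changing the seed gives fresh data for each run.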

Conclusion

By now, you have a solid understanding of what test coverage is, how to spot weak coverage, ways to improve it, and the challenges that come with it. Keep in mind that the key is not to chase numbers but to test the right things in the right way to catch issues before they become costly failures.

Balancing automation with manual testing, using smart data generation tools, and focusing on high-risk areas can make a huge difference. And if it feels overwhelming, working with a professional testing company can help you fill gaps, refine your strategy, and speed up the process.
