The Expert Guide to Enterprise Software Testing

Who Needs to Read This?

Honestly, if you have any hand in software, whether you’re managing quality assurance, building products, making business decisions, or just looking to expand your knowledge of enterprise software testing, this guide is for you. It’s not just about the technical nuts and bolts (though there’s plenty of that); it’s about understanding why testing large, complex systems is so different from what most people imagine.

And, really, why quality assurance isn’t just a checkbox, but a core piece of keeping businesses running smoothly. You’ll see how testing has changed—tools, methods, even the mindset. But more importantly, you’ll see why it matters. A lot.

The Cost and Consequence of Software Failures

It’s hard to overstate how much is at stake. Every year, enterprise software failures drain more than $60 billion from the global market. That’s not just a made-up number for drama; it’s hundreds of millions of developer hours, gone. Lost to bugs, downtime, and the slow churn of trying to fix problems that, honestly, might have been caught earlier.

These aren’t just technical hiccups, either. When something goes wrong, the ripple effect can be huge: productivity grinds to a halt, new ideas get shelved, and businesses—big and small—lose momentum.

Take the Microsoft Azure Cloud Server outage, for example. The fallout wasn’t abstract. Flights got cancelled. People couldn’t access their bank accounts. Even hospital staff, for a while, were cut off from patient records. It’s kind of unsettling when you really think about it—just one missed issue can cascade into so many corners of everyday life.

The takeaway? For enterprise software, a single error can stop vital functions in their tracks. The cost isn’t just financial; it’s deeply practical, and sometimes, even personal.

Why a Single Defect Can Become a Business Crisis

It’s easy to think of software bugs as just technical problems, but in enterprise settings, they’re often much more than that. Here’s what a single overlooked defect can trigger:

- Operational chaos: Suddenly, teams can’t do their jobs. Productivity tanks.

- Security risks: Sensitive data gets exposed. The fallout can last years.

- Compliance nightmares: Penalties rack up fast if you’re not careful.

- Trust issues: Customers lose confidence—and it’s incredibly hard to earn back.

That’s why testing can’t just be a box to check. It’s not an afterthought. It’s really a business strategy at this point—a way to protect the company, the people who rely on it, and its reputation. Some might even say it’s the backbone of continuity.

How Enterprise Software Testing Has Evolved

Testing used to be mostly manual—someone, somewhere, going through checklists and trying to break things before release. Now, that’s only a fraction of what’s going on. The approach is a lot more sophisticated:

- Testing shifts left and right: It’s part of every stage, not just the end.

- CI/CD pipelines: Continuous integration and delivery catch problems early and often.

- Automated regression suites: Machines handle the routine, so people can focus on the complex.

- AI-powered test generation: Coverage gets smarter, faster, and more adaptable.

- Cloud-based environments: Tests can scale to match real-world conditions—no more guessing.

Who Is This Guide For?

Whether you’re managing a QA team, writing code, making business decisions, or just trying to get a better handle on software quality, this guide is meant for you. You’ll find:

- Core methodologies that actually work

- The newest tools and frameworks (without the marketing fluff)

- Best practices you can use, not just read about

- A look ahead at where enterprise testing is headed

Because, let’s face it—understanding how to test enterprise software isn’t just helpful anymore. It’s mission-critical. And in a world that runs 24/7, there’s really no room for error.

Understanding the Enterprise Software Testing Landscape

Enterprise software is everywhere. It keeps day-to-day operations moving, handles the money, and helps manage big-picture business goals. But because these systems are so versatile—and so absolutely critical—testing them is a whole different challenge compared to smaller, consumer apps.

Let’s break down why it’s such a tough job.

Key Challenges in Enterprise Software Testing

- Integration Complexities: Here’s the thing: enterprise systems almost never stand alone. They connect with old databases that have been around forever, plug into a mix of cloud services, interact with third-party APIs, and somehow need to play nicely with a bunch of other internal tools.

So, what does that mean for testing?

- You have to make sure all these moving parts keep talking to each other without missing a beat.

- Data has to flow smoothly, no matter how many platforms are involved.

- And when you roll out updates or bring in something new from the outside, everything still needs to work—no glitches, no dropped connections.

It sounds simple in theory, but in practice, integration testing is usually one of the biggest headaches.

- Security and Compliance: Then there’s security. Enterprise applications deal with a ton of sensitive information: customer data, financial records, sometimes even healthcare details.

A few things to keep in mind:

- These systems are high-value targets for attackers, which means security can never be an afterthought.

- Testing isn’t just about making sure features work; you have to consider every kind of data, every user scenario, and how someone might try to break in.

- Compliance isn’t optional. Laws like GDPR or HIPAA come with strict requirements, and the cost of slipping up is high—sometimes painfully so.

- Complicated Roles and Business Logic: One last thing: user roles and business rules.

Small apps might serve a handful of people with similar needs. Enterprise software? You’ve got employees, customers, vendors, partners—each with their own permissions and ways of interacting with the system.

Testing here means:

- Validating that everyone only sees what they’re supposed to, and nothing more.

- Checking that all the workflows make sense for every role (because a process that works for one group might be a disaster for another).

- Making sure the software enforces complicated business rules and logic, no matter who’s using it or what they’re trying to do.
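One way to make the "everyone only sees what they’re supposed to" check concrete is to test an explicit access matrix, including the pairs that must be denied. The sketch below is only an illustration: the roles, actions, `GRANTS` table, and `is_allowed` function are all hypothetical stand-ins for whatever access-control layer your system really has.

```python
# Hypothetical system under test: permissions granted to each role.
GRANTS = {
    "employee": {"view_own_payslip"},
    "vendor": {"submit_invoice"},
    "finance_admin": {"view_own_payslip", "approve_invoice"},
}


def is_allowed(role, action):
    """Deny by default: unknown roles and ungranted actions are refused."""
    return action in GRANTS.get(role, set())


# Expected access matrix, written from the business rules rather than from
# the implementation. Denied pairs are listed explicitly on purpose.
EXPECTED = {
    ("employee", "view_own_payslip"): True,
    ("employee", "approve_invoice"): False,
    ("vendor", "submit_invoice"): True,
    ("vendor", "view_own_payslip"): False,
    ("vendor", "approve_invoice"): False,
    ("finance_admin", "approve_invoice"): True,
    ("unknown_role", "approve_invoice"): False,
}


def find_access_violations(check=is_allowed):
    """Return every (role, action) pair where the check disagrees with EXPECTED."""
    return [
        (role, action)
        for (role, action), expected in EXPECTED.items()
        if check(role, action) != expected
    ]
```

An empty result from `find_access_violations()` means the implementation matches the matrix. Passing in a deliberately leaky check, such as one that allows everything, is a quick way to confirm the test can actually fail.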

Understanding these challenges is the first step. The next is seeing how enterprise testing differs from testing small-scale applications.

Understanding the Key Differences

| Feature | Small-Scale Applications | Enterprise Software |
| --- | --- | --- |
| Number of Users | Few users, usually with similar needs | Large, diverse user base with unique requirements |
| Complexity | Fewer features, simple business logic | Many features, multiple roles, complex logic |
| Test Environments | Just dev and prod, maybe a staging area | Multiple: dev, staging, UAT, prod mirrors |
| Risk | Failures hit a small group; limited impact | Failures can disrupt business, cause financial loss, and even legal trouble |

Testing for a handful of users with one or two main use cases is one thing. But testing for thousands—or even millions—of users, each with their own roles, permissions, and expectations? That’s a whole different challenge. It’s like comparing a bike tune-up to maintaining an airplane. Both are important, but the stakes (and complexity) aren’t remotely the same.

How Enterprise Software Testing Has Evolved

Back in the day, testing happened at the end, after the entire development process was complete. That sequential approach is the Waterfall model. For most applications it is now a thing of the past: Agile methodology, which focuses on iterative development and closer collaboration between teams, has largely replaced it.

But that’s not all. Enterprise software development has also seen the following changes for the better:

- Rise of Automation: Because enterprise systems are so much larger, testing them takes more effort than testing smaller applications. Automation has become standard practice for repetitive test cases, such as regression suites, while manual testing is kept for one-off and edge-case work.

- Scripted to Intelligent Testing: Traditional scripted test cases are being replaced or enhanced by AI-powered testing tools that auto-generate tests, prioritize them based on risk, and self-heal broken tests, making testing faster, more intelligent, and more adaptive.

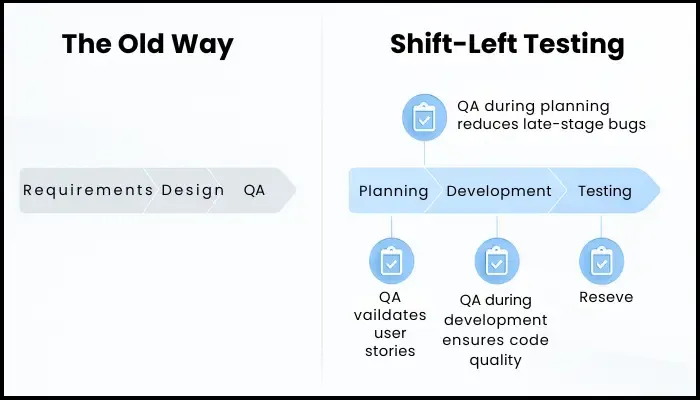

- Shift-Left/Right Approach: Testing has moved from being a post-development task to a continuous process. While shift-right focuses on incorporating testing into production, shift-left prioritizes testing in the early phases of development.

Without a doubt, these advancements make enterprise software testing more effective. But what if the tests performed are not up to the mark, or the testing itself is inadequate?

The Real Cost of Inadequate Enterprise Testing

It’s tempting to skip thorough testing, especially when deadlines loom. But the consequences can be serious:

- Financial losses due to frequent bugs, system downtime, lost sales, reduced productivity, and increased operational expenses.

- Reputational damage, leading to customer churn and difficulty attracting new clients.

- Operational disruptions leading to delays, missed deadlines, and a significant impact on service delivery.

- Frequent data breaches due to a lack of security testing.

- Increased technical debt by releasing the product without proper testing.

Modern testing—automation, AI tools, and continuous quality processes—isn’t just “nice to have.” It’s the foundation for protecting revenue, brand reputation, and customer trust in a world where software has to work all the time.

Our Comprehensive Approach to Enterprise Testing

When it comes to enterprise software, there’s no one-size-fits-all approach to testing. These systems are just too complex. So, if you’re involved in QA, engineering, or even the business side, it’s important to recognize that a solid testing strategy needs multiple layers—everything from the basics all the way to the advanced stuff. We recommend a holistic, future-ready testing strategy, refined through years of real-world delivery for Fortune 1000 clients. Here’s how it breaks down:

- Functional Testing Approaches for Enterprise Applications: First, you need to make sure the core features work—no exceptions. But with enterprise systems, it’s rarely just a handful of features; it’s often a sprawling, interconnected set. That’s where functional testing comes in.

- Unit Testing: This is where you get right down to the smallest parts of the application. For enterprise systems, unit tests tend to be pretty involved—lots of mocking and stubbing to account for all those dependencies that pop up.

- Smoke Testing: Before going deep, you need to know the basics aren’t broken. Smoke tests check the critical functions, acting as a quick “sanity check” before more thorough testing starts.

- Sanity Testing: After you make a change—maybe a bug fix or new feature—sanity testing quickly checks if that specific part works, and that you haven’t introduced any new, glaring issues.

- Non-Functional Testing Requirements: Functionality is just the beginning. Enterprise software has to perform, scale, and stay secure. That’s where non-functional testing comes in—basically, “Does it work well, not just work?”

- Security Testing: With so much sensitive data on the line, you can’t cut corners. This means vulnerability scans, penetration testing, data privacy checks, and more.

- Performance Testing: How does the app hold up under pressure? You’ll want to test for speed, scalability, and stability with everything from load to endurance testing—especially since these systems often deal with heavy, unpredictable usage.

- Usability Testing: Fancy features don’t matter if people can’t use them. Usability testing checks that the application is actually easy (and efficient) for everyone, including users with disabilities.

- Compatibility Testing: Enterprises often have a wild mix of hardware, operating systems, and environments. You need to make sure the software plays nicely with all of them.

- Integration Testing: Enterprise apps are rarely “standalone.” They’re stitched together with other modules, APIs, and legacy systems. Integration testing is all about making sure these connections work—because even one broken link can cause headaches.

- Is data flowing correctly between systems?

- Are dependencies managed, so changes in one spot don’t break something else?

- Do error handling and rollback mechanisms kick in when they’re supposed to?

- And is the whole chain, end-to-end, actually functioning as it should?

- System and End-to-End Testing: At some point, you have to zoom out and see if everything works as a whole.

- System Testing: Does the entire application—every feature and non-functional aspect—meet expectations? This usually happens in a setup that mimics the real world.

- End-to-End Testing: Here, testers walk through real business processes from start to finish, often uncovering issues that only appear when everything interacts together.

- User Acceptance Testing (UAT): This is where the real-world test happens. It’s not just about passing technical requirements, but about whether the software actually fits business needs and workflows.

- Business-Acceptance Testing: Real users and business experts run through their everyday scenarios to confirm the software really does solve their problems.

- Cross-Departmental Involvement: Since enterprise apps usually touch multiple teams, you need input from all sides to ensure you’re covering everyone’s needs.

- Beta Testing: Before launch, the software is given to a select group (sometimes internal, sometimes external) for one last round of feedback. Often, this is where you catch the “it only happens to me” bugs.

No single method will cover it all. But by layering functional, non-functional, integration, system, end-to-end, and user acceptance testing, enterprises can deliver software that’s not only robust and secure, but also genuinely useful—meeting real business requirements and keeping users happy, even as things scale.
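To illustrate the "lots of mocking and stubbing" that enterprise unit tests tend to involve, here is a minimal sketch using Python’s standard `unittest.mock`. The `InvoiceService` class and its `tax_client.get_rate` dependency are hypothetical; the point is only that the external dependency is replaced by a stub so the unit can be tested in isolation.

```python
from unittest.mock import Mock


class InvoiceService:
    """Hypothetical service that depends on an external tax-rate client."""

    def __init__(self, tax_client):
        self.tax_client = tax_client

    def total(self, net_amount, country):
        # The rate comes from a dependency we do not want to call in a unit test.
        rate = self.tax_client.get_rate(country)
        return round(net_amount * (1 + rate), 2)


def test_total_applies_tax_rate():
    # Stub the dependency: no network call, fully deterministic.
    tax_client = Mock()
    tax_client.get_rate.return_value = 0.20

    service = InvoiceService(tax_client)

    assert service.total(100.0, "GB") == 120.0
    # Also verify the interaction with the dependency.
    tax_client.get_rate.assert_called_once_with("GB")


test_total_applies_tax_rate()
```

In a real suite a test runner such as PyTest would discover and call the test function; the direct call at the end is only there so the sketch runs on its own.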

Shift-Left Testing in Enterprise Environments

Let’s talk shift-left testing—because honestly, it feels a bit like trying to bake a cake while still mixing the ingredients. You want quality baked in from the start, not just inspected at the end.

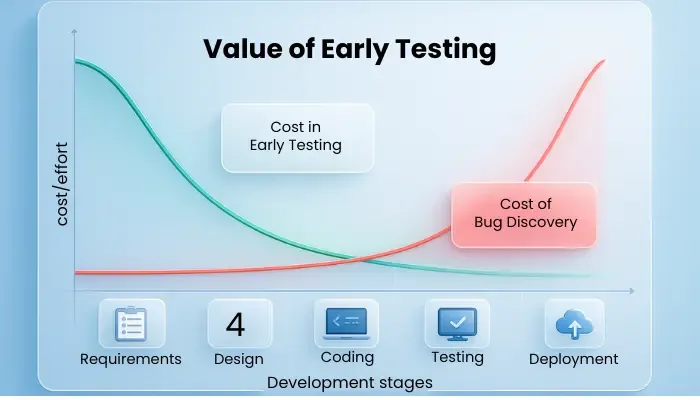

For years, we’d build full features and toss them to QA like handing off a half-finished painting for critique. But things have changed—big time. Shift-left testing says: let’s catch bugs early, not after everything is built. And that shift comes with some real upsides:

- You spot defects before they turn into monsters.

- You avoid costly rework cycles.

- Quality improves because tests are part of the process, not an afterthought.

- You ship faster—fewer surprises, smoother releases.

- Teams talk more. Devs, QA, and security folks start collaborating earlier.

- Test coverage goes up because you’re not scrambling to test at the end.

- Start Testing Early in the SDLC: Shift-left isn’t magic. You need a plan.

Start by bringing QA into requirement-gathering sessions. Think of it like planning a road trip with someone who’s great at spotting potholes and detours. They’ll flag edge cases and missing validations before code even exists.

Once development begins, integrate automated unit and integration tests into your CI pipeline. Every commit triggers tests. Every break gets flagged fast.

Static analysis tools like SonarQube and Fortify help too. They scan for code quality and security issues before you even hit “run.” Meanwhile, QA can write and run tests in parallel with development—not stuck waiting at the end of the line.

- Developers: Quality Starts With You: One big misconception? That testing is QA’s job alone.

In shift-left, developers take the front seat. That means:

- Writing and maintaining unit tests.

- Practicing secure coding to prevent issues upfront.

- Doing peer reviews and pair programming—not just for knowledge sharing, but as built-in bug hunts.

It’s a mindset shift: quality isn’t added later. It’s built in from line one.

- TDD: Red, Green, Refactor: Test-Driven Development (TDD) follows a simple rhythm:

- Red: Write a test that fails. You haven’t written the code yet, so of course it fails—but it helps define what you’re building.

- Green: Write the minimum code to make it pass. It might be messy, but it works.

- Refactor: Clean up the code. Simplify it. Just don’t break the test.

In big orgs, TDD can be a lifesaver—especially for critical systems. It takes discipline, and not every team sticks the landing on day one. But over time, it pays off in cleaner design and more reliable code.
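Here is a small sketch of that red, green, refactor rhythm. The bulk-discount rule and the `discounted_total` function are invented for the example. The test is written first; if you ran it before the function existed, it would fail with a `NameError`, which is the "red" step.

```python
# Red: the test comes first and describes the behaviour we want.
# Running it before discounted_total exists fails with a NameError.
def test_bulk_discount():
    assert discounted_total(quantity=5, unit_price=10.0) == 50.0    # below threshold: no discount
    assert discounted_total(quantity=10, unit_price=10.0) == 90.0   # at threshold: 10% off


# Green, then refactor: the first passing version might hard-code 10 and 0.10
# inline; the refactor step pulls them out into named constants while the
# test keeps passing.
BULK_THRESHOLD = 10
BULK_DISCOUNT = 0.10


def discounted_total(quantity, unit_price):
    total = quantity * unit_price
    if quantity >= BULK_THRESHOLD:
        total *= 1 - BULK_DISCOUNT
    return round(total, 2)


test_bulk_discount()
```

The value of the loop is less in this tiny function than in the habit: every new rule (a second discount tier, say) starts as a failing assertion.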

- Use the Right Tools: Best practices don’t mean much without solid tools. Here’s what teams often rely on:

- Static Analysis: SonarQube, Fortify

- Test Management: Zephyr, TestRail, PractiTest

- CI/CD: Jenkins, Azure DevOps, GitLab CI

- TDD-Friendly IDEs: IntelliJ IDEA, Visual Studio, Eclipse

- Unit Testing Frameworks: JUnit, NUnit, PyTest, TestNG

Also worth mentioning: load testing and security scans should shift left too, where possible. Tools like JMeter or OWASP ZAP can fit into early pipelines with some effort.

At the end of the day, shift-left testing isn’t about more meetings or fancy buzzwords; it’s about weaving quality into every step. It may feel awkward at first—like learning to juggle while riding a bike—but once you get the hang of it, you’ll wonder how you ever did without it.

Automated Testing Frameworks for Enterprise Applications

Alright, now let’s shift gears to talk about automated testing frameworks in enterprise settings—because, well, manual scripts can only take you so far when you’ve got dozens of modules to validate.

Sometimes it feels like running a marathon by yourself; you can do it, but wouldn’t you rather have a pace team? Automation frameworks are that pace team—yes, they cost time and money to set up, but you get dividends in faster releases and fewer facepalms down the road.

- Building Scalable Test Architectures: Think of your framework as a set of Lego blocks. You want modular pieces—Page Object Models, keyword-driven bits—so when the UI does that thing it always does (move a button) you’re not rewriting every test. And remember the testing pyramid? Unit tests forming the bedrock, API tests in the middle, and UI or E2E tests at the top. If you flip it, you’ll crash—too many brittle UI tests, too few solid unit checks.

Cloud environments are the unsung heroes here. Containerize your tests, run ’em in parallel, spin up Windows, Linux, who-knows-what, all at once. It’s like having dozens of helpers instead of just you and your coffee mug.

- Picking Your Automation Battles: Not every test is automation-worthy. Repeat offenders, like the smoke tests you run after every build? Absolutely. Critical-path functionality, yes. Performance stress tests (manually? Ha!). Cross-browser runs across Chrome, Firefox, Safari: gotta be automated. And if you’re dealing with piles of data, manual steps will kill you; let your framework handle the grunt work.

- Taming Test Data: Here’s where many projects stumble: test data. If your data’s a mess, your tests are brittle. Keep your datasets relevant and versioned in source control—no more mysterious dependencies on "that one record in staging." Make each test stand on its own; if Test A leaves leftovers, Test B shouldn’t trip over them.

- Proving the ROI: At the end of the day, your CFO doesn’t care about fancy frameworks—she cares about dollars saved and faster rollouts. Track defect detection rates, time-to-run comparisons versus manual, reduction in release cycle times, and overall test coverage percentages. Show the numbers: that’s how you turn a technical initiative into a boardroom win.

And there you go—automation frameworks aren’t just for the big leagues. With the right architecture, selective focus, solid data management, and clear ROI metrics, you’ll automate like a pro—and maybe, just maybe, you’ll have more time for that second cup of coffee.
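The Page Object Model mentioned in the architecture discussion above can be sketched in a few lines. This is an illustration under assumptions: `FakeDriver` is a made-up stand-in so the example runs without a browser, and the locators and `LoginPage` class are hypothetical. With Selenium or Playwright, the page object would wrap the real driver in the same way.

```python
class FakeDriver:
    """Stand-in for a real browser driver so the sketch runs without a browser."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def type(self, locator, text):
        self.fields[locator] = text

    def click(self, locator):
        self.clicked.append(locator)


class LoginPage:
    """Page object: the only place that knows the login page's locators."""

    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type(self.USERNAME, username)
        self.driver.type(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_login_submits_credentials():
    driver = FakeDriver()
    LoginPage(driver).log_in("qa_user", "s3cret")

    # The test speaks in terms of the page object, not raw selectors.
    assert driver.fields[LoginPage.USERNAME] == "qa_user"
    assert driver.clicked == [LoginPage.SUBMIT]


test_login_submits_credentials()
```

If the login button’s selector changes, only `LoginPage.SUBMIT` needs updating; the tests that call `log_in` stay as they are.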

Performance and Load Testing for Enterprise Systems

Switching gears again, let’s tackle performance and load testing—because when your app starts feeling like a crowded subway at rush hour, that’s when headaches kick in.

Enterprise systems juggle tons of functionality. More features means more users hammering the same doors at once—if your code can’t handle the crush, your business eats the fallout.

- Mapping Out Your Performance Campaign: Don’t just wing it. Kick off with clear objectives: “We need sub-200ms on this checkout flow under 1,000 concurrent users”—that sort of thing. Tie each goal to an SLA or threshold. Next, model real-world usage: what pages and processes do users slam repeatedly? Then decide your weapons: stress tests, spike tests, volume bursts, scalability checks—pick the right flavor for each scenario.

- Load Testing Tactics for High-Volume Havens: Load testing is your rehearsal for opening night. Simulate peak traffic—thousands of users logging in or transactions ticking off at once—to see if the stage buckles. Test different roles concurrently: admin, power user, guest. Establish a baseline performance, then compare every run to spot regressions. And don’t forget capacity testing: ramp up until the system groans, so you know your absolute limits.

- Pushing Limits: Stress and Endurance: Load tests cover the expected. Stress tests break past it. Push your system until it snaps—then observe how it heals. Endurance (aka soak) testing holds a steady load for hours or days to unearth slow leaks: memory hogs, database pool exhaustion, creeping latency. It’s not glamorous, but it’s where real-world gremlins hide.

- Metrics That Matter: Numbers speak louder than buzzwords. Track response times, concurrent user caps, CPU and memory footprints, error rates under every condition. Then marry those metrics: did CPU spikes align with response lags? Use dashboards to overlay baselines, peaks, and breaks. Finally, distill it into action items—highlight the hotspots, recommend optimizations, and let the dev team work their magic.

And that’s the gist: when you plan rigorously, simulate faithfully, push boundaries, and decode the data, your enterprise system won’t just limp along—it’ll sprint, even under the heaviest crowds.
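As a rough sketch of the "tie each goal to a threshold, then compare every run to a baseline" idea, the snippet below computes a 95th-percentile latency and checks it against an SLA and a previous baseline. The function names, the nearest-rank percentile method, and the 10% regression tolerance are assumptions made for the example, not a standard; load tools such as JMeter or k6 report percentiles themselves.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a list of numbers (pct between 0 and 100)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_run(latencies_ms, sla_ms, baseline_p95_ms, tolerance=0.10):
    """Compare one load-test run against an SLA and a previous baseline."""
    p95 = percentile(latencies_ms, 95)
    return {
        "p95_ms": p95,
        "meets_sla": p95 <= sla_ms,
        # Flag a regression when p95 is more than `tolerance` worse than baseline.
        "regressed": p95 > baseline_p95_ms * (1 + tolerance),
    }


# Synthetic sample: 100 response times from 101 ms to 200 ms.
result = evaluate_run(list(range(101, 201)), sla_ms=200, baseline_p95_ms=170)
print(result)
```

A run can meet its SLA and still count as a regression against the baseline, which is exactly the case worth catching before the SLA itself is at risk.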

Security Testing in Enterprise Testing Context

Next, let’s talk about security testing in enterprise land, because if your code is a castle, security tests are its moat and drawbridge.

When data breaches are climbing like unwelcome ivy, and regulators are breathing down your neck, you need security underpinning every QA step. It’s not a checkbox; it’s the backbone of trust.

- Weaving Security into QA: Remember shift-left? Security gets the same treatment. Involve security folks at design time, not just after everything's built. Devs should practice safe coding—sanitize inputs, use strong encryption, enforce access controls—and those checks get codified as SAST scans in your CI/CD. Meanwhile, DAST tools should barge in during runtime, poking for holes your code analysis might miss.

- Leaning on Security Frameworks: You don’t invent your own security universe—use the tried-and-true:

- OWASP Top 10: Your devs’ cheat sheet for the web’s greasiest exploits.

- NIST Cybersecurity Framework: Big-picture guidelines to shape your org-wide defenses.

- CWE Top 25: The programmer’s hall of shame for the most dangerous slip-ups.

Map your testing efforts to these lists—if you don’t test for Injection, XSS, or Broken Auth, you might as well leave the front door open.
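For a feel of what "testing for Injection" can look like at the unit level, here is a minimal sketch using Python’s built-in `sqlite3`. The `users` table, the `find_user` function, and the payload are hypothetical; the test simply asserts that a classic injection string is treated as data, which holds because the query uses a `?` placeholder instead of string formatting.

```python
import sqlite3


def find_user(conn, username):
    """Look up a user with a parameterized query (placeholder, not string formatting)."""
    cur = conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    )
    return cur.fetchall()


def make_test_db():
    """Build a throwaway in-memory database with two known users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def test_injection_payload_matches_no_rows():
    conn = make_test_db()
    payload = "' OR '1'='1"  # classic injection attempt

    # The payload is compared as a literal string, so nothing matches.
    assert find_user(conn, payload) == []
    # A legitimate lookup still works.
    assert find_user(conn, "alice") == [(1, "alice")]
    conn.close()


test_injection_payload_matches_no_rows()
```

A test like this complements SAST and DAST scans rather than replacing them: it pins down one known-bad input for one function.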

- Pen Testing: The Ethical Hack: Think of pen testing as hiring a friendly burglar to break in so you can fix the locks. Pick your flavor:

- Black box: Zero knowledge, like a stranger prowling around.

- White box: Full source-code access—your own dev team turned red team.

- Grey box: A little peek under the hood—simulating an insider threat or partner.

Each uncovers different weaknesses, so mix and match over time.

- Compliance and Checklists: Regulations aren’t just red tape—they’re guardrails:

- HIPAA for health data.

- PCI DSS for payment card info.

- GDPR when you touch EU personal data.

- ISO 27001 for an all-hands-on-deck security program.

Compliance testing means running audits on logs, encryption standards (TLS everywhere, with legacy SSL disabled), and proof that your data trails can’t be tampered with.

- Wrangling Vulnerabilities: Found a hole? Don’t let it fester:

- Prioritize by risk: Critical vuln in auth beats a typo in UI code every time.

- Team up: Dev, security, and QA all hands on deck for fixes.

- Retest and regress: Every patch needs unit tests and a quick regression sweep.

- Keep watch: After launch, tools like RASP or WAFs monitor live traffic for anomalies.

And there we have it—security isn’t a one-off pen test or a quarterly audit. It’s a continuous cadence, embedded early and iterated often, so your systems don’t just survive—they stand strong against whatever the internet throws at them.

Test Management Strategies for Enterprise QA Teams

Next, let’s turn to the unsung hero behind QA success: test management strategies. Even the best tests need organization, or they turn into spaghetti and chaos.

Building a robust enterprise QA practice isn’t just about fancy tools; it’s about people, processes, and a dash of project wrangling.

- Assembling Your QA Avengers: Your QA squad needs variety—like a superhero team, each with unique strengths. You’ll want:

- A QA manager to steer the ship (think Nick Fury).

- Manual testers for those one-off, edge-case sleuthing missions.

- Automation engineers to crank out scripts that never sleep.

- Performance test engineers so your app doesn’t keel over when things get intense.

- QA analysts to slice and dice test results into actionable insights.

- Security test engineers for the moat-and-drawbridge ops.

- Test data engineers who make sure your tests aren’t running on junk data.

- Documentation: Your QA North Star: Without proper docs, you’re navigating in the dark. Keep these on hand:

- Test strategy and test plan to sketch the high-level map.

- Test cases and scripts—the step-by-step guides.

- Test data specs so you’re never guessing JSON payloads.

- Test logs and bug reports to track every hiccup.

- Test reports to close the loop and celebrate (or commiserate) on results.

Stash them all in a centralized repo—Jira, TestRail, Azure Test Plans—so nobody’s emailing spreadsheets back and forth.

- Keeping Environments in Line: A flaky test environment is like baking in a wonky oven—you’ll never know if it’s the recipe or the temperature. Lock down your test lands by:

- Assigning dedicated environments per project and team.

- Iterating on those environments—tweak configs, prune cruft, optimize costs.

- Staying transparent about who owns what and when they’re available.

- Tracking resource usage so you’re not overprovisioning (or scrambling for VMs at midnight).

- Taming the Defect Zoo: When bugs show up, you need a central HQ to manage them:

- A defect management system—your single pane of glass for all reported issues.

- A standard bug template: unique ID, steps to reproduce, expected vs. actual, environment details, screenshots—no mystery bugs allowed.

- Regular root cause analyses on stubborn or critical defects—because knowing why they happened stops them from returning.

- Metrics: Your QA GPS: You can’t improve what you don’t measure.

Keep an eye on:

- Test coverage: What percentage of features or code paths are under test?

- Defect leakage rate: How many bugs slip to production?

- Automation coverage: How much of your test suite is automated?

- Execution rate: How fast are tests running versus plan?

- Defect aging: How long do bugs hang out before resolution?

- Cycle time: From test design to execution to closure.

Dashboards in Power BI, Jira, TestRail, or Grafana can turn raw data into storyboards, guiding your QA strategy forward.
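A few of these metrics are simple ratios, and it helps to pin down the formula before wiring up a dashboard. The sketch below shows one plausible set of definitions. Teams define defect leakage in different ways, so treat the formulas, function names, and sample numbers as assumptions.

```python
from datetime import date


def defect_leakage_rate(found_in_production, found_before_release):
    """Share of all known defects that escaped to production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def automation_coverage(automated_cases, total_cases):
    """Share of the test suite that runs without manual steps."""
    return automated_cases / total_cases if total_cases else 0.0


def average_defect_age_days(opened_dates, today):
    """Mean number of days the still-open defects have been waiting."""
    if not opened_dates:
        return 0.0
    return sum((today - opened).days for opened in opened_dates) / len(opened_dates)


# Made-up sample numbers, for illustration only.
print(defect_leakage_rate(found_in_production=5, found_before_release=45))
print(automation_coverage(automated_cases=300, total_cases=400))
print(average_defect_age_days([date(2024, 1, 1), date(2024, 1, 11)], today=date(2024, 1, 21)))
```

Whatever definitions you settle on, write them down next to the dashboard so the trend lines mean the same thing to everyone reading them.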

And there it is—a wrap on test management that feels more like coaching a sports team than ticking boxes. With the right people in place, clear docs, stable environments, disciplined defect workflows, and sharp metrics, your enterprise QA game will be solid, scalable, and maybe even a little bit fun.

Enterprise Testing Tools

Practices and methodologies matter, but tools also contribute significantly to making enterprise testing a success, and picking the right ones is a significant decision for any enterprise QA team. The following sections compare enterprise testing tools to help you pick the right ones for your software.

1. Test Management Platforms

Enterprise-grade test management requires centralized control, traceability, DevOps QA integration, and multi-team collaboration.

| Tool | Key Features | Best For |
| --- | --- | --- |
| TestRail | Scalable test case management, integrations with Jira, and CI/CD support. | Agile QA teams and mid to large enterprises. |
| Zephyr (Jira) | Native Jira integration, BDD support, and automation sync. | Teams deeply embedded in the Atlassian ecosystem. |
| qTest by Tricentis | Advanced test planning, real-time dashboards, and automated test orchestration. | Large teams needing cross-tool visibility. |
| PractiTest | Requirement-test-bug traceability and audit logs. | Regulated industries and ISO/SOC compliance use cases. |
| TestLink | Open-source alternative. | Cost-sensitive teams with simple needs. |

2. Automated Testing Tools

Automation in enterprise QA spans unit, API, UI, and end-to-end testing; the tools below can be used on their own or combined into one stack.

| Tool | Primary Use | Enterprise Strengths |
| --- | --- | --- |
| Selenium | Web UI testing | Widely adopted, strong ecosystem, and supports most browsers. |
| Playwright | Cross-browser automation | Fast execution, auto-waiting, and better for modern JavaScript apps. |
| TestComplete | UI and keyword testing | Low-code interface, and strong for non-technical QA users. |
| Cypress | Frontend and integration testing | Great for modern web apps. |
| Postman / REST-assured | API testing and automation | Easy to use and ideal for microservice-heavy architectures. |

3. Performance and Load Testing Solutions

These tools assess system scalability, responsiveness, and failure thresholds under real-world or simulated stress.

| Tool | Use Case | Enterprise Features |
| --- | --- | --- |
| Apache JMeter | Load and performance testing | Open-source, extensible, strong community, and supports distributed tests. |
| Gatling | Load testing at scale | Scala-based, CI/CD friendly, and detailed visual reports. |
| LoadRunner | End-to-end performance testing | Mature platform that supports a wide range of protocols. |
| k6 by Grafana | Scriptable load testing | Integrates well with major monitoring tools. |
| BlazeMeter | Cloud-based performance testing | Scalable, and integrates with JMeter. |

4. Security Testing Tools

Enterprise security testing must cover static, dynamic, and runtime analysis of applications, services, and infrastructure.

| Tool | Type | Enterprise Capabilities |
| --- | --- | --- |
| OWASP ZAP | Dynamic analysis (DAST) | Free, powerful scanning engine, and automated spidering. |
| Burp Suite | Manual and automated testing | Ideal for web apps and APIs. |
| Snyk | SCA and container security | Dev-first and integrates with Git, Docker, and Kubernetes. |
| Checkmarx | Static analysis (SAST) | Code scanning for compliance-heavy organizations. |

Implementing Continuous Testing in Enterprise CI/CD Pipelines

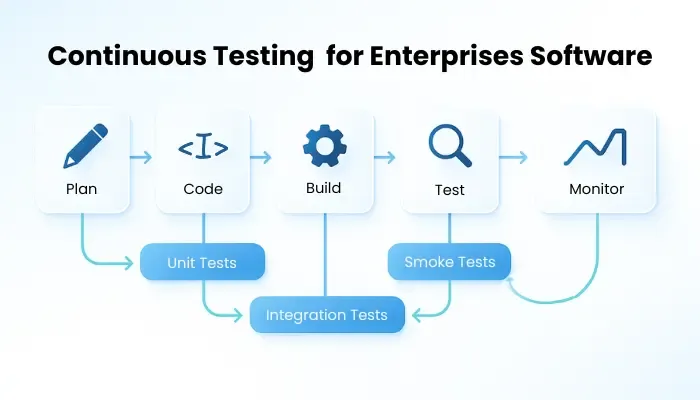

Let’s dive into the rollercoaster world of continuous testing in CI/CD pipelines—because squeezing quality into a workflow that moves at lightspeed takes both tech and culture shifts.

DevOps isn’t just a buzzword. It’s the glue binding devs, ops, and QA into one team. Testing isn’t a handoff—it’s everyone’s job, from the first commit to production monitoring. And continuous feedback loops? They’re your real-time mirrors, helping you steer clear of disaster before it hits.

- Stitching Tests into CI/CD Workflows: Think of your pipeline as a relay race. Each stage passes the baton, with testing baked in at every handoff:

  - Version Control: Every push or PR starts the chain. No commit escapes untested.

  - Continuous Integration: Builds run, unit tests sprint, linters clean up code smells, and dependency scans catch vulnerabilities.

  - Continuous Delivery: Integration tests, API checks, security scans, and post-deploy smoke tests ensure each release is ready for action.

- Mastering Test Orchestration: Automation is powerful, but without orchestration, tests clash and waste time. Here’s how to keep things smooth:

  - Group and Prioritize: Organize tests by risk, speed, or area.

  - Run in Parallel: Use containers or cloud grids to run test suites side by side.

  - Test Smarter: Use change impact analysis to only run what matters.

  - Prep and Clean Up: Spin up mocks, fixtures, or services at the start, and clean everything after to avoid flaky leftovers.

- Juggling Test Environments: Your test environment is like an amusement park. If one ride breaks, the whole experience suffers. Keep it running with:

  - Containers and VMs: Package apps and dependencies so you can deploy consistently, anywhere.

  - Service Virtualization: Stub out third-party APIs and paid services using tools like WireMock, Mountebank, or Parasoft.

  - Flaky Test Triage: Track and quarantine unstable tests fast. Confidence drops when “green” doesn’t mean “good.”

- Balancing Speed and Quality: You can’t have a drag race and an obstacle course at the same time, unless you plan for both.

  - Follow the test pyramid: Mostly fast unit tests, a few integration tests, and limited E2E.

  - Test what matters: Run only the relevant slices on each push.

  - Set release gates: Enforce test pass criteria, coverage thresholds, or mutation scores before merging or deploying.

  - Make it fast: Use parallel shards, smart test selection, and slim test scripts.
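To make the release-gate idea from the list above a little more concrete, here is a minimal sketch in Python. The class names, field names, and threshold values are all assumptions made up for illustration; a real pipeline would read these numbers from its own test, coverage, and mutation-testing reports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateThresholds:
    """Hypothetical thresholds; tune them to your own risk tolerance."""
    min_pass_rate: float = 1.0        # fraction of tests that must pass
    min_line_coverage: float = 0.80   # fraction of lines covered by tests
    min_mutation_score: float = 0.60  # fraction of mutants killed


@dataclass(frozen=True)
class BuildMetrics:
    """Numbers a CI job would collect from its reports (illustrative shape)."""
    tests_run: int
    tests_passed: int
    line_coverage: float
    mutation_score: float


def evaluate_gate(metrics, thresholds=GateThresholds()):
    """Return a list of violations; an empty list means the gate is open."""
    violations = []

    # A build that ran no tests is treated as failing, not as vacuously green.
    pass_rate = metrics.tests_passed / metrics.tests_run if metrics.tests_run else 0.0
    if pass_rate < thresholds.min_pass_rate:
        violations.append(
            f"pass rate {pass_rate:.2%} is below {thresholds.min_pass_rate:.2%}"
        )
    if metrics.line_coverage < thresholds.min_line_coverage:
        violations.append(
            f"line coverage {metrics.line_coverage:.2%} is below "
            f"{thresholds.min_line_coverage:.2%}"
        )
    if metrics.mutation_score < thresholds.min_mutation_score:
        violations.append(
            f"mutation score {metrics.mutation_score:.2%} is below "
            f"{thresholds.min_mutation_score:.2%}"
        )
    return violations


if __name__ == "__main__":
    build = BuildMetrics(tests_run=200, tests_passed=198,
                         line_coverage=0.75, mutation_score=0.70)
    for problem in evaluate_gate(build):
        print("GATE FAILED:", problem)
```

Returning every violation, instead of stopping at the first one, gives developers the full picture in a single pipeline run, which fits the fast-feedback goal described above.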

Continuous testing isn’t a rubber stamp at the end of a sprint—it’s the pulse of a healthy CI/CD pipeline. When devs, ops, and QA are in sync, environments stay stable, and testing is orchestrated right, you can deliver fast and deliver quality. No trade-offs needed.
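Two of the speed techniques mentioned above, change-based test selection and parallel shards, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the file paths, the `COVERAGE_MAP` contents, and the function names are hypothetical, and real tools usually derive the file-to-test mapping from coverage data or build graphs.

```python
import hashlib

# Hypothetical map from source files to the tests that exercise them.
COVERAGE_MAP = {
    "billing/invoice.py": {"tests/test_invoice.py", "tests/test_checkout.py"},
    "billing/tax.py": {"tests/test_tax.py", "tests/test_checkout.py"},
    "auth/login.py": {"tests/test_login.py"},
}

# The full suite is the union of every mapped test.
ALL_TESTS = set().union(*COVERAGE_MAP.values())


def select_tests(changed_files, coverage_map, full_suite):
    """Pick tests affected by a change; run everything if a file is unmapped."""
    selected = set()
    for path in changed_files:
        if path not in coverage_map:
            # Unknown impact: be conservative and fall back to the full suite.
            return set(full_suite)
        selected |= coverage_map[path]
    return selected


def shard_for(test_id, shard_count):
    """Assign a test to a shard using a stable hash, so runs are repeatable."""
    digest = hashlib.sha256(test_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % shard_count


def split_into_shards(tests, shard_count):
    """Distribute tests across shard_count buckets for parallel workers."""
    shards = [[] for _ in range(shard_count)]
    for test_id in sorted(tests):
        shards[shard_for(test_id, shard_count)].append(test_id)
    return shards


if __name__ == "__main__":
    to_run = select_tests(["billing/tax.py"], COVERAGE_MAP, ALL_TESTS)
    for index, shard in enumerate(split_into_shards(to_run, 2)):
        print(f"shard {index}: {shard}")
```

Hash-based sharding keeps each test on the same worker between runs, which makes failures easier to reproduce. It does not balance by test duration, so a production setup would likely weight shards by historical run time.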

Emerging Technologies in Enterprise Testing

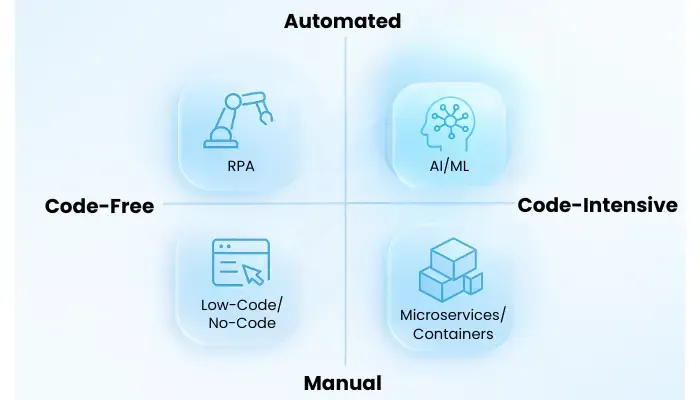

Let’s talk about what’s coming around the bend—because if you’re still running last year’s stack, you’ll miss the train. Enterprise testing is evolving fast, and staying ahead means embracing the tech that’s already changing the game.

- AI and ML in Test Automation: AI and ML aren’t just hype; they’re your sleepless QA assistant. Machine learning models can crunch test history to find weak spots and generate new test cases automatically. They prune flaky tests and flag redundant ones, keeping your suite lean. Self-healing frameworks? They fix broken locators when your UI shifts, saving you from brittle failures. And AI-powered test reports? They scan logs and tracebacks to suggest likely root causes before you’ve even started digging.

- Robotic Process Automation (RPA) for Testing: RPA tools act like tireless bots for repetitive tasks. They’re great for UI-heavy regression tests, smoke checks, or data entry flows. And when modern frameworks struggle with legacy systems, RPA shines: it can drive old-school interfaces with pixel-level precision.

- Low-Code/No-Code Testing: Not everyone on your team needs to write code. LCNC tools like Katalon, Leapwork, TestSigma, and ACCELQ let testers (and even PMs) create automated flows using drag-and-drop UIs or Gherkin-style scripts. The result? Faster test development, less dependency on engineering, and smoother cross-team collaboration.

- Microservices and Container-Based Testing: Microservices give you modular freedom, but testing them gets tricky. You’ll need integration tests with service mocks or virtual APIs to make sure each piece talks to the others cleanly. On the container side, it’s about scanning images, checking configs, and running runtime validations to confirm your services behave inside their sandboxed environments.

- Blockchain App Testing: Blockchain apps bring security, immutability, and complexity. Smart contracts need deep testing for things like reentrancy bugs, overflows, or logic flaws. Tools like MythX, Slither, and Chaincode analyzers help. Then there’s performance testing: can your app handle high transaction volume? And integration: does it sync correctly with off-chain systems and APIs?
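The self-healing locator idea mentioned under AI and ML above can be illustrated with a deliberately simple, rule-based sketch. Commercial tools typically rely on learned similarity models; this version only falls back through an ordered list of alternative locators. The `Element` type, `find_by` helper, and `HealingLocator` class are hypothetical stand-ins, not part of Selenium, Playwright, or any other framework.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Toy stand-in for a DOM element (not a real browser-automation type)."""
    tag: str
    attrs: dict
    text: str = ""


def find_by(page, key, value):
    """Return the first element whose attribute (or text) matches, else None."""
    for element in page:
        actual = element.text if key == "text" else element.attrs.get(key)
        if actual == value:
            return element
    return None


@dataclass
class HealingLocator:
    name: str
    candidates: list  # ordered (key, value) pairs, most trusted first
    healed_log: list = field(default_factory=list)

    def locate(self, page):
        for index, (key, value) in enumerate(self.candidates):
            element = find_by(page, key, value)
            if element is None:
                continue
            if index > 0:
                # The primary locator broke: record the swap for human review
                # and promote the locator that worked to the front.
                self.healed_log.append((self.candidates[0], (key, value)))
                self.candidates.insert(0, self.candidates.pop(index))
            return element
        raise LookupError(f"no candidate locator matched for {self.name!r}")


if __name__ == "__main__":
    # The button's id changed between releases; its data-test hook did not.
    page_v2 = [Element("button", {"id": "btn-primary",
                                  "data-test": "checkout-submit"}, "Place order")]
    submit = HealingLocator("submit button", [
        ("id", "submit-btn"),
        ("data-test", "checkout-submit"),
        ("text", "Place order"),
    ])
    print(submit.locate(page_v2).attrs["id"])
    print(submit.healed_log)
```

Logging each heal matters as much as the fallback itself: a locator that silently repairs itself can hide a real UI regression, so the swaps should be reviewed and the test updated.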

Emerging tech isn’t an experiment—it’s your new standard. Whether it’s AI pruning your test suite, RPA bots handling legacy flows, low-code tools speeding up coverage, or blockchain-specific tests catching critical flaws, the future’s not coming—it’s here.

If your testing strategy isn’t evolving, your release pipeline is falling behind. So lean in, level up, and let tomorrow’s tools help you test smarter today.

Conclusion

Comprehensive enterprise software testing is not optional; it is a necessity that benefits the entire organization. With the right testing, you can achieve faster release cycles, fewer defects in production, better customer experiences, more secure software, and lower long-term maintenance costs.

This article explored the main aspects of enterprise software testing, including functional and non-functional testing, shift-left strategies, test automation frameworks, CI/CD, and emerging technologies in this space.

Initially, the cost and effort of testing may seem overwhelming. However, the long-term return usually outweighs the upfront investment: thorough testing reduces the number of bugs, lowers the risk of legal penalties, and cuts the cost of bug fixing and manual effort.

If comprehensive testing seems too complex, the best approach is to outsource testing to a reputable enterprise software testing service provider.

Why Partner with ThinkSys?

- Proven Experience: With over a decade of hands-on experience, we’ve delivered testing solutions for some of the most complex enterprise systems—in finance, healthcare, e-commerce, and more.

- Real Results: Our clients consistently see 30–50% reductions in test cycle time, along with clear gains in software quality, stability, and user satisfaction.

- Flexible Partnership: We don’t just test—we integrate with your team. Whether you need onshore, offshore, or hybrid support, we adapt to your delivery model and budget.

Frequently Asked Questions (FAQs)

- Repetitive and stable

- High-risk or high-impact

- Core to revenue or critical workflows

- Performance- or volume-driven

- Data-driven or multi-configuration scenarios

- Better quality and reliability

- Lower defect-fix costs

- Stronger collaboration

- Early bug detection

- Built-in unit and API tests in CI/CD

- Defining clear pass/fail gates in your pipeline

- Giving devs instant, actionable feedback

- Prioritizing risk-based tests

- Automating the right tests

- Promoting a quality-first culture

- Test Management: Jira, Xray, qTest, Zephyr

- Automation: Selenium, Cypress, Playwright, TestComplete

- API Testing: Postman, SoapUI

- Performance: JMeter, Gatling, LoadRunner

- Security: OWASP ZAP, Burp Suite

- Defect leakage rate

- Cycle time reduction

- Test coverage rate

- Manual vs. automation effort

- Release frequency

- Embedded Agile/DevOps: QA in cross‑functional squads

- Centralized QA CoE: Governance and specialized services

- Hybrid: Embedded teams plus CoE oversight

- Product‑focused: Testers dedicated to specific products or domains

- Inadequate test data management

- Over-reliance on manual testing

- Unstrategic blanket automation

- Siloed QA teams

- Skipping non-functional tests

- Testing too late in the SDLC

- Unit and component tests per service

- Service virtualization or mocks for dependencies

- Chaos experiments for resilience

- Extensive API contract and integration tests

- Intelligent test-case generation

- Self-healing automation scripts

- Anomaly detection

- Risk prediction from defect history

- Smarter test data mgmt and UI visual diff checks
