How ThinkSys QA Automation Helped Boostlingo Cut QA Time by 90%

For fast-moving tech companies, nothing feels more frustrating than knowing your product is ready but your release process is not. Time gets eaten up by repetitive checks. QA teams get slower. And every new feature launch feels like a gamble.

If you’ve ever felt held back by slow test cycles or limited coverage, this story will feel familiar.

In this case study, you’ll see how Boostlingo, an AI-powered interpretation platform, improved its testing workflow. We'll walk through the challenges they faced, the steps we took together, and the results that now let them ship new features faster, safer, and smarter with every release.

Meet Boostlingo

Boostlingo is a platform built for one purpose: breaking down language barriers at scale. They offer on-demand interpretation, multilingual event support, and AI-powered captioning in over 300 languages. Their solution helps healthcare systems, legal teams, and enterprises provide inclusive communication globally.

But while their product was helping the world communicate faster, their internal QA workflows were holding them back.

Here’s what wasn’t working:

- Manual Testing Slowed Everything Down: Each new release required 5–7 full days of manual testing to work through 1,200 test cases. The team had to check dozens of user roles and role combinations, which drained time and delayed delivery.

- Limited Coverage Meant Higher Risk: Because testing took so long, they had to skip edge cases and less-common workflows. Real-time features, like live calls, often went untested, raising the risk of bugs in production.

- Legacy Automation Was Weak and Outdated: They had automation in place using WebdriverIO, but it wasn’t scalable. The scripts were rigid, hard to maintain, and didn’t adapt well to product changes.

- Real-Time Features Were Painful to Test: Their app involved complex video/audio interactions requiring mic and camera access. These scenarios were tough to automate and even harder to test reliably by hand.

- The QA Team Was Overloaded: With a growing product and limited QA headcount, the team couldn’t keep up. Manual efforts were not enough, and the release process became a bottleneck.

Our Proposed Solution

After analyzing Boostlingo’s testing challenges, we outlined a plan to address each problem with targeted, scalable solutions. Here’s what we proposed:

- Switch to Playwright for a Modern Testing Stack: We recommended migrating from WebdriverIO to Playwright with TypeScript. This would give their test suite better speed, stability, and flexibility, all critical for a fast-growing, evolving application.

- Broaden Test Coverage with Modular Scripts: To tackle missed edge cases and roles, we suggested designing modular test components. This would allow easy expansion of coverage without rewriting everything from scratch each time.

- Automate Real-Time Call Flows with Simulations: For their mic/camera-heavy features, we proposed simulating audio/video inputs using fake media streams and virtual signaling. This approach would let us fully automate previously untestable scenarios.

- Use AI Tools to Accelerate Test Development: We suggested using AI coding assistants such as GitHub Copilot and Cursor to write large volumes of test scripts efficiently. These tools could spot errors, reduce repetition, and increase developer speed.

- Introduce Test Sharding for Parallel Execution: To reduce test run times, we proposed sharding, which splits the suite into smaller chunks that run in parallel. This would drastically cut execution time, enabling faster feedback and more frequent release cycles.

Results: What Changed After Implementation

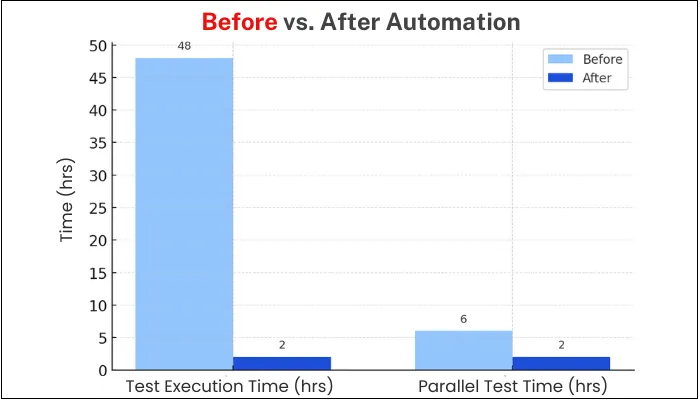

- Testing Time Dropped by 90%: What once took 5–7 days of manual effort now takes just 2 hours. Faster execution gave the team more time to test, iterate, and deploy.

- Test Coverage Expanded Across All Roles: Automated scripts now handle edge cases, real-time call flows, and all user combinations, something the client’s team couldn’t consistently manage before due to time and resource limits.

- Automation Became Reliable and Scalable: Using Playwright and TypeScript improved test stability. AI-assisted coding sped up new script creation and cut our development effort by 25%.

- Release Cycles Got Shorter and Safer: With faster and more complete test runs, Boostlingo now pushes features more frequently, without cutting corners or risking production bugs slipping through.

- Time and Cost Savings: Sharding further optimized the entire automation suite. Test runs that used to take 6 hours now finish in 2 hours without any failures. This level of optimization saved our client both time and money.

“I honestly did not know what to expect. This is very inspiring. Great job.” - Jake Orona, Senior QA Lead Engineer (Boostlingo)

Step-by-Step Implementation

Step 1: Migrating to a Modern Testing Stack

We started by replacing their legacy WebdriverIO setup with Playwright and TypeScript. This upgrade offered improved performance, better debugging tools, and more flexibility.

During the migration, we focused on script readability, modular structure, and long-term maintainability. A key challenge was ensuring feature parity: translating older tests without losing coverage. We also introduced standardized coding patterns to reduce inconsistencies and made sure the new stack was aligned with their existing CI/CD workflows.
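Much of how a migrated suite fits into CI/CD comes down to configuration. The snippet below is an illustrative `playwright.config.ts`, a sketch rather than the project's actual file; the `./tests` directory, the `BASE_URL` variable, and the retry count are assumptions. It uses standard Playwright options to fail CI on a stray `test.only`, retry only on CI, and record a trace for debugging whenever a test is retried.

```typescript
// playwright.config.ts: an illustrative sketch, not the client's real configuration.
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  testDir: './tests', // assumed location of the migrated specs
  // Fail the CI run if a focused `test.only` is committed by accident.
  forbidOnly: !!process.env.CI,
  // Retry only on CI; locally, a failure shows up immediately.
  retries: process.env.CI ? 2 : 0,
  // Console output for developers plus an HTML report that CI can archive.
  reporter: [['list'], ['html', { open: 'never' }]],
  use: {
    // BASE_URL is a hypothetical variable pointing at the environment under test.
    baseURL: process.env.BASE_URL ?? 'http://localhost:3000',
    // Record a trace (DOM snapshots, network, console) on the first retry of a failed test.
    trace: 'on-first-retry',
  },
  projects: [{ name: 'chromium', use: { ...devices['Desktop Chrome'] } }],
});
```

Recorded traces open in Playwright's Trace Viewer (for example from the HTML report), which replays each test step with DOM snapshots.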

Step 2: Designing Reusable and Scalable Test Architecture

Next, we built a scalable test architecture to handle the growing number of user roles and workflows. We created reusable helper functions and page object models, making the suite easy to extend without rewriting logic. To prevent technical debt, we added linting, folder-level organization, and custom logging.

We ensured that any team member, technical or not, could understand how tests were built, maintained, and extended. This architecture became the foundation for future coverage expansion.
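The idea of extending coverage "without rewriting logic" can be illustrated with a small data-driven helper. This is a hypothetical sketch in plain TypeScript, not code from the project: the role names, workflow names, and the `buildRoleMatrix` helper are all invented for illustration. It expands lists of roles and workflows into one test case per combination and marks combinations the app should refuse, so they become negative tests.

```typescript
// Hypothetical roles and workflows, used only to illustrate the pattern.
type Role = 'admin' | 'interpreter' | 'client';
type Workflow = 'schedule-call' | 'join-call' | 'view-history';

interface RoleCase {
  role: Role;
  workflow: Workflow;
  allowed: boolean; // whether the role should be permitted to run the workflow
  title: string; // human-readable test name
}

// Combinations the app should reject; these become access-denied tests.
const deniedAccess: Partial<Record<Workflow, Role[]>> = {
  'schedule-call': ['interpreter'],
};

// Expand every role x workflow pair into one test case, so adding a role or a
// workflow extends coverage without writing new test logic.
export function buildRoleMatrix(
  roles: readonly Role[],
  workflows: readonly Workflow[],
): RoleCase[] {
  const cases: RoleCase[] = [];
  for (const workflow of workflows) {
    for (const role of roles) {
      const allowed = !deniedAccess[workflow]?.includes(role);
      cases.push({ role, workflow, allowed, title: `${role} ${allowed ? 'can' : 'cannot'} ${workflow}` });
    }
  }
  return cases;
}

export const matrix = buildRoleMatrix(
  ['admin', 'interpreter', 'client'],
  ['schedule-call', 'join-call', 'view-history'],
);
console.log(matrix.length); // 9
```

In a Playwright spec, each entry could drive one generated test by looping over `matrix` and calling `test(c.title, ...)`, with shared page objects performing the actual UI steps.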

Step 3: Automating Real-Time Scenarios with Simulated Environments

One of the biggest hurdles was testing real-time audio/video call flows. These required mic and camera access, which can’t easily be automated using real hardware.

We overcame this by integrating fake media streams and simulating signaling systems to mimic real-world behavior. This allowed full automation of call flows, even under edge-case conditions. We also tested under various browser/device combinations to ensure reliability. We made sure simulations worked consistently across environments and CI pipelines.
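Chromium ships command-line switches for this kind of simulation. The config below is a minimal sketch, assuming Chromium-based test runs, of how fake media devices can be enabled in Playwright; it is not the setup used on this project, and other browsers need their own equivalents. With these options, `getUserMedia()` calls succeed against synthetic camera and microphone input instead of real hardware. The commented-out `fixtures/caller.y4m` path is hypothetical.

```typescript
// playwright.config.ts: a sketch of a Chromium project with fake media devices.
import { defineConfig, devices } from '@playwright/test';

export default defineConfig({
  projects: [
    {
      name: 'chromium-fake-media',
      use: {
        ...devices['Desktop Chrome'],
        // Grant mic/camera up front so no permission prompt blocks the test.
        permissions: ['camera', 'microphone'],
        launchOptions: {
          args: [
            // Replace real devices with Chromium's synthetic camera and microphone.
            '--use-fake-device-for-media-stream',
            // Auto-accept media permission prompts as an extra safeguard.
            '--use-fake-ui-for-media-stream',
            // Optional: feed a recorded clip instead of the default test pattern.
            // '--use-file-for-fake-video-capture=fixtures/caller.y4m',
          ],
        },
      },
    },
  ],
});
```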

Step 4: Accelerating Development Using AI Tools

To scale faster, we used tools like GitHub Copilot and Cursor. These AI assistants helped us write test scripts and repetitive test cases, clean up old logic, fix typos, and suggest optimized code.

They were especially helpful for bulk editing across files, saving hours of effort. We still reviewed every suggestion for accuracy, but using AI meant we could focus more on complex logic instead of boilerplate code. The result was 25% faster delivery without compromising code quality.

Step 5: Speeding Up Execution with Sharding and Parallelization

Finally, we implemented test sharding, splitting the suite so it runs in parallel across multiple workers. This brought execution time down from 6 hours to under 2.

We integrated this setup into their CI pipeline so every pull request gets fast feedback.

While setting this up, we made sure tests were isolated and stateless, so they wouldn’t interfere with each other when run in parallel. This step was crucial to saving our client time and money: the suite now runs faster without them spending more.
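In Playwright, parallelism happens at two levels: worker processes on one machine, and shards spread across separate CI jobs. The sketch below shows the relevant config options; it is not the client's file, and the worker count of 4 is an assumed value. `fullyParallel: true` lets tests within the same file run concurrently, which is only safe when tests are isolated and stateless.

```typescript
// playwright.config.ts: parallelism settings only; an illustrative sketch.
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Run tests inside each file in parallel, not just separate files.
  fullyParallel: true,
  // Worker processes per machine: 4 on CI is an assumed value; locally Playwright picks a default.
  workers: process.env.CI ? 4 : undefined,
});
```

Each CI job then runs one slice of the suite with Playwright's `--shard` flag, for example `npx playwright test --shard=1/3`, `--shard=2/3`, and `--shard=3/3` on three jobs (three is an assumed shard count). With `fullyParallel` enabled, Playwright can balance individual tests across shards rather than whole files.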

Conclusion

You’ve just seen how ThinkSys helped Boostlingo move from a slow, manual QA process to a fast, intelligent, and fully automated testing pipeline built on Playwright. If you’re struggling with long release cycles, limited test coverage, or outdated tools, you’re not alone, and you don’t have to keep pushing through the pain.

ThinkSys helps growing teams test smarter, ship faster, and scale with confidence. If these challenges sound familiar, let’s talk about how we can help you next.
