How Modern Businesses Are Using AI for Performance Testing, and Why You Should Too

Imagine the frustration when your carefully developed application fails under peak load, or when performance bottlenecks go unnoticed until it's too late. These issues undermine user experience and damage your brand's reputation. The manual effort involved in traditional testing adds inconsistencies and errors of its own.

Traditional performance testing methods often fall short, struggling with scalability, accuracy, and the fast pace of technological change. Companies waste valuable time and resources trying to keep up, only to encounter more hurdles and inefficiencies.

Enter Artificial Intelligence (AI). AI is transforming performance testing by automating complex processes, improving accuracy, and reducing the time and resources needed.

This blog explores AI-driven performance testing, showcasing powerful tools, offering tips, and highlighting best practices to elevate your test efficiency and effectiveness. So let's get started.

Understanding AI in Performance Testing

AI in performance testing uses machine learning, neural networks, and other AI techniques to automate and enhance the testing process. Unlike traditional methods, AI involves intelligent algorithms that learn from data, adapt to changing environments, and automate complex tasks. This dynamic and responsive testing allows AI models to predict issues, optimize test scenarios, and self-correct based on real-time data.

Why Use AI for Performance Testing?

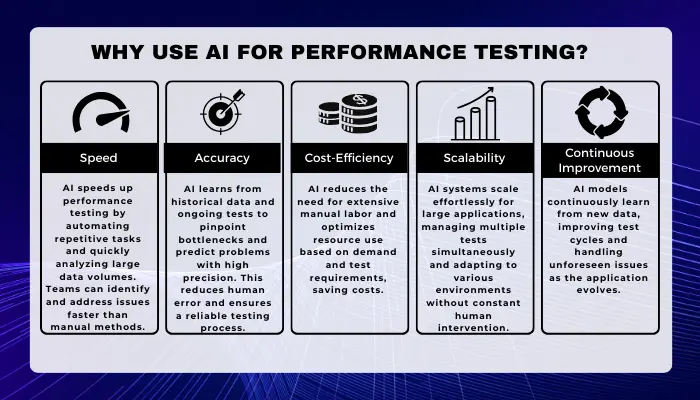

- Speed: AI speeds up performance testing by automating repetitive tasks and quickly analyzing large data volumes. Teams can identify and address issues faster than they could with manual methods.

- Accuracy: AI learns from historical data and ongoing tests to pinpoint bottlenecks and predict problems with high precision. This reduces human error and ensures a reliable testing process.

- Cost-Efficiency: AI reduces the need for extensive manual labor and optimizes resource use based on demand and test requirements, saving costs.

- Scalability: AI systems scale quickly to accommodate large and complex applications. They manage and execute multiple tests simultaneously, adapting to different environments and configurations without constant human intervention.

- Continuous Improvement: AI models continuously learn from new data, improving test cycles and handling unforeseen issues as the application evolves.

Current Trends in AI Performance Testing

- Predictive Analysis: Companies use AI to predict how changes will affect application performance. This proactive optimization prevents issues from affecting users.

- Self-Healing Systems: AI-driven systems automatically detect and fix performance issues without human intervention, reducing downtime.

- Intelligent Test Generation: AI generates test cases based on user behavior and application data, ensuring relevant tests that cover various scenarios.

- Cloud-Based AI Testing: With cloud computing, many AI performance testing tools are now cloud-based, offering greater flexibility and accessibility for remote testing and resource scaling.

- Integration with DevOps: AI integrates into DevOps practices for continuous performance testing, maintaining high performance throughout the software development lifecycle.
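To make the DevOps integration point more concrete, here is a minimal sketch of a performance gate that a pipeline step could run after each load test. It is a plain statistical baseline check, the simplest form of "learning from earlier runs", and not a feature of any particular tool. The function names, the 10% tolerance, and the input format are assumptions made for illustration.

```python
import statistics

def p95(samples):
    """Return the 95th percentile of a list of latency samples (ms)."""
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20, method="inclusive")[-1]

def performance_gate(baseline_runs, current_run, tolerance=0.10):
    """Pass if the current p95 is within `tolerance` of the historical p95.

    baseline_runs: earlier runs, each a list of latencies in ms (hypothetical format).
    current_run:   latencies from the build under test.
    """
    # The median of past p95 values is less sensitive to one unusually bad run.
    baseline_p95 = statistics.median(p95(run) for run in baseline_runs)
    current_p95 = p95(current_run)
    limit = baseline_p95 * (1 + tolerance)
    return {
        "baseline_p95": baseline_p95,
        "current_p95": current_p95,
        "passed": current_p95 <= limit,
    }
```

A pipeline could fail the build when `passed` is false. A learned model could later replace the fixed tolerance without changing the shape of the check.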

Key Pain Points in Traditional Performance Testing

1. High Resource Consumption and Potential for Human Error:

Traditional performance testing relies heavily on manual processes, from setting up test environments to analyzing results. This consumes significant time and resources and introduces human error. Manually configuring test scenarios for diverse user loads can be inconsistent and error-prone. The repetitive nature of these tasks leads to tester fatigue, affecting accuracy and reliability. Businesses spend more on human resources, and lengthy processes delay product launches and updates.

2. Challenges in Handling Large-Scale Applications:

As applications grow, traditional performance testing struggles to scale efficiently. Simulating real-world traffic and usage patterns for thousands or millions of users requires a robust, scalable testing infrastructure, which is costly and complex. Traditional methods may fail to predict performance under peak loads or sudden spikes in user activity, leading to potential downtime and loss of customer trust.

3. Delays in Identifying Performance Bottlenecks:

Traditional performance testing methods are often slow to identify and resolve performance bottlenecks. The cycle of setting up tests, running them, and analyzing data can take days or weeks, especially for large applications. This slow turnaround makes it difficult for businesses to respond quickly to performance issues, affecting their ability to iterate rapidly and stay competitive. Bottlenecks may only become apparent after users are affected, damaging a company's reputation and user experience.

4. Inconsistent Results Due to Varying Test Conditions:

Real-world environments are dynamic, making it challenging for traditional performance testing to deliver consistent and accurate results. Variations in network conditions, server loads, and user behavior, along with even minor application changes, can significantly affect performance test outcomes. This inconsistency makes it difficult for businesses to make informed optimization decisions. Traditional methods often lack adaptability, leading to a disconnect between test results and actual user experiences and undermining the credibility of testing.

AI-Driven Solutions for Performance Testing

- How AI Automates Performance Data Analysis: AI automates performance data analysis by handling repetitive and complex tasks. Traditional methods require manual intervention to comb through data, identify patterns, and interpret results, which is time-consuming and prone to error. AI uses algorithms to process and analyze vast datasets quickly. For example, AI can automatically detect anomalies in metrics like response time, throughput, and error rates. Machine learning models identify these irregularities faster than humans, leading to quicker troubleshooting and optimization.

- Using AI to Predict Potential Performance Issues: Predictive analytics is a powerful AI application in performance testing. By analyzing historical data, AI models forecast potential performance bottlenecks before they impact user experience. This proactive approach allows teams to address issues early, reducing downtime and improving application robustness. For instance, AI can predict a system's load capacity during peak usage, enabling teams to scale resources accordingly and prevent crashes or slowdowns.

- AI-Enabled Systems that Adapt and Fix Performance Issues in Real-Time: Self-healing systems use AI to detect and correct performance issues without human intervention. Through continuous monitoring and learning, these systems identify the root cause of a problem, apply a fix, and verify the solution in real-time. For example, if a memory leak causes an application slowdown, a self-healing system adjusts memory allocation on the fly to stabilize performance. This automation ensures applications run optimally, with minimal downtime and intervention.

- Machine Learning, Neural Networks, and Their Applications in Performance Optimization: Machine learning models are trained on historical performance data to recognize patterns and predict outcomes. These models adapt to new data, continuously improving their predictions and responses. Neural networks, a subset of machine learning, are effective at identifying complex patterns in large datasets. Loosely inspired by the way neurons in the brain connect, they can model intricate relationships in data, which improves the ability to pinpoint and resolve performance issues. Together, these techniques help detect problems and optimize application performance for better user experiences, efficiency, and cost savings.
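As a rough illustration of the anomaly detection idea described above, the sketch below flags unusual response times using a modified z-score based on the median and the median absolute deviation (MAD). This is a classical statistical technique standing in for a trained model. The function name, the 3.5 threshold, and the sample data are assumptions chosen for the example.

```python
import statistics

def find_anomalies(response_times_ms, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds `threshold`.

    Uses the median and the median absolute deviation (MAD), which are less
    distorted by the outliers themselves than the mean and standard deviation.
    """
    median = statistics.median(response_times_ms)
    deviations = [abs(x - median) for x in response_times_ms]
    mad = statistics.median(deviations)
    if mad == 0:
        # At least half the samples equal the median; no spread to score against.
        return []
    anomalies = []
    for i, value in enumerate(response_times_ms):
        modified_z = 0.6745 * (value - median) / mad
        if abs(modified_z) > threshold:
            anomalies.append((i, value))
    return anomalies

# Made-up response times in milliseconds with one obvious spike.
samples = [120, 118, 125, 122, 119, 121, 950, 123, 117, 124]
print(find_anomalies(samples))  # [(6, 950)]
```

The same function could be applied to throughput or error-rate series. A production system would more likely use a model that accounts for seasonality and load level.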

Top AI Tools for Performance Testing

This section introduces leading AI tools for performance testing and explores their features, advantages, drawbacks, and applications.

1. Applitools:

- Features:
  - Visual AI: Automates visual testing by comparing screenshots to baseline images.
  - Root Cause Analysis: Identifies issues by analyzing the DOM and visual changes.
  - Cross-Platform Compatibility: Supports various browsers, devices, and operating systems.

- Pros:
  - High accuracy in detecting visual discrepancies.
  - Reduces testing time by automating repetitive visual checks.
  - Integrates seamlessly with popular testing frameworks like Selenium and Cypress.

- Cons:
  - Requires a solid baseline for accurate comparisons.
  - Can be resource-intensive for large-scale applications.
  - Learning curve for teams new to visual testing.

- Use Cases:
  - E-commerce Platforms: Ensuring consistent user experience across devices and browsers.
  - Mobile Apps: Detecting UI inconsistencies in app updates.
  - Web Applications: Maintaining branding consistency during rapid development cycles.

2. Testim:

- Features:
  - AI-Powered Smart Locators: Uses machine learning to locate elements reliably, reducing test flakiness.
  - Codeless Test Creation: Enables testers to create tests visually without coding.
  - Test Suite Management: Offers tools to organize, run, and analyze tests efficiently.

- Pros:
  - Simplifies test creation and maintenance, especially for non-developers.
  - Enhances test stability and reduces maintenance overhead.
  - Allows easy integration with CI/CD pipelines.

- Cons:
  - Limited customization for complex test scenarios.
  - Can become costly for large teams or extensive test suites.
  - Performance may vary based on web application complexity.

- Use Cases:
  - Agile Development Teams: Quickly create and update tests in fast-paced environments.
  - Quality Assurance Departments: Streamlining test workflows and collaboration.
  - Startups and SMEs: Implementing reliable testing without large development overhead.

3. Functionize:

- Features:
  - NLP Test Creation: Allows testers to write tests in natural language, converting them into executable tests.
  - Adaptive Event Analysis: Uses AI to understand user interactions and adjust tests.
  - Cloud-Based Execution: Provides scalable testing environments in the cloud.

- Pros:
  - Enables rapid test development with natural language processing.
  - Offers high scalability for performance testing large applications.
  - A continuous learning model improves test accuracy.

- Cons:
  - Dependency on cloud infrastructure may raise concerns for sensitive data.
  - NLP-based tests require clear and precise language.
  - Initial setup and integration can be complex for legacy systems.

- Use Cases:
  - Large Enterprises: Conducting performance tests on complex systems with varied user scenarios.
  - Tech Companies: Using AI to predict and adapt to user behaviors in real time.
  - Government and Healthcare: Ensuring robust performance under high-demand situations.

AI Performance Testing Tools Comparison:

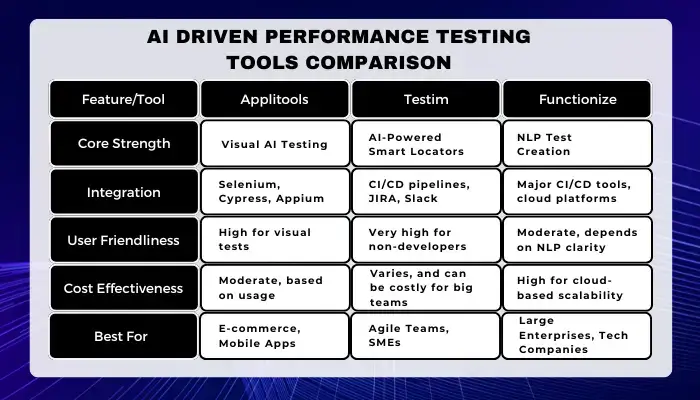

| Feature/Tool | Applitools | Testim | Functionize |
|---|---|---|---|
| Core Strength | Visual AI testing | AI-powered smart locators | NLP test creation |
| Integration | Selenium, Cypress, Appium | CI/CD pipelines, JIRA, Slack | Major CI/CD tools, cloud platforms |
| User Friendliness | High for visual tests | Very high for non-developers | Moderate, depends on NLP clarity |
| Cost Effectiveness | Moderate, based on usage | Varies, can be costly for big teams | High for cloud-based scalability |
| Best For | E-commerce, mobile apps | Agile teams, SMEs | Large enterprises, tech companies |

Tips for Effective AI Performance Testing

- How to Integrate AI Tools with Existing Testing Frameworks: Integrating AI tools into existing performance testing frameworks is crucial for a seamless transition. Assess your current framework to understand its strengths and weaknesses. Identify areas where AI can provide the most value, such as automating repetitive tasks or analyzing complex data. Select compatible AI tools with APIs or plugins that facilitate integration. Conduct pilot tests to ensure the integration does not disrupt existing workflows. Train your team on how to use the new tools and monitor the integration process to adjust and optimize as needed.

- Tailoring AI Tools to Specific Project Needs: Every project has unique requirements, and AI tools should be customized to meet these needs. Define the specific goals and metrics that matter most to your project. Use these metrics to configure AI tools to focus on critical areas, such as response time or user load handling. Customize the AI's algorithms to learn from your project's data, ensuring they detect and respond to relevant patterns and anomalies. Regularly update the configuration as project requirements evolve to keep the AI tools aligned with your testing objectives.

- Ensuring High-Quality Data for Training AI Models: The accuracy and efficiency of AI-driven performance testing depend on the quality of the data used to train AI models. Collect comprehensive and representative data from your application under various conditions. Clean and preprocess the data to remove outliers and inconsistencies that could affect the AI's learning. Use diverse data sources to avoid bias and ensure the AI model understands the application's performance under different scenarios. Regularly refresh the training data to keep the AI model current and accurate.

- Implementing Continuous Learning for AI Systems to Adapt and Improve: AI tools in performance testing should not be static; they need to continuously learn and adapt to changing application behaviors and requirements. Implement mechanisms for the AI to update its models continuously based on new data. This could involve retraining the AI at regular intervals or using online learning techniques where the AI adjusts in real-time. Encourage a feedback loop where the AI's findings refine the testing process and vice versa. This ensures that the AI remains relevant and effective over time.

- Developing Collaboration between AI and Human Testers: While AI can automate many aspects of performance testing, human insight and experience remain invaluable. Create a collaborative environment where AI tools and human testers work together. Use AI to handle routine tasks, freeing human testers to focus on complex and creative testing strategies. Encourage human testers to provide feedback on the AI's performance and suggest areas for improvement. Regularly conduct joint review sessions where both AI-generated insights and human observations are discussed to enhance the overall testing strategy.
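The continuous learning tip above mentions online techniques that adjust as new data arrives. One very small example of that idea is an exponentially weighted baseline that updates its mean and variance with every new measurement. The class name, the `alpha` smoothing factor, and the three-standard-deviation rule are assumptions for illustration, not settings from any specific tool.

```python
class OnlineBaseline:
    """Exponentially weighted running mean and variance of a metric."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha      # weight given to each new observation
        self.mean = None        # no baseline until the first observation
        self.variance = 0.0

    def update(self, value):
        """Fold one new observation into the baseline."""
        if self.mean is None:
            self.mean = float(value)
            return
        delta = value - self.mean
        self.mean += self.alpha * delta
        # Standard incremental form of the exponentially weighted variance.
        self.variance = (1 - self.alpha) * (self.variance + self.alpha * delta * delta)

    def is_unusual(self, value, k=3.0):
        """True if `value` is more than k standard deviations from the mean."""
        if self.mean is None or self.variance == 0.0:
            return False
        return abs(value - self.mean) > k * self.variance ** 0.5


# Made-up response times (ms) arriving one at a time.
baseline = OnlineBaseline(alpha=0.1)
for latency in [100, 102, 98, 101, 99, 100, 103, 97]:
    baseline.update(latency)
print(baseline.is_unusual(100), baseline.is_unusual(500))  # False True
```

Because older observations fade gradually, the baseline follows slow drifts in application behavior without a separate retraining step. Human reviewers can still tune `alpha` and `k` based on how many false alarms they see.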

Enhancing Test Efficiency with AI

1. Optimizing Resource Allocation Using AI

Efficient resource management is crucial for maintaining a competitive edge. AI revolutionizes this by enabling smarter allocation of testing resources. Machine learning algorithms analyze historical data and current system performance to predict the optimal distribution of resources, such as server bandwidth, computing power, and human expertise. This ensures that each system component receives adequate resources without wastage, leading to more streamlined and effective testing processes.
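A simplified sketch of this kind of prediction: fit a straight line to historical load-test results and estimate the number of concurrent users at which latency would reach a chosen limit. Real latency curves usually bend sharply near saturation, so the linear model is only an assumption made to keep the example short. The function names and the sample figures are likewise made up.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def users_at_latency_limit(users, latency_ms, limit_ms):
    """Estimate the concurrent-user count at which latency reaches limit_ms."""
    slope, intercept = fit_line(users, latency_ms)
    if slope <= 0:
        return None  # latency does not grow with load in this data
    return (limit_ms - intercept) / slope

# Hypothetical results from four earlier load tests.
users = [100, 200, 300, 400]
latency_ms = [120, 140, 160, 180]
estimate = users_at_latency_limit(users, latency_ms, limit_ms=300)
print(round(estimate))  # about 1000 users for this made-up data
```

An estimate like this can guide how much server capacity to provision before a peak period, with the caveat that it should be re-checked against an actual test near the predicted limit.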

2. Shortening Test Cycles and Time-to-Market

Accelerating time-to-market while ensuring product quality is a pressing challenge. AI in performance testing shortens test cycles by automating complex test scenarios and learning from past tests to identify and focus on critical areas quickly. This automation speeds up the testing process and reduces human error, ensuring software products can be released faster with confidence in their performance.
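One simple way to "learn from past tests" is to run the scenarios that failed most often first, so problems surface early in the cycle. The sketch below uses a plain failure-rate heuristic in place of a trained model. The function name, the history format, and the scenario names are hypothetical.

```python
def prioritize_scenarios(history):
    """Order scenarios so the ones that failed most often in the past run first.

    history: dict mapping scenario name -> list of booleans (True = passed).
    """
    def failure_rate(name):
        runs = history[name]
        if not runs:
            return 1.0  # no history yet: treat as highest risk and run first
        failures = sum(1 for passed in runs if not passed)
        return failures / len(runs)

    # Sort by failure rate, highest first; ties are broken by name.
    return sorted(history, key=lambda name: (-failure_rate(name), name))

# Made-up pass/fail history for four scenarios.
history = {
    "checkout": [True, False, False, True],
    "search": [True, True, True, True],
    "login": [True, True, False, True],
    "new_report": [],
}
print(prioritize_scenarios(history))
# ['new_report', 'checkout', 'login', 'search']
```

A fuller approach would also weigh which code changed in the build and how long each scenario takes to run.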

3. Lowering Costs Through AI Automation

Cost reduction is a key benefit of AI in performance testing. By automating routine and repetitive tasks, AI reduces the need for extensive manual labor, cutting labor costs. AI-driven predictive analytics prevent costly performance failures by identifying potential issues before they escalate. This proactive approach minimizes downtime and reduces expenses associated with fixing large-scale performance problems after deployment.

4. Managing Large-Scale Tests with AI Capabilities

As businesses grow, so do their systems and the complexity of performance testing required. AI scales effectively with these needs by providing adaptive testing mechanisms. It can simulate thousands of virtual users and interactions to test system performance under real-world loads. Additionally, AI can dynamically adjust testing parameters in response to changing system behaviors, ensuring consistent performance across all scales of operation.
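The sketch below shows the two mechanical pieces behind this idea: running many virtual users concurrently, and a feedback rule that adjusts the user count based on observed latency. The request is a stand-in that only sleeps, and the ramp-up rule is a fixed heuristic rather than a learned policy. All names and numbers here are assumptions for illustration.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor

def fake_request(user_id):
    """Stand-in for a real HTTP call; sleeps briefly and returns latency in ms."""
    started = time.perf_counter()
    time.sleep(random.uniform(0.001, 0.005))
    return (time.perf_counter() - started) * 1000

def run_load(virtual_users, requests_per_user=3, max_workers=50):
    """Run every virtual user's requests on a thread pool; return all latencies."""
    def one_user(user_id):
        return [fake_request(user_id) for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        per_user = list(pool.map(one_user, range(virtual_users)))
    return [latency for user in per_user for latency in user]

def next_user_count(current_users, observed_ms, target_ms):
    """Simple feedback rule: ramp up while under target, back off when over."""
    if observed_ms <= target_ms:
        return int(current_users * 1.25) + 1
    return max(1, current_users // 2)

latencies = run_load(virtual_users=20, requests_per_user=2, max_workers=10)
print(len(latencies), "requests completed")
print("next step:", next_user_count(20, max(latencies), target_ms=200), "users")
```

In a real test, `fake_request` would be replaced by calls to the system under test, and a dedicated load tool would generate the traffic. The feedback function marks the place where an adaptive model would plug in.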

Future of AI in Performance Testing

1. AI Advancements on the Rise

AI is evolving quickly, bringing new technologies that raise the bar for performance testing. Sophisticated machine learning models can predict and identify performance issues before they manifest, enabling proactive testing strategies. NLP in performance testing interprets complex test scenarios described in human language, enabling quicker and more accurate test case creation and modification. The integration of AI with IoT devices allows for comprehensive real-world scenario testing, helping ensure good application performance across various devices and network conditions.

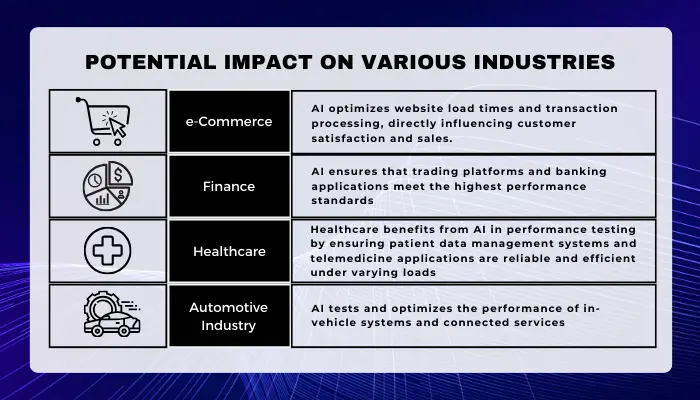

2. Potential Impact on Various Industries

AI in performance testing holds significant implications across multiple industries. In e-commerce, AI optimizes website load times and transaction processing, directly influencing customer satisfaction and sales. In finance, AI ensures that trading platforms and banking applications meet the highest performance standards. Healthcare benefits from AI in performance testing by ensuring patient data management systems and telemedicine applications are reliable and efficient under varying loads. In the automotive industry, AI tests and optimizes the performance of in-vehicle systems and connected services.

3. Expert Predictions on the Future of AI in Performance Testing

Experts predict that AI's role in performance testing will expand significantly. Fully autonomous performance testing processes are expected, where AI systems identify and diagnose performance issues and implement fixes in real time, reducing the need for human intervention. AI will play a crucial role in cross-platform testing, ensuring consistent performance across all devices and operating systems. Personalized performance testing will become more common, with AI tailoring test scenarios to reflect actual user behaviors, providing accurate and relevant results.

Conclusion

AI-driven testing capabilities help businesses handle large-scale applications efficiently, reduce manual effort, and improve accuracy.

In this article, we've explored top AI tools for performance testing. By integrating these tools, businesses can reduce test cycles, lower costs, and optimize resource allocation while scaling their testing efforts.

Staying updated with AI advancements is vital for leveraging the latest technologies to enhance performance testing. As AI evolves, it promises greater efficiencies and capabilities. By staying informed and adaptable, businesses can ensure they are setting the pace in their respective industries.

Reach out to our experts for guidance on implementing AI in your testing strategy.

FAQs

How can AI performance testing reduce costs for my business?

What are the key challenges when integrating AI into existing performance testing frameworks?

How does AI improve the accuracy of performance testing results?

Can AI-driven performance testing handle large-scale applications effectively?

What should businesses consider when choosing AI tools for performance testing?
