Vibe Testing: A New Way to Test Software in the AI Age

With vibe coding, you can build software faster. But as development accelerates, testing starts falling behind.

Despite rapid innovation in coding tools, testing remains rigid, manual, and slow. It’s the bottleneck in an otherwise fast-moving system.

That’s why we’re seeing the rise of its natural counterpart: vibe testing, an approach that evaluates software the way users actually experience it.

In this blog, we’ll walk you through everything you need to know about it.

What You’ll Learn:

- Why traditional testing struggles to keep up with AI-driven development

- What vibe testing is, and what makes it different

- How vibe debugging turns insights into fixes

- Real-world examples of teams using it

- How your team can get started

Let’s start with the root of the problem.

The Problem: AI Has Outpaced Traditional Testing

AI flipped the software lifecycle. With vibe coding tools like GitHub Copilot, ChatGPT, and Cursor, developers can now build MVPs in 2–3 hours. Entire products that used to take weeks or months now come together in days.

But this is where things get interesting, and concerning. The faster we build, the harder it is to maintain quality. Testing hasn’t just failed to keep pace; it’s falling further behind.

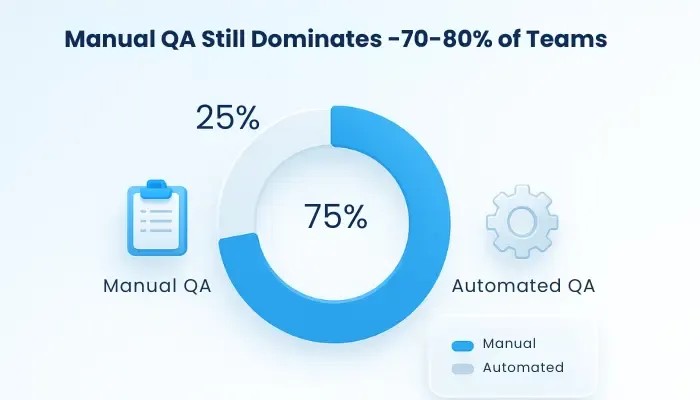

Today, roughly 70–80% of teams still rely on manual QA. Not because they prefer it, but because automated testing is often too fragile to be useful. One minor UI tweak and scripts fail; sometimes entire flows break. Teams end up fixing tests that used to work, and the time that should go to feature development gets eaten up by maintenance.

On top of that, there’s AI-generated code that is fast, functional, and increasingly unfamiliar. You didn’t write every line. You didn’t trace every logic branch. So how do you validate something you didn’t build yourself?

That speed is the concerning part. Software development is moving faster than ever, but confidence in quality isn’t keeping up.

We need to address two challenges here:

First, we need to test faster.

Second, we need to test smarter, the way real users experience the product.

That’s where vibe testing changes everything.

What Is Vibe Testing (Really)?

If traditional testing is about ticking off boxes, vibe testing is about checking the experience. It shifts the core question from “Did the button work?” to “Did this interaction make sense to the person using it?” Beyond analyzing whether the software functions, it checks whether it feels right.

This distinction is crucial because most user frustrations don’t come from crashes or major bugs. They come from micro-frictions like laggy transitions, awkward modals, confusing flows, and missing feedback.

These are the small, frustrating moments that don’t break the app but quietly kill adoption. Traditional frameworks miss them because they’re designed to verify logic, not to spot friction. Vibe testing works differently.

Instead of hand-scripting rigid test cases or managing spreadsheets of edge cases, you describe the test in plain language. Something like, “Try to buy sneakers under $100, check out as a guest, and flag anything that feels off.”

From there, the AI takes over. It navigates your app like a real user, watching for anything that interrupts the flow or creates friction. It notices when a modal opens too slowly, when an error message is vague, or when a button is hard to see.

These are the things that frustrate users but slip past most automated suites. Vibe testing catches them because it’s aligned with how people actually experience software, not just the logic behind the screen.
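To make “flag anything that feels off” a little more concrete, here is a minimal Python sketch of the kinds of friction signals described above (a slow modal, a vague error message, a hard-to-see button), checked against what an agent might record for one step. Everything here is an assumption for illustration: the `StepObservation` shape, the 300 ms modal budget, and the list of vague messages are hypothetical, not any tool’s API. The contrast check uses the WCAG 2.x relative-luminance formula, where 4.5:1 is the AA minimum for normal-size text.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical thresholds for illustration; tune them for your own product.
MODAL_BUDGET_MS = 300
MIN_CONTRAST = 4.5  # WCAG 2.x AA minimum contrast for normal-size text
VAGUE_MESSAGES = {"something went wrong", "an error occurred", "error", "invalid input"}

RGB = Tuple[int, int, int]


@dataclass
class StepObservation:
    """What a hypothetical testing agent observed during one user step."""
    name: str
    modal_open_ms: Optional[float] = None
    error_message: Optional[str] = None
    button_fg: Optional[RGB] = None  # button text colour, sRGB 0-255
    button_bg: Optional[RGB] = None  # button background colour, sRGB 0-255


def relative_luminance(rgb: RGB) -> float:
    """WCAG 2.x relative luminance of an sRGB colour."""
    def linear(c: int) -> float:
        s = c / 255
        return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4

    r, g, b = (linear(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: RGB, bg: RGB) -> float:
    """WCAG contrast ratio, from 1:1 (identical colours) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def find_friction(step: StepObservation) -> List[str]:
    """Return human-readable friction flags for one observed step."""
    flags = []
    if step.modal_open_ms is not None and step.modal_open_ms > MODAL_BUDGET_MS:
        flags.append(f"{step.name}: modal took {step.modal_open_ms:.0f} ms to open")
    if step.error_message is not None:
        normalized = step.error_message.strip().lower().rstrip(".!")
        if normalized in VAGUE_MESSAGES:
            flags.append(f"{step.name}: vague error message {step.error_message!r}")
    if step.button_fg is not None and step.button_bg is not None:
        ratio = contrast_ratio(step.button_fg, step.button_bg)
        if ratio < MIN_CONTRAST:
            flags.append(f"{step.name}: hard-to-see button (contrast {ratio:.2f}:1)")
    return flags


if __name__ == "__main__":
    checkout = StepObservation(
        name="guest checkout",
        modal_open_ms=850,
        error_message="Something went wrong.",
        button_fg=(170, 170, 170),  # light grey text...
        button_bg=(255, 255, 255),  # ...on a white button
    )
    for flag in find_friction(checkout):
        print(flag)
```

These fixed rules only stand in for the judgment an AI agent would apply. The point is the kind of signal worth recording at every step, so that “feels off” becomes something you can review.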

In a video on vibe coding and testing, Melissa Benua, VP of Engineering at Rocked, defined it as fast, conversational improvisation with AI and likened the AI to the “world’s smartest and fastest intern.”

Benua argued that testers are uniquely suited to this approach because they serve as the customer's advocate, understand product context, and "know what good looks like," which is crucial for guiding the AI.

She demonstrated how AI tools such as Cursor, with its retrieval-augmented generation (RAG), let testers rapidly generate test plans and specs and even implement features and fixes, turning code into an accessible tool for anyone who can “supervise.”

Why Control Still Belongs to You

One of the most common concerns with AI in testing is the fear of losing control.

If you didn’t write the test, how can you be sure it’s checking the right thing?

What if it misses something critical or, worse, flags something irrelevant and wastes your team’s time?

These are valid questions, especially for QA leads and engineering managers tasked with ensuring quality and accountability.

But vibe testing doesn’t take control away from you. Instead, it acts on your judgment and scales it. You still define what matters and set the intent. The AI follows your direction, your user goals, and your definitions of quality. Your expertise stays at the center.

Here’s what one attendee of Melissa Benua’s conference had to say about vibe testing.

Every time a vibe test runs, the AI records exactly what it did, what it expected, and what actually happened. You get detailed, step-by-step context, full screenshots, and a transparent audit trail. There’s no guesswork, just actionable feedback you can review, tweak, or override.

That visibility flips the script on traditional automation. Instead of locking you out of the test process, it brings you closer to it: rather than bare pass/fail results, you see behavior. And over time, as you review and validate results, the system learns what matters most to your team and your users.

Just as importantly, you stay in control of what happens next. Vibe testing might explore dozens of user paths in minutes, but it doesn’t push code, merge PRs, or deploy on its own. You remain the final decision-maker. In effect, it becomes an obsessive, detail-driven assistant that works overnight, logs every step, and hands you a clean, prioritized report in the morning, receipts included.
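The audit trail and the prioritized morning report can be pictured as a simple data structure. The sketch below is a minimal illustration, assuming a hypothetical `StepRecord` shape (action, expected, actual, screenshot path, severity) and a made-up four-level severity scale; real tools define their own formats.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical severity scale: a lower rank means more urgent.
SEVERITY_RANK = {"blocker": 0, "major": 1, "minor": 2, "cosmetic": 3}


@dataclass
class StepRecord:
    """One audit-trail entry: what the agent did, expected, and actually saw."""
    action: str
    expected: str
    actual: str
    screenshot: str                 # path to the screenshot captured at this step
    severity: Optional[str] = None  # None means the step behaved as expected

    @property
    def passed(self) -> bool:
        return self.severity is None


def prioritized_report(trail: List[StepRecord]) -> str:
    """List only the problem steps, most severe first, each with its evidence."""
    # sorted() is stable, so steps of equal severity keep the order they ran in.
    issues = sorted(
        (step for step in trail if not step.passed),
        key=lambda step: SEVERITY_RANK[step.severity],
    )
    lines = [f"{len(issues)} issue(s) in {len(trail)} steps"]
    for step in issues:
        lines.append(f"[{step.severity}] {step.action}")
        lines.append(f"    expected: {step.expected}")
        lines.append(f"    actual:   {step.actual}")
        lines.append(f"    evidence: {step.screenshot}")
    return "\n".join(lines)


if __name__ == "__main__":
    trail = [
        StepRecord("Search for 'sneakers'", "Results appear", "Results appeared in 0.4 s", "shots/01.png"),
        StepRecord("Apply 'under $100' filter", "Only items under $100",
                   "Two items priced $120 still shown", "shots/02.png", "major"),
        StepRecord("Tap 'Check out as guest'", "Guest form opens",
                   "Button hidden behind cookie banner", "shots/03.png", "blocker"),
    ]
    print(prioritized_report(trail))
```

Keeping passing steps in the trail but out of the report mirrors the idea above: the full record is there if you want to audit it, while the report surfaces only what needs a decision.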

Proof that Vibe Testing Works

Theory is great, but real teams work under pressure: tight deadlines, shifting priorities, and the occasional last-minute fix. So the obvious question is: does vibe testing actually work in practice?

The short answer is yes. And not just in demos or controlled environments, but in production, where stakes are high and things break often. One global healthcare company was tangled in fragile, hand-coded tests. Every minor UI change triggered a wave of rewrites. When they switched to prompt-based testing, they cut test creation time by 90%. What used to take a week of scripting now takes a few hours.

Image credit: The New Stack

This is just one example of its usefulness. There are plenty more. We also use AI and other modern tools to do QA and software testing for our clients.

And when things do break, vibe debugging comes to the rescue. One team hit a staging deployment failure with no clues in the logs. Instead of jumping between dashboards or sifting through reports, they asked the AI: “Why did my staging deploy fail?” Within minutes, it surfaced the cause: a missing dependency in a Dockerfile. The AI also suggested the fix, and the team was back on track in less than five minutes.
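Part of what a debugging assistant does in a case like this is match failure output to a likely cause. The toy sketch below shows only that first step, using a hypothetical, hand-written rule table; it is not how any particular product works, and real assistants reason over far more context (configs, recent changes, system behavior) than a couple of regular expressions.

```python
import re
from typing import List

# Hypothetical rule table: (pattern in build output, likely cause, suggested fix).
# "{0}" is filled with the pattern's first capture group. Note that a Python
# module name and the pip package providing it can differ (e.g. yaml / PyYAML).
RULES = [
    (
        re.compile(r"ModuleNotFoundError: No module named '([\w.]+)'"),
        "the Python module '{0}' is not installed in the image",
        "add the package that provides '{0}' to requirements.txt or a RUN pip install step",
    ),
    (
        re.compile(r"(\S+): not found"),
        "the executable '{0}' is missing from the image",
        "install the package that provides '{0}' in the Dockerfile",
    ),
]


def triage(log: str) -> List[str]:
    """Return one plain-English finding for every rule match in the log."""
    findings = []
    for pattern, cause, fix in RULES:
        for match in pattern.finditer(log):
            name = match.group(1)
            findings.append(f"Likely cause: {cause.format(name)}. Suggested fix: {fix.format(name)}.")
    return findings


if __name__ == "__main__":
    build_log = """\
Step 5/7 : RUN python -c "import app"
Traceback (most recent call last):
  File "<string>", line 1, in <module>
ModuleNotFoundError: No module named 'requests'
"""
    for finding in triage(build_log):
        print(finding)
```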

What ties all these stories together is speed and confidence. These teams caught issues before customers did. They stayed in control, reviewed AI findings, and made the final calls.

You don’t need to trust vibe testing blindly. It’s a feedback loop that amplifies human judgment with machine consistency.

What Powers Vibe Testing

Underneath the simplicity of natural language prompts and intuitive feedback lies a modern stack of tools, all designed to evaluate not just whether software runs, but whether it feels right to use.

Let’s go through them one by one.

- Prompt-based Automation: You describe a test in everyday language like, “Add an item under $50 and check out as a guest”, and the system figures out the rest. It interprets your intent, navigates the UI like a user would, and builds the test on the fly. These tests are flexible by default. They evolve with your product, eliminating the constant churn of fragile scripts and hard-coded selectors.

- Self-healing Selectors: That flexibility comes from self-healing selectors, smart element locators that understand context. When a button moves or a label changes, the system adapts instead of breaking. Where traditional test automation would fail, vibe testing keeps moving forward.

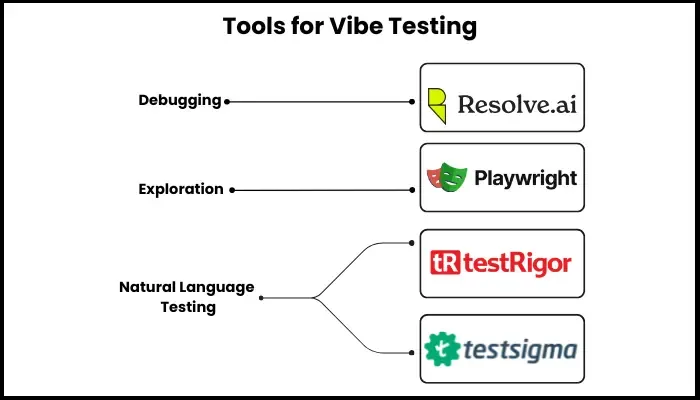

- Debugging: Assistants like Resolve AI power this layer. They parse logs, scan configuration files, analyze system behavior, and surface the root cause in plain English. You don’t need to jump between dashboards or decipher cryptic error codes. You get a direct answer, and often a proposed fix too.

- Exploration: This is another key piece. Platforms like Playwright MCP let the system interact with your app in real time. You can say, “Explore this page and write tests for it,” and it will scroll, click, fill forms, and watch for issues. More than just following instructions, it discovers edge cases and hidden UI states the way a curious user might.

- Natural-language Testing: Platforms like testRigor and Testsigma make this possible, and accessible across teams. QA leads, product managers, and engineers alike can write and run meaningful tests without touching code. That breaks down silos and turns quality into a shared responsibility.
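The self-healing selectors described in the list can be illustrated with a fallback-and-score approach. When a test is created, remember several attributes of the target element; when the primary locator later fails, pick the element on the page that still agrees with most of them. This is a minimal sketch under that assumption: the element dictionaries, the attribute weights, and the 0.5 threshold are hypothetical, and commercial tools use their own (often ML-based) strategies.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]  # hypothetical snapshot: attribute name -> value

# Hypothetical weights: stable identifiers count more than styling.
WEIGHTS = {"id": 3.0, "test_id": 3.0, "text": 2.0, "role": 1.0, "css_class": 0.5}


def similarity(remembered: Element, candidate: Element) -> float:
    """Weighted share of the remembered attributes that the candidate still has."""
    total = sum(WEIGHTS.get(attr, 1.0) for attr in remembered)
    matched = sum(
        WEIGHTS.get(attr, 1.0)
        for attr, value in remembered.items()
        if candidate.get(attr) == value
    )
    return matched / total if total else 0.0


def locate(remembered: Element, page: List[Element], threshold: float = 0.5) -> Optional[Element]:
    """Find the element a test step refers to, healing the locator if needed."""
    # 1. Fast path: the primary locator (the id) still matches exactly.
    if "id" in remembered:
        for element in page:
            if element.get("id") == remembered["id"]:
                return element
    # 2. Healing: pick the candidate that agrees with most remembered attributes.
    best = max(page, key=lambda el: similarity(remembered, el), default=None)
    if best is not None and similarity(remembered, best) >= threshold:
        return best
    # 3. Nothing is close enough: fail loudly instead of guessing.
    return None


if __name__ == "__main__":
    buy_button = {"id": "buy-btn", "text": "Buy now", "role": "button", "css_class": "btn-primary"}
    redesigned_page = [
        {"id": "nav-home", "text": "Home", "role": "link"},
        {"id": "purchase", "text": "Buy now", "role": "button", "css_class": "btn-primary"},
    ]
    # The id changed, but text, role, and class still match, so the locator heals.
    print(locate(buy_button, redesigned_page))
```

Returning `None` below the threshold is a deliberate design choice: a healer that always picks *something* can silently click the wrong button, which is worse than a clear failure.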

For advanced teams, tools like TestZeus Hercules take it even further. These proactive agents run your tests and suggest new ones based on real usage data. They identify risky flows, coverage gaps, and areas you might be missing.
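The idea of suggesting tests from real usage data can be sketched as a comparison between the journeys users actually take and the journeys your suite already covers. The minimal example below assumes each session is recorded as a tuple of page names (a hypothetical format) and ranks uncovered journeys by how often users hit them. It illustrates the idea only; it is not how TestZeus Hercules or any other tool implements it.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Flow = Tuple[str, ...]  # hypothetical: one user journey as an ordered tuple of pages


def coverage_gaps(sessions: Iterable[Flow], tested: Set[Flow], top_n: int = 3) -> List[Tuple[Flow, int, float]]:
    """Rank journeys seen in real sessions that no existing test covers.

    Returns (flow, session_count, share_of_all_sessions), most common first.
    """
    counts = Counter(sessions)
    total = sum(counts.values())
    untested = [(flow, n) for flow, n in counts.most_common() if flow not in tested]
    return [(flow, n, n / total) for flow, n in untested[:top_n]]


if __name__ == "__main__":
    sessions = (
        [("home", "search", "product", "checkout")] * 50
        + [("home", "search", "product", "wishlist")] * 30
        + [("home", "account", "reset-password")] * 20
    )
    tested = {("home", "search", "product", "checkout")}
    for flow, n, share in coverage_gaps(sessions, tested):
        print(f"{share:.0%} of sessions ({n}) follow an untested flow: {' > '.join(flow)}")
```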

These systems adapt, explore, explain, and always put users at the center of testing.
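The exploration described in the list above (“Explore this page and write tests for it”) can be modeled as a breadth-first search over the states of your UI: visit every reachable state once, remember the shortest sequence of actions that reached it, and flag states that look broken. In the sketch below, the app is a hypothetical, hard-coded state graph standing in for a live browser session, where a tool like Playwright would perform the actual clicks; the `SUSPICIOUS` set is likewise an assumed stand-in for whatever judgment flags a state as broken.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical app model: state -> {action: next_state}. In a real run these
# transitions would be discovered by driving the UI, not hard-coded.
APP: Dict[str, Dict[str, str]] = {
    "home": {"click search": "results", "click account": "login"},
    "results": {"click product": "product", "apply filter": "results"},
    "product": {"add to cart": "cart"},
    "cart": {"checkout as guest": "guest_form", "remove item": "empty_cart"},
    "guest_form": {"submit empty form": "error_no_message"},
    "login": {},
    "empty_cart": {},
    "error_no_message": {},
}

# States a (hypothetical) oracle would flag, e.g. an error with no feedback.
SUSPICIOUS = {"error_no_message"}


def explore(start: str) -> Tuple[Dict[str, List[str]], List[str]]:
    """Breadth-first exploration: shortest action path to every reachable state."""
    paths: Dict[str, List[str]] = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, nxt in APP.get(state, {}).items():
            if nxt not in paths:  # visit each state only once
                paths[nxt] = paths[state] + [action]
                queue.append(nxt)
    issues = [state for state in paths if state in SUSPICIOUS]
    return paths, issues


if __name__ == "__main__":
    paths, issues = explore("home")
    print(f"Reached {len(paths)} states")
    for state in issues:
        print(f"Issue at '{state}' via: {' -> '.join(paths[state])}")
```

Breadth-first order is a reasonable default here because the recorded path to each issue is the shortest one, which is exactly the reproduction recipe you would want in a bug report.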

What Vibe Testing Means for Your Team

So what does vibe testing look like for your team, whether it’s big or small?

There are many ways to look at its impact, but we’ve identified the benefits that matter most, the ones that will help your team if you implement vibe testing right.

- Fewer Broken Tests: Because vibe testing uses intent-driven flows and self-healing selectors, your test suite doesn’t collapse every time someone renames a button or tweaks the layout. Instead of spending hours fixing brittle scripts, your team can focus on building, improving, and shipping. Testing becomes quieter, more reliable, and better aligned with the speed of development.

- Smart Assistant: It’s not replacing your team; it’s amplifying what they do best. Vibe testing takes care of repetitive work, like verifying core flows, exploring edge cases, and spotting subtle UX flaws, so your team can focus on higher-leverage work. QA shifts from checklist execution to deep product insight. Testers become experience advocates instead of bug catchers.

- Much-needed Safety Net: AI-generated code is arriving faster than ever, and vibe testing provides a safety net. With tools like Copilot and ChatGPT accelerating development, it’s easy to introduce logic you didn’t write or fully review. Vibe testing helps you validate those changes not just by confirming they work, but by checking whether they make sense to a real user. And when something breaks, vibe debugging doesn’t stop at “what went wrong”; it tells you why and how to fix it, cutting the time from triage to resolution.

- Cultural Shift: When testing becomes more resilient, more user-focused, and less fragile, teams stop seeing it as a bottleneck. They stop fearing change. They experiment more and move faster, with confidence. QA becomes a leadership function, driving quality conversations, surfacing friction early, and shaping better experiences.

Conclusion

The best thing about vibe testing is that you don’t need a big overhaul to get started. Pick one critical flow, such as sign-up, checkout, or onboarding, and run it through vibe testing alongside your current test suite. Compare what each approach catches.

What did the old tests miss? What surfaced only through a user’s eyes?

Then scale. Let AI handle the maintenance work, and let your team lead the experience. The future is about shipping smart and fast, and vibe testing makes that future possible.
