Can Exploratory Testing Be Automated?

By Michael Bolton

In our earlier blog post, Simran wrote about the benefits of ad hoc testing and why it matters. We at ThinkSys believe that QA automation is fundamental to increasing productivity and shortening development cycles, so this week we are bringing you Michael Bolton's thoughts on whether exploratory testing can be automated. There are (at least) two ways to interpret and answer that question.

Definition of Exploratory Testing

Let's first answer the literal version of the question, starting from Cem Kaner's definition of exploratory testing:

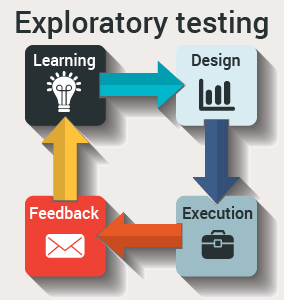

Exploratory software testing is a style of software testing that emphasizes the personal freedom and responsibility of the individual tester to continually optimize the value of her work by treating test-related learning, test design, test execution, and test result interpretation as mutually supportive activities that run in parallel throughout the project.

If we take this definition of exploratory testing, we see that it's not a thing that a person does so much as a way that a person does it. An exploratory approach emphasizes the individual tester and his or her freedom and responsibility. The definition identifies design, interpretation, and learning as key elements of an exploratory approach. None of these are things that we associate with machines or automation, except in terms of automation as a medium in the McLuhan sense: an extension (or enablement, or enhancement, or acceleration, or intensification) of human capabilities. The machine can handle much of the execution, but the work of getting the machine to do it is governed by exploratory, not scripted, work.

Exploratory Approach Including Automation

Which brings us to the second way of looking at the question: can an exploratory approach include automation? The answer there is absolutely yes.

Some people might have a problem with the idea, because of a parsimonious view of what test automation is, or does. To some, test automation is 'getting the machine to perform the test'. I call that checking. I prefer to think of test automation in terms of what we say in the Rapid Software Testing course: test automation is any use of tools to support testing.

A reader asked: "If yes, then to what extent? While I explore (investigate) a product, I do whatever comes to mind by thinking in reverse: how could this piece of functionality break? I am not sure if my approach is correct, but so far it's been working for me."

That's certainly one way of applying the idea. Note that when you think in a reverse direction, you're not following a script. 'Thinking backwards' isn't an algorithm; it's a heuristic approach that you apply and that you interact with. Yet there's more to test automation than breaking things. I like your use of 'investigation', which to me suggests that you can use automation in any way that helps you learn something about the program.

A while ago, I developed a program to be used in our testing classes. I developed that program test-first, creating some examples of input that it should accept and process, and input that it should reject. That was an exploratory process, in that I designed, executed, and interpreted unit checks, and I learned. It was also an automated process, to the degree that the execution of the checks and the aggregating and reporting of results was handled by the test framework. I used the result of each test, each set of checks, to inform both my design of the next check and the design of the program. So let me state this clearly:

Test-driven development is an exploratory process:

The running of the checks is not an exploratory process; that's entirely scripted. But the design of the checks, the interpretation of the checks, the learning derived from the checks, the looping back into more design or coding of either program code or test code, or of interactive tests that don't rely on automation so much: that's all exploratory stuff.
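To make the split between scripted and exploratory work concrete, here is a minimal Python sketch of test-first checks with the standard `unittest` module. The `accepts` function and its rule (one to eight ASCII letters) are hypothetical, invented only for illustration; the actual puzzle program's rules are not described here. Running the checks is the scripted part; choosing the examples, interpreting a failure, and deciding what to write next is the exploratory part.

```python
import re
import unittest


# HYPOTHETICAL rule, invented for illustration: accept a string of one to
# eight ASCII letters and reject anything else.
def accepts(text: str) -> bool:
    """Return True if `text` satisfies the hypothetical input rule."""
    return re.fullmatch(r"[A-Za-z]{1,8}", text) is not None


class InputChecks(unittest.TestCase):
    # Examples of input the program should accept (written before the code).
    def test_accepts_valid_examples(self):
        for text in ["a", "Hello", "ABCDEFGH"]:
            with self.subTest(text=text):
                self.assertTrue(accepts(text))

    # Examples of input the program should reject.
    def test_rejects_invalid_examples(self):
        for text in ["", "abc123", "ABCDEFGHI", "two words"]:
            with self.subTest(text=text):
                self.assertFalse(accepts(text))


if __name__ == "__main__":
    # exit=False lets the script carry on after the runner reports results.
    unittest.main(argv=["input_checks"], exit=False)
```

In a genuine test-first loop, the two test methods would exist before `accepts` did, and each failing check would feed back into the design of both the program and the next check.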

The program that I wrote is a kind of puzzle that requires class participants to test and reverse-engineer what the program does. That's an exploratory process; there are no scripted approaches to reverse-engineering something, because the first unexpected piece of information derails the script. While workshopping this program with colleagues, one in particular, James Lyndsay, got curious about something that he saw. Curiosity can't be automated.

He decided to generate some test values to refine what he had discovered in earlier exploration. Sapient decisions can't be automated. He used Excel, which is a powerful test automation tool, when you use it to support testing. He invented a couple of formulas. Invention can't be automated. The formulas allowed Excel to generate a great big table. The actual generation of the data can be automated. He took that data from Excel, and used the Windows clipboard to throw the data against the input mechanism of the puzzle. Sending the output of one program to the input of another can be automated. The puzzle, as I wrote it, generates a log file automatically. Output logging can be automated. James noticed the logs without me telling him about them. Noticing can't be automated. Since the program had just put out 256 lines of output, James scanned it with his eyes, looking for patterns in the output.
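A rough Python analogue of the automatable steps in James's workflow might look like the sketch below. It swaps in Python for both Excel and the clipboard, and the details are assumptions: `puzzle` is a made-up stand-in (the real program's behavior is not described), and rendering row numbers 0 to 255 as 8-bit binary strings is just one example of a formula that yields 256 systematic inputs.

```python
from typing import Callable


# HYPOTHETICAL stand-in for the puzzle program. The real program's rules
# are not described in the text; this parity rule is invented for illustration.
def puzzle(text: str) -> str:
    return "accepted" if text.count("1") % 2 == 0 else "rejected"


def generate_inputs(rows: int = 256) -> list[str]:
    """Mimic a spreadsheet formula filled down `rows` rows:
    row i becomes the 8-bit binary representation of i."""
    return [format(i, "08b") for i in range(rows)]


def run_and_log(inputs: list[str], program: Callable[[str], str]) -> list[str]:
    """Send every input to the program and record one tab-separated
    log line (input, output) per input."""
    return [f"{text}\t{program(text)}" for text in inputs]


if __name__ == "__main__":
    log_lines = run_and_log(generate_inputs(), puzzle)
    print(f"{len(log_lines)} lines of output")
    # Scanning the lines and deciding what is interesting stays a human job.
    for line in log_lines[:8]:
        print(line)
```

The code covers only the generation, transfer, and logging; inventing the formula, noticing the log, and judging which lines matter are left to the tester.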

Looking for specific patterns and noticing them can't be automated unless and until you know what to look for. But automation can help to reveal hitherto unnoticed patterns by changing the context of your observation. James decided that the output he was observing was very interesting. Deciding whether something is interesting can't be automated. James could have filtered the output by grepping for other instances of that pattern. Searching for a pattern, using regular expressions, is something that can be automated.
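Filtering a log with a regular expression, the Python equivalent of grepping it, takes only a few lines. The log excerpt and the pattern below are hypothetical; the point is that the search can only be automated once someone already knows which pattern to search for.

```python
import re

# HYPOTHETICAL log excerpt: input and output separated by a tab.
# The real puzzle's log format is not shown in the text.
SAMPLE_LOG = """\
00000000\taccepted
00000001\trejected
00000011\taccepted
00000111\trejected
"""


def grep(pattern: str, lines: list[str]) -> list[str]:
    """Return the lines that contain a match for the regular expression,
    much as grep does for a file."""
    regex = re.compile(pattern)
    return [line for line in lines if regex.search(line)]


if __name__ == "__main__":
    # Only useful once someone has decided that "rejected" lines are worth a look.
    for line in grep(r"\trejected$", SAMPLE_LOG.splitlines()):
        print(line)
```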

James instead decided that a visual scan was fast enough and valuable enough for the task at hand. Evaluation of cost and value, and making decisions about them, can't be automated. He discovered the answer to the puzzle that I had expressed in the program, and he identified results that blew my mind: ways in which the program was interpreting data that were entirely correct, but far beyond my model of what I thought the program did.

Learning can't be automated. Yet there is no way that we would have learned this so quickly without automation. The automation didn't do the exploration on its own; instead, it supercharged our exploration. There were no automated checks in the testing that we did, so there was no automation in the record-and-playback sense, and none in the expected, predicted result sense. Since then, I've done much more investigation of that seemingly simple puzzle, in which I've fed back what I've learned into more testing, using variations on James' technique to explore the input and output space a lot more. And I've discovered that the program is far more complex than I could have imagined.

So: is that automating exploratory testing? I don't think so. Is that using automation to assist an exploratory process? Absolutely.

Republished with permission from http://www.developsense.com/blog/2010/09/can-exploratory-testing-be-automated/, by Michael Bolton. Republication of this work is not intended as an endorsement of ThinkSys's services by Michael Bolton or DevelopSense.
