Understanding Docker Components

As the race to release software faster than ever continues, organizations are taking extraordinary measures to stay ahead. The development process directly influences the entire software lifecycle, and Docker is one of the most popular platforms for improving it, having changed how software is built, shipped, and run. Many software development companies use Docker to streamline their development process. However, getting the best results requires understanding Docker's components. This article explains Docker's basic and advanced components, its architecture, how it works, and some best practices.

What is Docker?

Docker is an open-source containerization platform that helps enterprises create, deploy, and run applications in containers. A Docker container is a lightweight, standalone package that bundles an application with its dependencies, such as binaries, libraries, and frameworks. Because containers separate applications from the underlying infrastructure, developers can deploy software quickly.

Docker containers have become immensely practical because they remove the need to install a separate guest operating system on top of the host operating system. Containers share the host OS kernel, so many containers can run on a single OS without multiple guest operating systems. Key benefits of Docker include the following:

- Portability: When you deploy a tested containerized application to another system, you can expect it to behave the same way, because the container carries its own dependencies. The main requirement is that the target system runs Docker (and, for most images, uses a compatible CPU architecture). This saves the time that would otherwise be spent setting up the environment and retesting the application.

- Simple and Fast: Docker is well known for simplifying deployment. Users package their code together with its configuration and deploy it, without complicated setup steps. Because the infrastructure requirements are decoupled from the application environment, the process is simpler still.

- Isolation: One of the key benefits of Docker is the isolation it provides for applications. Uninstalling an application from an operating system can leave temporary files behind. Because each Docker container's resources are isolated from other containers, every application stays separate from the others. To delete an application, you remove its entire container, which has no impact on other applications and leaves no configuration or temporary files behind (apart from any volumes you explicitly created).

- Security: Each Docker container has its own set of resources and is segregated from other containers. This isolation gives the developer greater control over container management and traffic flow. Because, by default, no container can see into or interfere with another, isolation limits how far a problem in one container can spread, helping keep the application environment secure.

Docker Vs. Virtual Machine:

Because they serve similar purposes, Docker is often compared with virtual machines (VMs). Although they may seem alike at first, they differ significantly. Here is a quick comparison between Docker and a virtual machine.

- Operating System: The main difference between Docker and a VM is how they handle the operating system. Docker containers share the host operating system's kernel, which makes them lightweight. In contrast, each VM runs a full guest OS on top of the host operating system, which makes VMs heavy.

- Performance: Even though Docker and VMs are often used for different purposes, comparing their performance is still worthwhile. A VM's heavy architecture makes it slow to boot. Docker's lightweight architecture, combined with configurable resource allocation, lets containers start much faster. Because containers run on a single host operating system, they are also easier to duplicate and scale. In short, containers generally start faster and use fewer resources than VMs.

- Security: Security is where virtual machines have the clearer advantage. Each VM runs its own operating system and is isolated from other VMs by the hypervisor, so VMs offer strong isolation. Docker containers, by contrast, share the host operating system's kernel and may share other resources, so a kernel-level vulnerability can threaten not just one container but every container on that host.

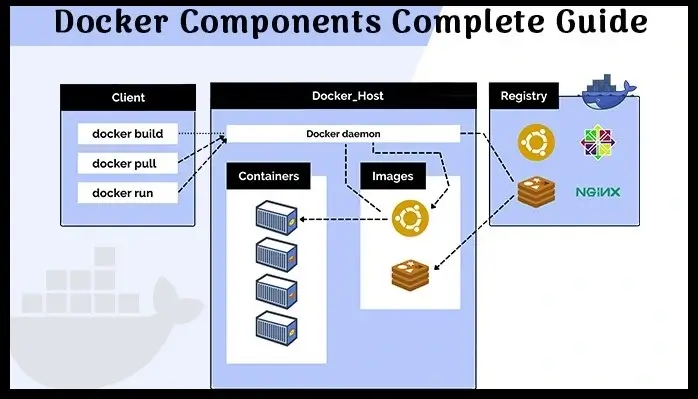

Docker Architecture:

Docker uses a client-server architecture where the Docker client communicates with the Docker daemon. The Docker daemon is responsible for creating, running, and distributing containers.

Depending on the setup, the client and the daemon can run on the same system, or the client can connect to a remote Docker daemon.

The two communicate through a REST API, over UNIX sockets or a network interface. Docker Compose is another Docker client; it lets you work with applications made up of several containers.
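To make the client-server flow concrete, here is a minimal Python sketch of the kind of raw HTTP request a client sends to the daemon over its UNIX socket. It assumes the default Linux socket path `/var/run/docker.sock` and an API version prefix of `v1.43`; both vary by installation (`docker version` shows the API version your daemon supports). The helper names `build_request`, `send`, and `docker_run_requests` are hypothetical, and the `docker run` translation is deliberately simplified: the real CLI may also pull the image, attach to the container's output, and wait for it to exit.

```python
import json
import socket
from typing import List, Optional

API_VERSION = "v1.43"  # assumption: use a version your daemon supports
SOCKET_PATH = "/var/run/docker.sock"  # default daemon socket on Linux


def build_request(method: str, path: str, body: Optional[dict] = None) -> bytes:
    """Serialize one Docker Engine API call as a raw HTTP/1.1 request."""
    payload = json.dumps(body).encode() if body is not None else b""
    head = [
        f"{method} /{API_VERSION}{path} HTTP/1.1",
        "Host: docker",  # placeholder: HTTP/1.1 requires a Host header
        "Content-Type: application/json",
        f"Content-Length: {len(payload)}",
        "Connection: close",  # lets send() read until the daemon closes
        "",
        "",
    ]
    return "\r\n".join(head).encode() + payload


def send(raw: bytes, socket_path: str = SOCKET_PATH) -> bytes:
    """Send a request to a local daemon and return the raw HTTP response."""
    chunks = []
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(raw)
        while True:
            chunk = sock.recv(65536)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)


def docker_run_requests(image: str, container_id: str) -> List[bytes]:
    """Simplified view of `docker run -d IMAGE`: create, then start."""
    return [
        build_request("POST", "/containers/create", {"Image": image}),
        # container_id would come from the JSON response to the create call
        build_request("POST", f"/containers/{container_id}/start"),
    ]
```

Against a running daemon, `send(docker_run_requests("nginx", "<id>")[0])` would return the raw HTTP response to the create call, whose JSON body includes the new container's `Id`; that ID is what the start request needs.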

Docker Components (Basic and Advanced):

Docker's components fall into two categories: basic and advanced. The basic components are the Docker client, Docker image, Docker daemon, Docker networking, Docker registry, and Docker container, whereas Docker Compose and Docker Swarm are the advanced components.

Basic Docker Components:

Let's dive into the basic Docker components:

- Docker Client: The client is the component that lets users communicate with Docker, which makes it one of the basic components. Because Docker uses a client-server architecture, the client can connect to the Docker host either locally or remotely. Whenever a user issues a Docker command, the client sends it through the Docker API to the Docker daemon, which carries it out. The client can also communicate with more than one daemon, so working with multiple hosts is not an issue.

- Docker Image: Docker images are used to build containers and hold the metadata that describes a container's capabilities. Images are read-only templates made up of multiple layers, where each layer builds on the one below it. The first layer is the base layer, which typically contains a minimal operating system or a parent image. Layers containing the application's dependencies sit above this base layer. Each filesystem-changing instruction in the image's Dockerfile (such as RUN or COPY) produces one of these read-only layers. Images can be shared with different teams in an organization through a private container registry, or outside the organization through a public registry.

- Docker Daemon: The Docker daemon is among the most essential components of Docker because it directly carries out container-related actions. It runs as a background process that manages Docker objects such as networks, storage volumes, containers, and images. When a user starts a container with docker run, the client translates the command into HTTP API calls and sends them to the daemon. The daemon listens for these Docker API requests, processes them, and interacts with the operating system to perform the tasks. A daemon can also communicate with other daemons to manage Docker services.

- Docker Networking: As the name suggests, Docker networking is the component that enables communication between containers. Docker comes with five main network drivers, described below.

- None: This driver disables networking for a container, so it cannot communicate with other containers.

- Bridge: Bridge is the default network driver, used when containers running on the same Docker host need to communicate with each other.

- Host: In some cases, the user does not need isolation between a container and the Docker host. The host network driver removes this isolation, so the container uses the host's networking directly.

- Overlay: The overlay network driver connects multiple Docker hosts, allowing swarm services to communicate when their containers run on different hosts.

- macvlan: This driver makes a container appear as a physical device on the network by assigning it its own MAC address, and traffic is routed to containers by their MAC addresses.

- Docker Registry: Docker images need a location where they can be stored, and the Docker registry is that location. Registries can be public or private, and Docker Hub is the default public registry. Every time a docker pull command runs, the image is pulled from the registry where it is stored; conversely, docker push uploads an image to the specified registry.

- Docker Container: A Docker container is a runnable instance of an image that can be created, started, stopped, moved, or deleted through the Docker API. Containers are a lightweight, self-contained way of running applications. A container can be connected to one or more networks, and a new image can be created from its current state. Containers are ephemeral: any application data stored only inside a container is lost when the container is removed. Containers are largely isolated from one another and have defined resources.
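The Docker Client item above notes that the client can talk to a local or a remote daemon. The Docker CLI picks its target from the `DOCKER_HOST` environment variable and, on Linux, falls back to the local UNIX socket. The Python sketch below mimics that decision; `resolve_docker_host` is a hypothetical helper, and it ignores Docker contexts and TLS settings, which the real CLI also takes into account.

```python
import os
from typing import Mapping, Optional, Tuple
from urllib.parse import urlparse

DEFAULT_HOST = "unix:///var/run/docker.sock"  # Linux default


def resolve_docker_host(env: Optional[Mapping[str, str]] = None) -> Tuple:
    """Return (scheme, target...) describing which daemon the client would use."""
    env = os.environ if env is None else env
    url = urlparse(env.get("DOCKER_HOST", DEFAULT_HOST))
    if url.scheme == "unix":
        return ("unix", url.path)  # local daemon reached through a socket file
    if url.scheme in ("tcp", "ssh"):
        return (url.scheme, url.hostname, url.port)  # remote daemon
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")
```

Switching `DOCKER_HOST` between values such as `tcp://10.0.0.5:2376` (a made-up address) and the default socket is one way a single client can work with several daemons.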
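The layered structure described in the Docker Image item can be modelled in a few lines of Python. This is a simplified sketch, not how storage drivers such as overlay2 are implemented: each layer is a dictionary of paths, a lookup searches from the top layer down, a hypothetical `WHITEOUT` marker hides a file deleted in a higher layer (the OCI image format records deletions with similar "whiteout" entries), and a container writes only to its own writable layer, leaving the shared read-only image untouched.

```python
from typing import Any, Dict, List, Optional

WHITEOUT = object()  # hypothetical marker: "this path was deleted in this layer"

Layer = Dict[str, Any]


def read_file(layers: List[Layer], path: str) -> Optional[str]:
    """Look a path up from the top layer down, as a union filesystem does."""
    for layer in reversed(layers):
        if path in layer:
            value = layer[path]
            return None if value is WHITEOUT else value
    return None


# Hypothetical image: base layer, dependency layer, application layer
base: Layer = {"/etc/os-release": "Debian", "/tmp/build.log": "build output"}
deps: Layer = {"/usr/lib/libssl.so": "3.0"}
app: Layer = {"/app/server.py": "print('hello')", "/tmp/build.log": WHITEOUT}
image = [base, deps, app]  # read-only, shared by every container

# Each container adds its own thin writable layer on top of the image
container_layer: Layer = {}
container_view = image + [container_layer]
container_layer["/etc/os-release"] = "Debian (patched)"  # copy-on-write

print(read_file(container_view, "/etc/os-release"))  # Debian (patched)
print(read_file(image, "/etc/os-release"))  # Debian
```

Because the write lands in `container_layer`, the base layer still holds the original file, which is why many containers can safely share one image.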
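For the bridge driver described above, containers on the same bridge network receive addresses from the network's subnet, with the first address used by the gateway. On many installations the default bridge uses `172.17.0.0/16` with gateway `172.17.0.1`, although this can be configured differently. The toy allocator below is a hypothetical `BridgeNetwork` class built on Python's standard `ipaddress` module; Docker's real IP address management also handles releases, custom ranges, and IPv6.

```python
import ipaddress
from typing import Dict


class BridgeNetwork:
    """Toy IP allocator: the gateway takes the first host address, containers the rest."""

    def __init__(self, subnet: str = "172.17.0.0/16") -> None:
        self._free = iter(ipaddress.ip_network(subnet).hosts())
        self.gateway = next(self._free)
        self.assigned: Dict[str, ipaddress.IPv4Address] = {}

    def connect(self, container: str) -> ipaddress.IPv4Address:
        """Attach a container, reusing its address if it is already connected."""
        if container not in self.assigned:
            self.assigned[container] = next(self._free)
        return self.assigned[container]


net = BridgeNetwork()
web = net.connect("web")
db = net.connect("db")
print(net.gateway, web, db)  # 172.17.0.1 172.17.0.2 172.17.0.3
```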
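The Docker Registry item mentions that Docker Hub is the default registry. In practice this shows up in how short image names expand: `nginx` is shorthand for `docker.io/library/nginx:latest`, where `library/` is the namespace for official images and `latest` is the default tag. The `normalize` function below is a simplified, hypothetical re-implementation of that rule; it does not handle digest references or validate names.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def normalize(ref: str) -> ImageRef:
    """Expand a short image name into registry, repository, and tag (simplified)."""
    name, tag = ref, "latest"  # "latest" is the default tag
    # A tag follows the last ':' only if that ':' comes after the last '/'
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    # The first component is a registry host if it looks like one
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository  # official images live under library/
    return ImageRef(registry, repository, tag)


print(normalize("nginx"))  # ImageRef(registry='docker.io', repository='library/nginx', tag='latest')
```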
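The container lifecycle described in the Docker Container item can be sketched as a small state machine. The `Container` class and `ACTIONS` table are hypothetical and simplified: Docker also has `restarting` and `dead` states, and `docker rm -f` can remove a running container. Clearing `writable_layer` on removal mirrors the point that data kept only inside a container disappears with it, while data stored in volumes survives.

```python
from typing import Dict, Set, Tuple

# action -> (states the action is allowed from, resulting state); simplified
ACTIONS: Dict[str, Tuple[Set[str], str]] = {
    "start": ({"created", "exited"}, "running"),
    "pause": ({"running"}, "paused"),
    "unpause": ({"paused"}, "running"),
    "stop": ({"running"}, "exited"),
    "remove": ({"created", "exited"}, "removed"),
}


class Container:
    def __init__(self, image: str) -> None:
        self.image = image  # the read-only image this container runs
        self.state = "created"
        self.writable_layer: Dict[str, str] = {}  # files written inside the container

    def apply(self, action: str) -> None:
        """Perform a lifecycle action, rejecting transitions that are not allowed."""
        allowed_from, new_state = ACTIONS[action]
        if self.state not in allowed_from:
            raise ValueError(f"cannot {action} a container that is {self.state}")
        self.state = new_state
        if new_state == "removed":
            self.writable_layer.clear()  # container-only data is gone


c = Container("nginx")
c.apply("start")
c.writable_layer["/tmp/session"] = "abc"
c.apply("stop")
c.apply("remove")
print(c.state, c.writable_layer)  # removed {}
```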

Docker Advanced Components:

- Docker Compose: Sometimes you want to run multiple containers as a single service. Docker Compose is designed specifically for this. It keeps the containers isolated from one another while still letting them interact. Compose environments are defined in YAML files.

- Docker Swarm: Developers and IT admins who want to create or manage a cluster of Docker nodes can use Docker Swarm. There are two types of swarm nodes: manager and worker. Manager nodes handle cluster management tasks, whereas worker nodes receive and execute the tasks sent by the manager nodes. Regardless of type, every swarm node runs a Docker daemon, and the nodes communicate through the Docker API.
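The Docker Compose item above describes running several containers as one application. One thing Compose does with such a definition is start services in dependency order, based on each service's `depends_on` list. The sketch below expresses a hypothetical `web`/`api`/`db`/`cache` stack as a Python dictionary shaped like the `services:` section of a Compose YAML file and derives a valid start order with the standard library's `graphlib` (Python 3.9+). It ignores health-check conditions and everything else Compose does.

```python
from graphlib import TopologicalSorter
from typing import Dict, List

# Hypothetical stack, mirroring the shape of a Compose file's `services:` section
services: Dict[str, dict] = {
    "web": {"image": "example/web", "depends_on": ["api"]},
    "api": {"image": "example/api", "depends_on": ["db", "cache"]},
    "db": {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def startup_order(services: Dict[str, dict]) -> List[str]:
    """Return an order in which every service starts after its dependencies."""
    # TopologicalSorter expects: node -> set of nodes that must come first
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    # static_order() raises graphlib.CycleError on circular dependencies
    return list(TopologicalSorter(graph).static_order())


order = startup_order(services)
print(order)
```

`db` and `cache` have no dependencies on each other, so either may come first; only the relative order along each dependency chain is guaranteed.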
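In the Docker Swarm item, managers decide where tasks run and workers execute them. By default, Swarm tends to spread a service's replicas across the available nodes; the `schedule` function below is a hypothetical, much-simplified version of that idea that always places the next replica on the node currently running the fewest tasks. The real scheduler also weighs resource reservations, placement constraints, and node availability.

```python
from typing import Dict, List, Optional


def schedule(
    replicas: int, nodes: List[str], running: Optional[Dict[str, int]] = None
) -> Dict[str, List[int]]:
    """Assign replica numbers 1..replicas, each to the least-loaded node."""
    load = {node: (running or {}).get(node, 0) for node in nodes}
    plan: Dict[str, List[int]] = {node: [] for node in nodes}
    for replica in range(1, replicas + 1):
        # Fewest tasks wins; ties go to the node listed first
        target = min(nodes, key=lambda node: load[node])
        plan[target].append(replica)
        load[target] += 1
    return plan


print(schedule(5, ["node-1", "node-2", "node-3"]))
# {'node-1': [1, 4], 'node-2': [2, 5], 'node-3': [3]}
```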

Docker Community Edition (CE) VS Docker Enterprise Edition (EE)

Docker comes in two variants: Docker Community Edition (CE) and Docker Enterprise Edition (EE). Docker EE, launched in 2017, absorbed the existing Docker Datacenter product and was created specifically for business deployment needs. The free-to-use Docker CE, on the other hand, is geared toward development.

That is only the tip of the iceberg. Here are the most significant differences between the two versions of Docker.

- Purpose: Docker Community Edition is an open-source platform for application development. It is aimed at developers and operations teams who want to handle containerization themselves. Docker Enterprise Edition, on the other hand, is built for mission-critical applications.

- Functionalities and Features: In terms of core functionality, Docker CE and EE are similar. Docker CE covers a developer's primary requirements, but Docker EE adds extra features, including certified images and plugins, Docker Datacenter with different tiers of options, vulnerability scan results, and official same-day support.

- New Releases: Docker CE and EE differ considerably in how new releases are made. Docker CE has two release channels: edge and stable. Edge releases are published every month but may contain issues, whereas stable releases come out every three months and are more reliable. New Docker EE releases, in contrast, come out every three months, and each release is supported and maintained for a year.

- Pricing: The final difference is pricing. Docker CE is entirely free for its users, whereas Docker EE pricing starts at $1,500 per node per year and goes up to $3,500 per node per year. Users can also get a one-month free trial of Docker EE.

Docker Development Best Practices:

Docker has undeniably changed how software is developed and delivered, and following best practices improves delivery further. Below are some Docker best practices that will help you get the desired results.

- Keep the Image Size Small: When picking a Node.js image, for example, you will find several official images. They differ mainly in their operating system distribution and version number. When choosing among them, keep in mind that a smaller image makes the whole process faster: it consumes less storage and transfers more quickly.

- Keep Docker Updated: When you begin work on a new Docker project, make sure Docker is updated to the latest version and that the images you use are also up to date. This basic practice gives you the latest features while reducing exposure to security vulnerabilities.

- Only Use Official Images: If you want to develop a Node.js application and run it as a Docker image, use an official node image as the base rather than a bare operating system image. This gives you a cleaner Dockerfile, because the official image is already built following recommended practices.
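The "Keep the Image Size Small" advice can be illustrated with a little arithmetic. A pull downloads only the layers that are not already cached locally, so both a smaller base image and reusing cached layers cut the transfer size. Official images often come in slimmer variants (for example, `node` tags ending in `-slim` or `-alpine`), but the layer names and megabyte figures below are made up purely for illustration, and `pull_size_mb` is a hypothetical helper.

```python
from typing import Dict, Iterable, Set

# Hypothetical layer sizes in megabytes
LAYER_SIZES_MB: Dict[str, float] = {
    "full-base": 120.0,
    "full-deps": 40.0,
    "full-app": 5.0,
    "slim-base": 30.0,
    "slim-deps": 25.0,
    "slim-app": 5.0,
}


def pull_size_mb(layers: Iterable[str], cached: Set[str]) -> float:
    """Megabytes a pull would download: every layer not already cached."""
    return sum(LAYER_SIZES_MB[layer] for layer in layers if layer not in cached)


full_image = ["full-base", "full-deps", "full-app"]
slim_image = ["slim-base", "slim-deps", "slim-app"]

print(pull_size_mb(full_image, cached=set()))  # 165.0
print(pull_size_mb(slim_image, cached=set()))  # 60.0
# After a code change, only the top application layer is new
print(pull_size_mb(slim_image, cached={"slim-base", "slim-deps"}))  # 5.0
```

Keeping frequently changing content in the top layers is what lets the last case download only a few megabytes.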

Docker Services:

Docker is a vast topic, and this article has covered its crucial elements. There are countless ways to use Docker, but every team working with it shares the same goal: faster software delivery.

ThinkSys Inc. is a renowned name in the DevOps industry, offering all the primary DevOps services, including Docker. Whether you want to learn more about Docker or implement Docker best practices in your organization, simply connect with ThinkSys Inc. Our professionals will take every measure to get the best results for your organization using Docker.

Below are the Docker services provided by ThinkSys Inc. Our team is proficient in delivering all of these services effectively to keep your Dockerized environment functional.

- Docker Consulting: Get expert guidance on Docker from experienced ThinkSys professionals, who will recommend the right methodologies for implementing Docker in your organization.

- Docker Implementation: Want professional assistance implementing Docker? ThinkSys Inc. is a pioneer in helping organizations implement Docker. Our experts will analyze your requirements and the goals you want to achieve, then create an implementation blueprint based on them.

- Container Management: Effectively manage the containers behind your Kubernetes-based mobile and web apps. Our container management service includes automatic container creation, deployment, and scaling.

- Docker Customization: Give your Docker containers a personal touch with custom plugins and APIs tailored to your organization's requirements and expectations.

- Docker Container Management: Docker containers may develop issues that need immediate attention. With our Docker container management service, our experts analyze your containers and detect such issues. Managing the containers to keep their performance top-notch is also part of this service.

- Docker Support: Having trouble after implementing Docker? ThinkSys offers Docker support whenever you need it, so your application deployment is never halted by unforeseen circumstances. Our qualified team will identify and fix the issue as quickly as possible.

FAQ: Docker Components

Q1: What is a Docker Container?

Q2: What are Docker Components?

Basic Docker Components:

a. Docker Client.

b. Docker Image.

c. Docker Daemon.

d. Docker Networking.

e. Docker Registry.

f. Docker Container.

Advanced Docker Components:

a. Docker Compose.

b. Docker Swarm.

Q3: What is the Purpose of Docker?

Q4: What is Docker Engine?

Q5: What is containerd? How is it different from Docker?

Although some people think containerd and Docker are the same, the key difference is that containerd is a container runtime, whereas Docker is a collection of technologies for working with containers (Docker Engine itself uses containerd under the hood).

Q6: What is a Docker Hub?

Q7: How is Docker used with Microservices?

Q8: What is a good alternative for Docker?

a. Buildah.

b. runc.

c. LXD.

d. Podman.

e. Kaniko.

Q9: Which Organizations are using Docker?

Q10: Is Docker the future of virtualization?

Q11: What is the difference between image and container in Docker?

Q12: Is it preferable to run more than one application in a single Docker container?

Q13: What is the difference between Podman and Docker?
